
3D Machine Learning

In recent years, a tremendous amount of progress has been made in the field of 3D Machine Learning, an interdisciplinary field that fuses computer vision, computer graphics and machine learning. This repo is derived from my study notes and will be used as a place for triaging new research papers.

I'll use the following icons to differentiate 3D representations:

- 📷 Multi-view Images

- 👾 Volumetric

- 🎲 Point Cloud

- 💎 Polygonal Mesh

- 💊 Primitive-based

To find related papers and their relationships, check out Connected Papers, which provides a neat way to visualize the academic field in a graph representation.

Get Involved

To contribute to this repo, you may add content through pull requests or open an issue to let me know.

We have also created a Slack workplace for people around the globe to ask questions, share knowledge and facilitate collaborations. Together, I'm sure we can advance this field as a collaborative effort. Join the community with this link.

Table of Contents

- Courses

- Datasets

- 3D Models

- 3D Scenes

- 3D Pose Estimation

- Single Object Classification

- Multiple Objects Detection

- Scene/Object Semantic Segmentation

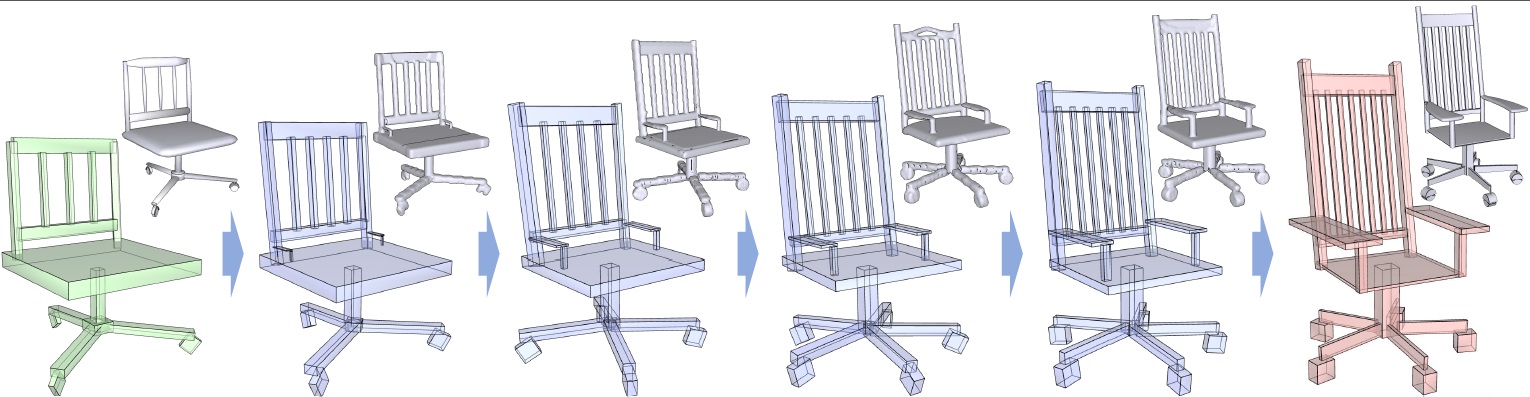

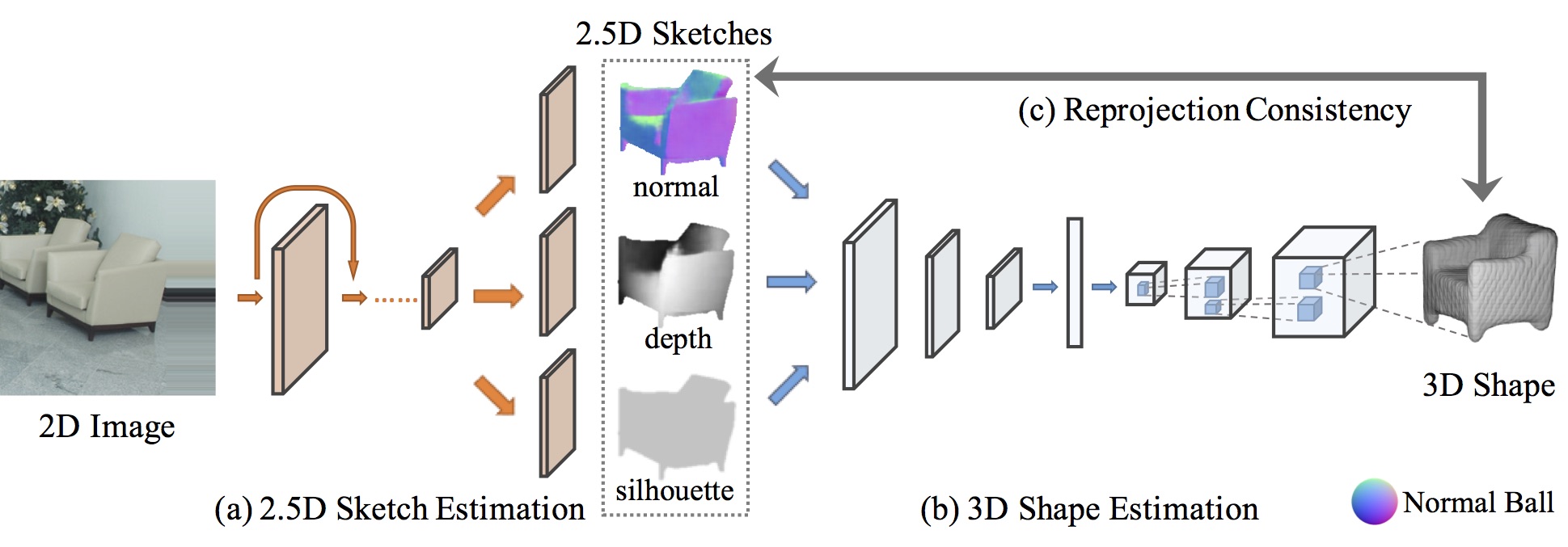

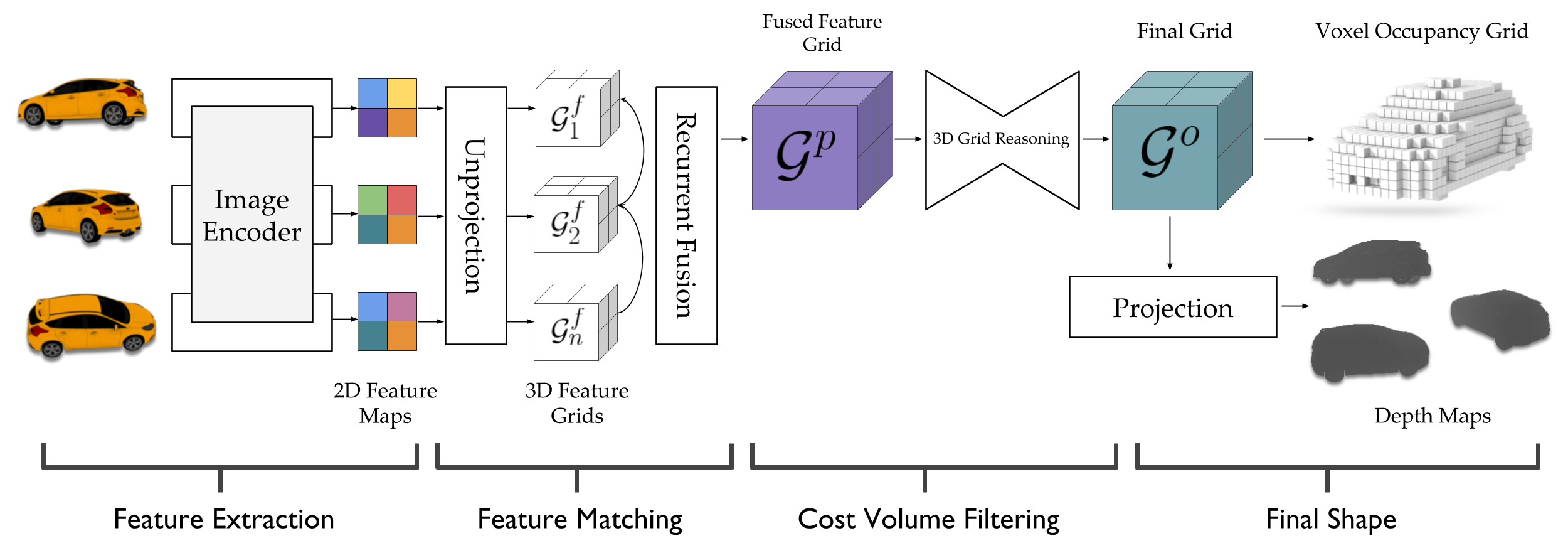

- 3D Geometry Synthesis/Reconstruction

- Parametric Morphable Model-based methods

- Part-based Template Learning methods

- Deep Learning Methods

- Texture/Material Analysis and Synthesis

- Style Learning and Transfer

- Scene Synthesis/Reconstruction

- Scene Understanding

Available Courses

Stanford CS231A: Computer Vision-From 3D Reconstruction to Recognition (Winter 2018)

UCSD CSE291-I00: Machine Learning for 3D Data (Winter 2018)

Stanford CS468: Machine Learning for 3D Data (Spring 2017)

MIT 6.838: Shape Analysis (Spring 2017)

Princeton COS 526: Advanced Computer Graphics (Fall 2010)

Princeton CS597: Geometric Modeling and Analysis (Fall 2003)

Geometric Deep Learning

Paper Collection for 3D Understanding

CreativeAI: Deep Learning for Graphics

Datasets

To see a survey of RGBD datasets, check out Michael Firman's collection as well as the associated paper, RGBD Datasets: Past, Present and Future. Point Cloud Library also has a good dataset catalogue.

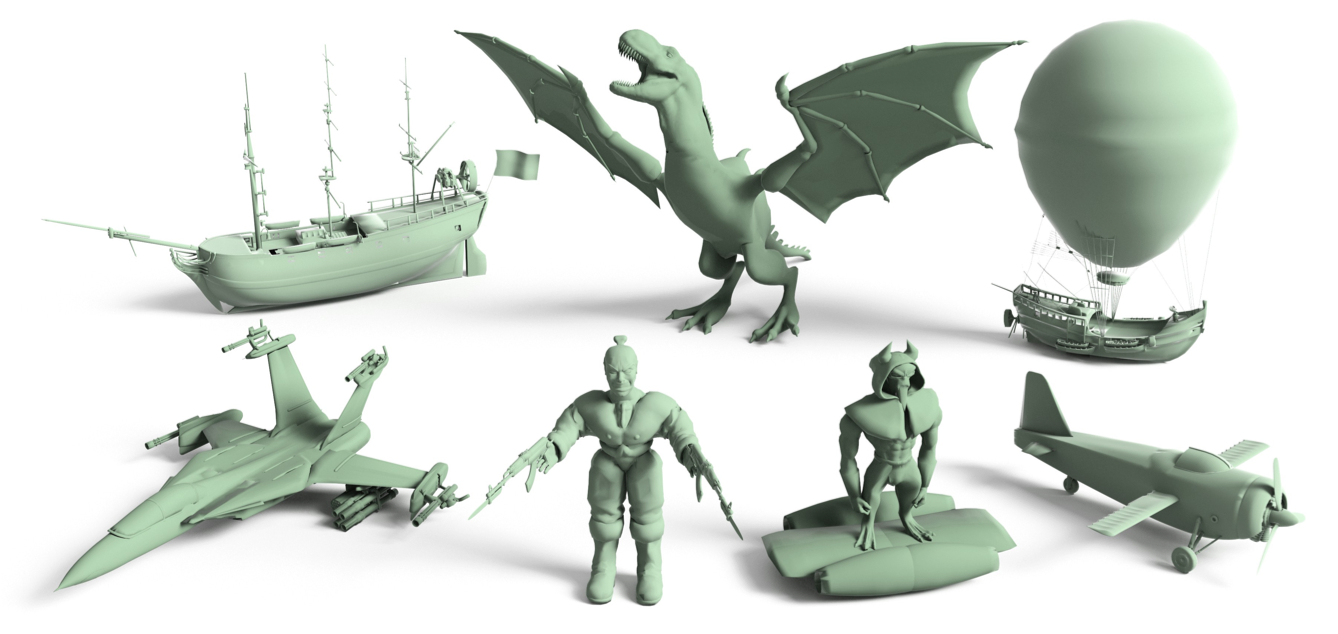

3D Models

Princeton Shape Benchmark (2003) [Link]

1,814 models collected from the web in .OFF format. Used to evaluate shape-based retrieval and analysis algorithms.
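Since several datasets in this list ship meshes as .OFF files, a minimal reader may help when inspecting them. The sketch below is an illustration, not part of any dataset's tooling: `parse_off` and `TETRA` are hypothetical names, and it assumes plain ASCII OFF with a separate `OFF` header token and no per-face color values.

```python
def parse_off(text):
    """Parse a minimal ASCII OFF mesh into (vertices, faces).

    Assumes the header token 'OFF' stands alone and faces carry no colors.
    """
    # Drop blank lines and '#' comments, then flatten everything into tokens.
    tokens = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if line:
            tokens.extend(line.split())
    if not tokens or tokens[0] != "OFF":
        raise ValueError("not an OFF file: missing 'OFF' header")
    n_vertices, n_faces = int(tokens[1]), int(tokens[2])
    pos = 4  # skip 'OFF', vertex count, face count, edge count
    vertices = []
    for _ in range(n_vertices):
        vertices.append(tuple(float(t) for t in tokens[pos:pos + 3]))
        pos += 3
    faces = []
    for _ in range(n_faces):
        k = int(tokens[pos])  # number of vertex indices in this face
        faces.append(tuple(int(t) for t in tokens[pos + 1:pos + 1 + k]))
        pos += 1 + k
    return vertices, faces


# A tetrahedron written by hand for illustration (not taken from any dataset).
TETRA = """OFF
4 4 6
0 0 0
1 0 0
0 1 0
0 0 1
3 0 1 2
3 0 1 3
3 0 2 3
3 1 2 3
"""

vertices, faces = parse_off(TETRA)
```

Files that fuse the header with the counts or append face colors would need extra handling that this sketch leaves out.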


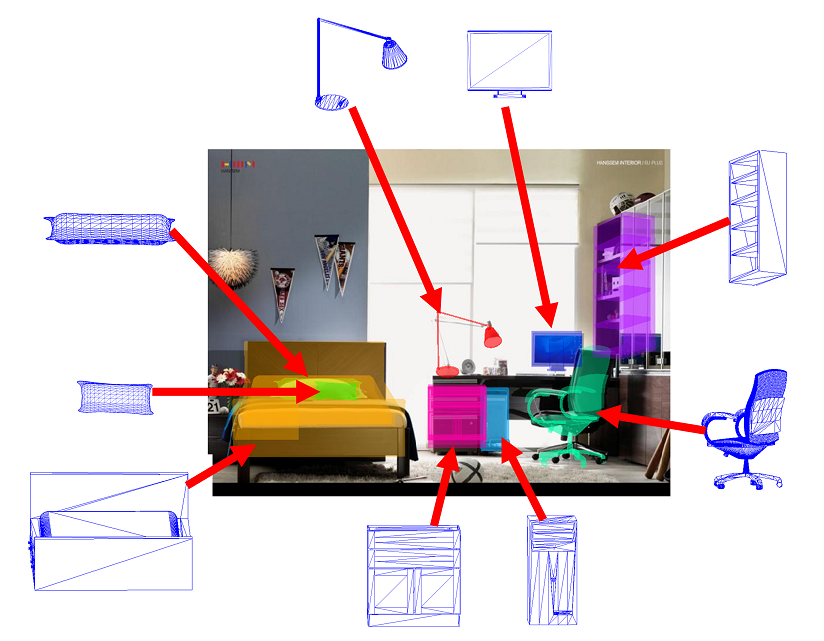

Dataset for IKEA 3D models and aligned images (2013) [Link]

759 images and 219 models including Sketchup (skp) and Wavefront (obj) files, good for pose estimation.

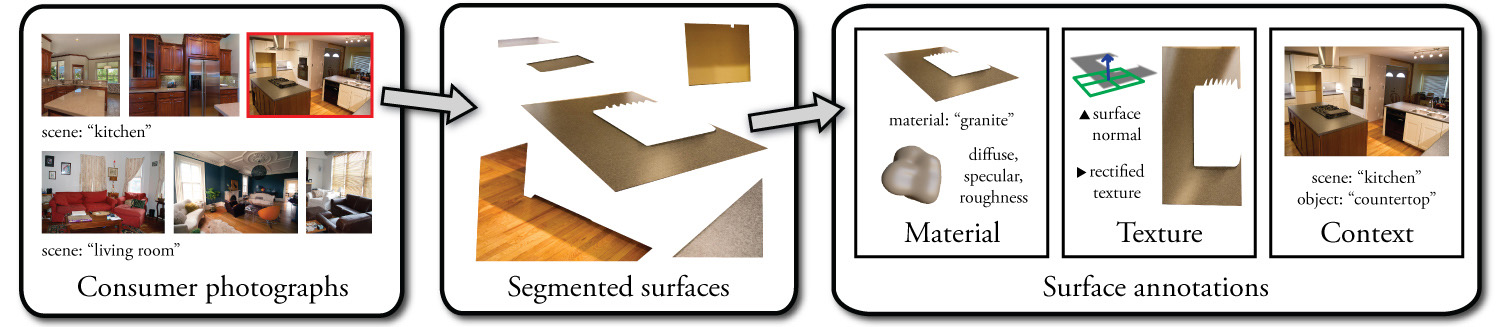

OpenSurfaces: A Richly Annotated Catalog of Surface Appearance (SIGGRAPH 2013) [Link]

OpenSurfaces is a large database of annotated surfaces created from real-world consumer photographs. Our annotation framework draws on crowdsourcing to segment surfaces from photos, and then annotate them with rich surface properties, including material, texture and contextual information.

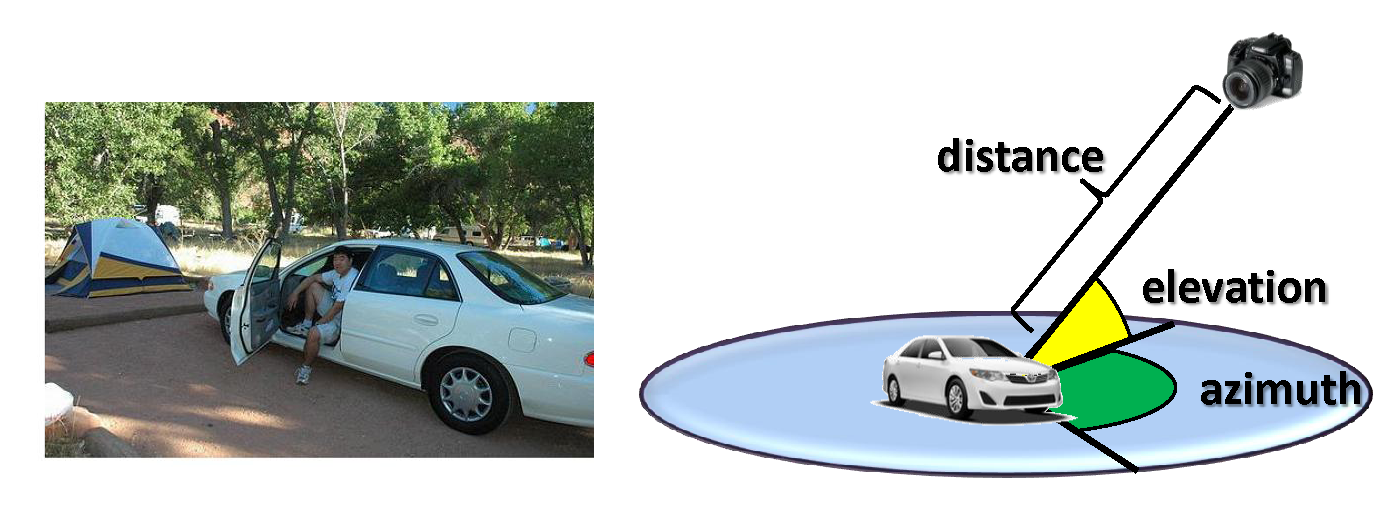

PASCAL3D+ (2014) [Link]

12 categories, on average 3k+ objects per category, for 3D object detection and pose estimation.

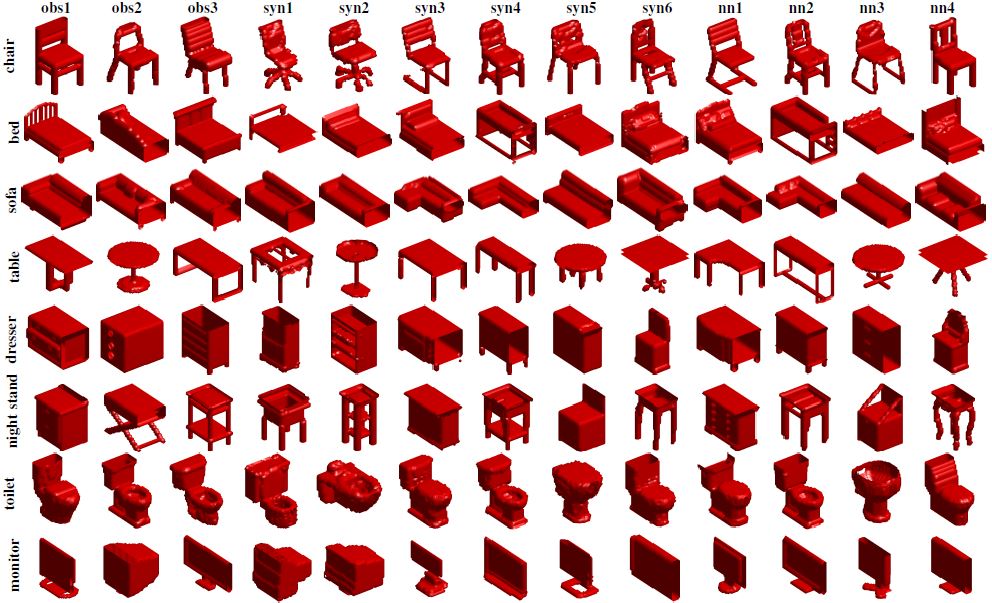

ModelNet (2015) [Link]

127915 3D CAD models from 662 categories

ModelNet10: 4899 models from 10 categories

ModelNet40: 12311 models from 40 categories, all are uniformly orientated

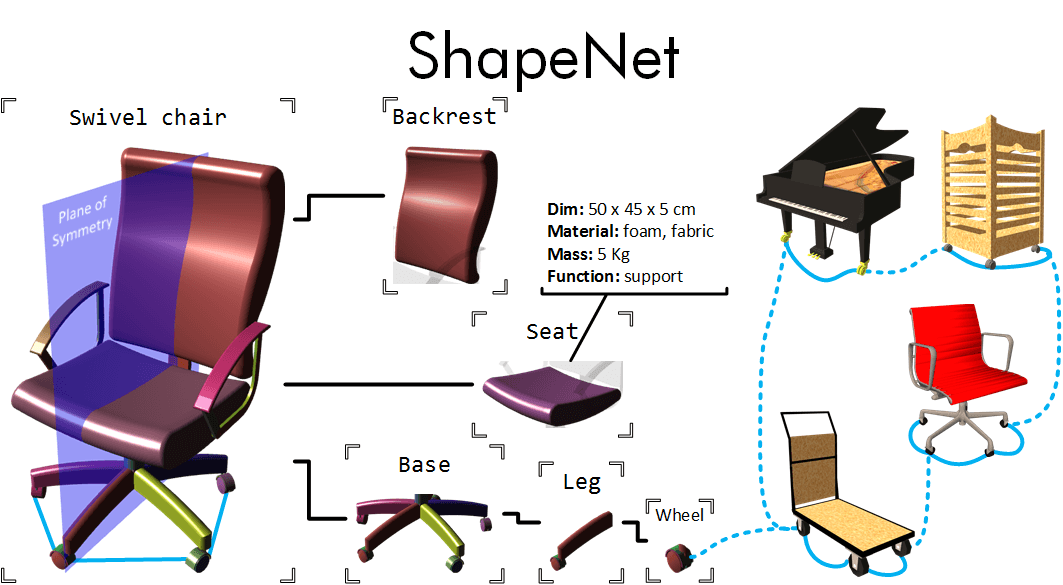

ShapeNet (2015) [Link]

3 million+ models and 4K+ categories. A dataset that is large in scale, well organized and richly annotated.

ShapeNetCore [Link]: 51300 models for 55 categories.

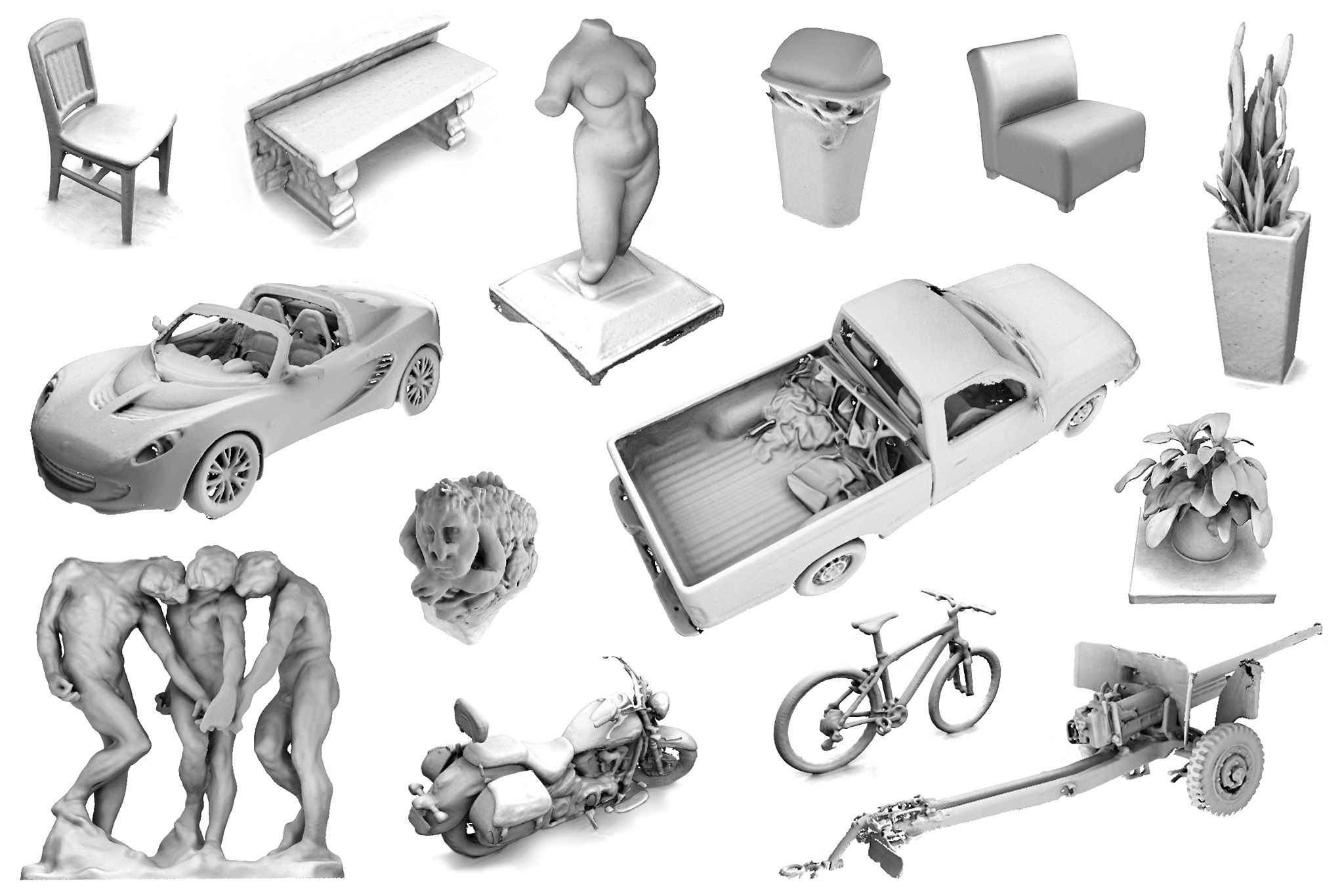

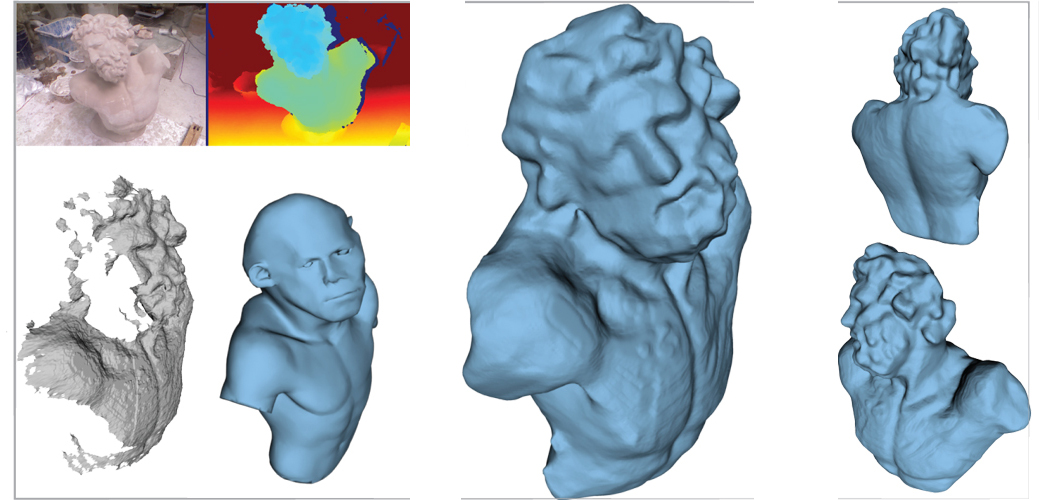

A Large Dataset of Object Scans (2016) [Link]

10K scans in RGBD + reconstructed 3D models in .PLY format.

ObjectNet3D: A Large Scale Database for 3D Object Recognition (2016) [Link]

100 categories, 90,127 images, 201,888 objects in these images and 44,147 3D shapes.

Tasks: region proposal generation, 2D object detection, joint 2D detection and 3D object pose estimation, and image-based 3D shape retrieval

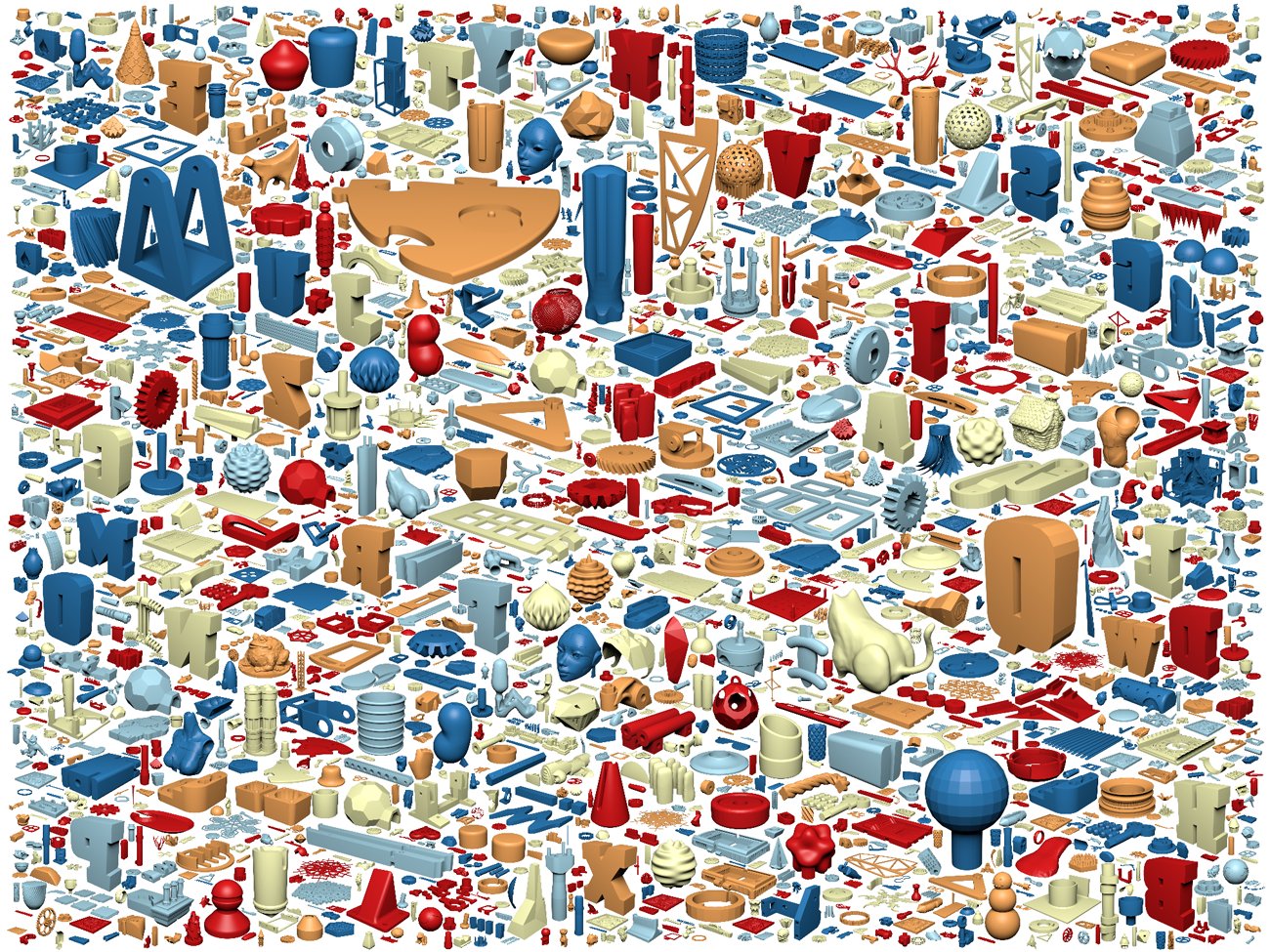

Thingi10K: A Dataset of 10,000 3D-Printing Models (2016) [Link]

10,000 models from featured "things" on thingiverse.com, suitable for testing 3D printing techniques such as structural analysis, shape optimization, or solid geometry operations.

ABC: A Big CAD Model Dataset For Geometric Deep Learning [Link][Paper]

This work introduces a dataset for geometric deep learning consisting of over 1 million individual (and high quality) geometric models, each associated with accurate ground truth information on the decomposition into patches, explicit sharp feature annotations, and analytic differential properties.

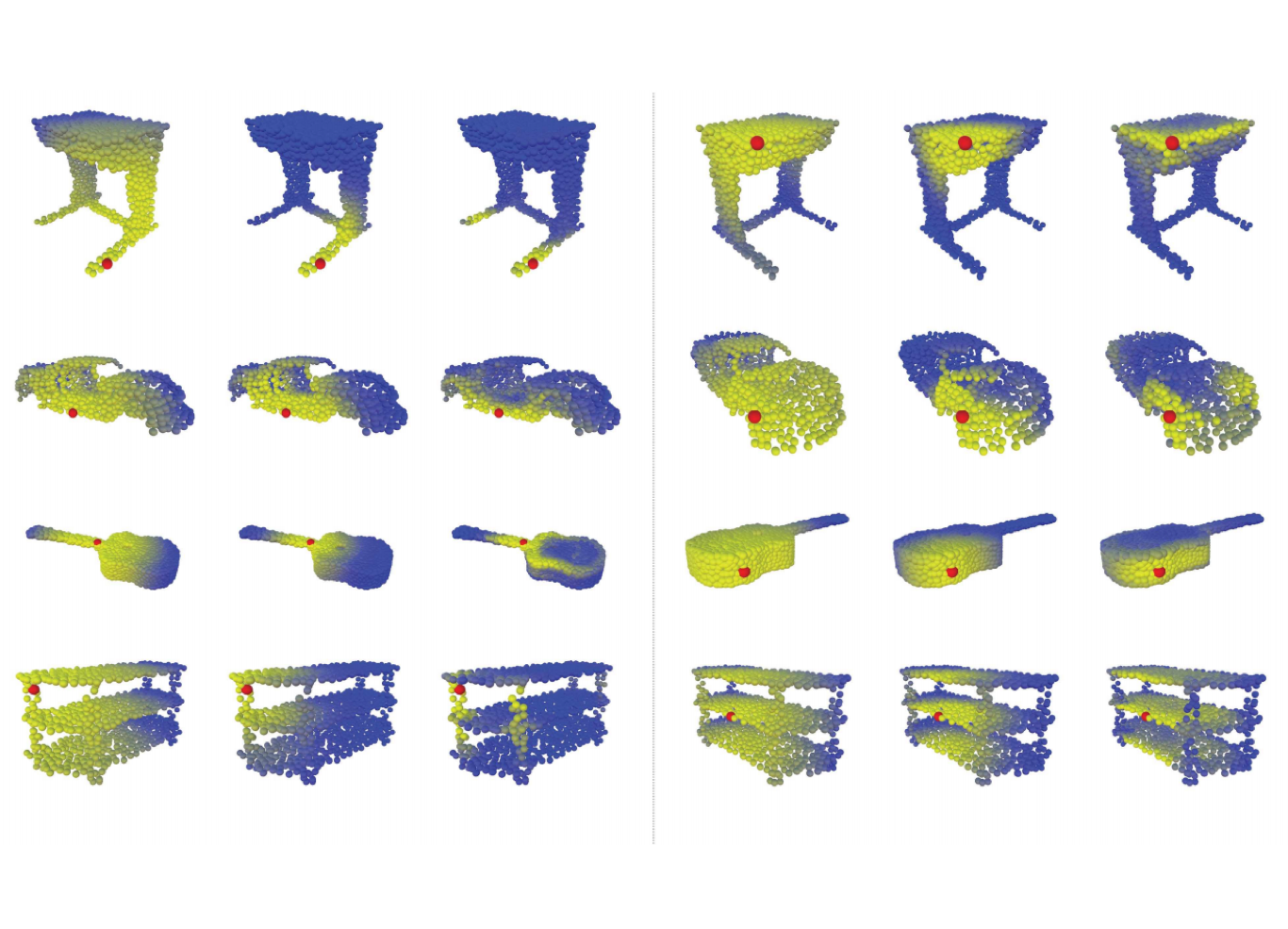

This work introduces ScanObjectNN, a new real-world point cloud object dataset based on scanned indoor scene data. The comprehensive benchmark in this work shows that this dataset poses great challenges to existing point cloud classification techniques, as objects from real-world scans are often cluttered with background and/or are partial due to occlusions. Three key open problems for point cloud object classification are identified, and a new point cloud classification neural network that achieves state-of-the-art performance on classifying objects with cluttered background is proposed.

VOCASET: Speech-4D Head Scan Dataset (2019) [Link][Paper]

VOCASET is a 4D face dataset with about 29 minutes of 4D scans captured at 60 fps and synchronized audio. The dataset has 12 subjects and 480 sequences of about 3-4 seconds each with sentences chosen from an array of standard protocols that maximize phonetic diversity.

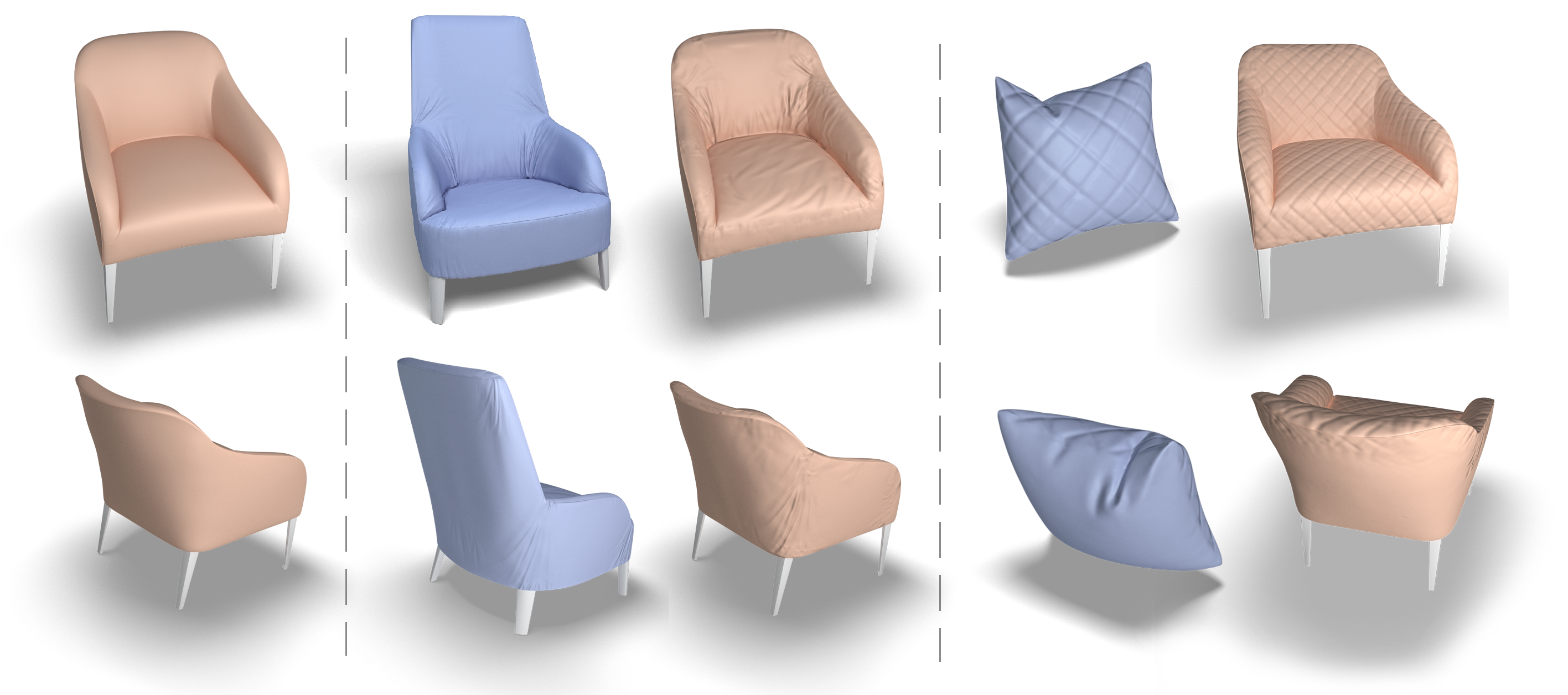

3D-FUTURE: 3D FUrniture shape with TextURE (2020) [Link]

3D-FUTURE contains 20,000+ clean and realistic synthetic scenes in 5,000+ diverse rooms, which include 10,000+ unique high quality 3D instances of furniture with high resolution informative textures developed by professional designers.

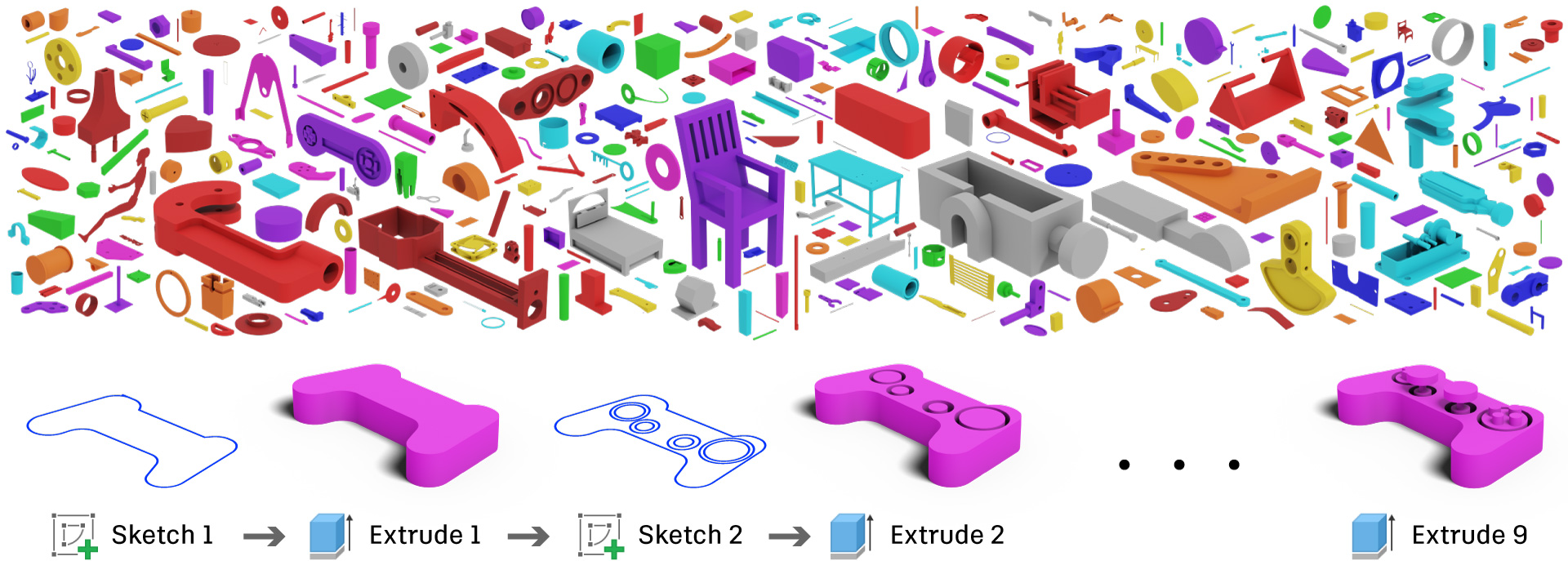

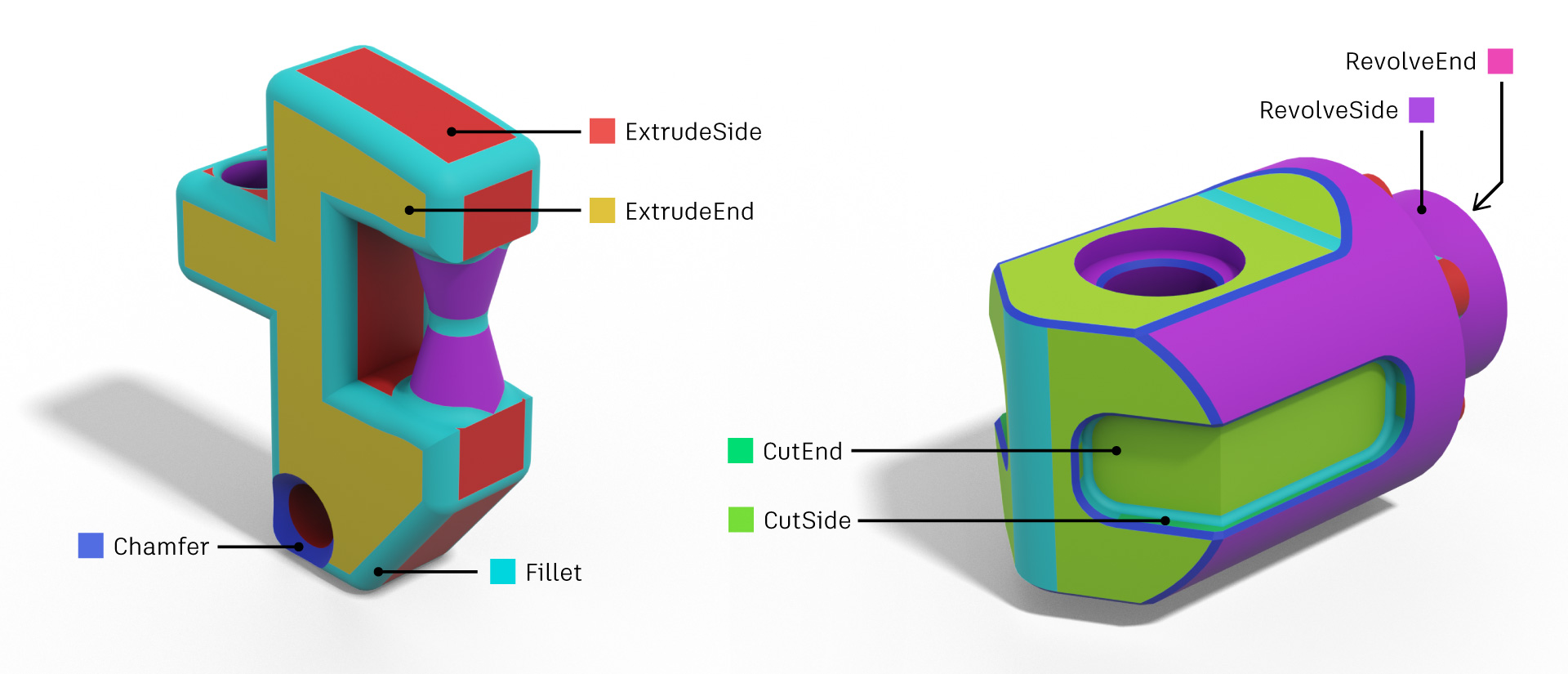

Fusion 360 Gallery Dataset (2020) [Link][Paper]

The Fusion 360 Gallery Dataset contains rich 2D and 3D geometry data derived from parametric CAD models. The Reconstruction Dataset provides sequential construction sequence data from a subset of simple 'sketch and extrude' designs. The Segmentation Dataset provides a segmentation of 3D models based on the CAD modeling operation, including B-Rep format, mesh, and point cloud.

Mechanical Components Benchmark (2020)[Link][Paper]

MCB is a large-scale dataset of 3D objects of mechanical components. It has a total of 58,696 mechanical components in 68 classes.

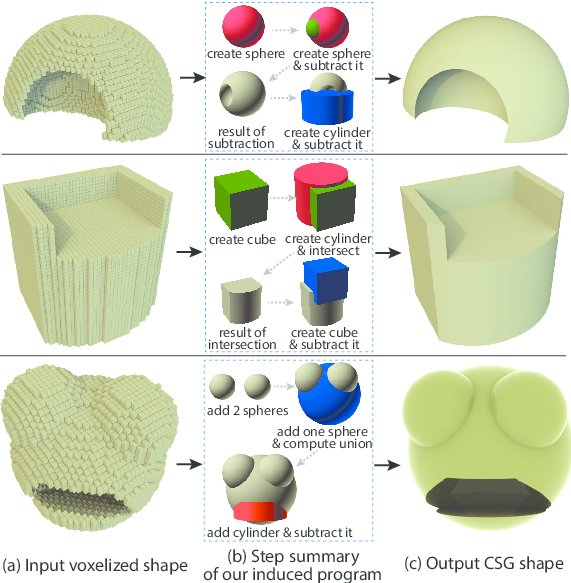

Combinatorial 3D Shape Dataset (2020) [Link][Paper]

Combinatorial 3D Shape Dataset is composed of 406 instances of 14 classes. Each object in our dataset is considered equivalent to a sequence of primitive placement. Compared to other 3D object datasets, our proposed dataset contains an assembling sequence of unit primitives. It implies that we can quickly obtain a sequential generation process that mimics a human assembling mechanism. Furthermore, we can sample valid random sequences from a given combinatorial shape after validating the sampled sequences. To sum up, the characteristics of our combinatorial 3D shape dataset are (i) combinatorial, (ii) sequential, (iii) decomposable, and (iv) manipulable.

3D Scenes

NYU Depth Dataset V2 (2012) [Link]

1449 densely labeled pairs of aligned RGB and depth images from Kinect video sequences for a variety of indoor scenes.

SUNRGB-D 3D Object Detection Challenge [Link]

19 object categories for predicting a 3D bounding box in real world dimension

Training set: 10,355 RGB-D scene images, Testing set: 2860 RGB-D images

SceneNN (2016) [Link]

100+ indoor scene meshes with per-vertex and per-pixel annotation.

ScanNet (2017) [Link]

An RGB-D video dataset containing 2.5 million views in more than 1500 scans, annotated with 3D camera poses, surface reconstructions, and instance-level semantic segmentations.

Matterport3D: Learning from RGB-D Data in Indoor Environments (2017) [Link]

10,800 panoramic views (in both RGB and depth) from 194,400 RGB-D images of 90 building-scale scenes of private rooms. Instance-level semantic segmentations are provided for region (living room, kitchen) and object (sofa, TV) categories.

SUNCG: A Large 3D Model Repository for Indoor Scenes (2017) [Link]

The dataset contains over 45K different scenes with manually created realistic room and furniture layouts. All of the scenes are semantically annotated at the object level.

MINOS: Multimodal Indoor Simulator (2017) [Link]

MINOS is a simulator designed to support the development of multisensory models for goal-directed navigation in complex indoor environments. MINOS leverages large datasets of complex 3D environments and supports flexible configuration of multimodal sensor suites. MINOS supports SUNCG and Matterport3D scenes.

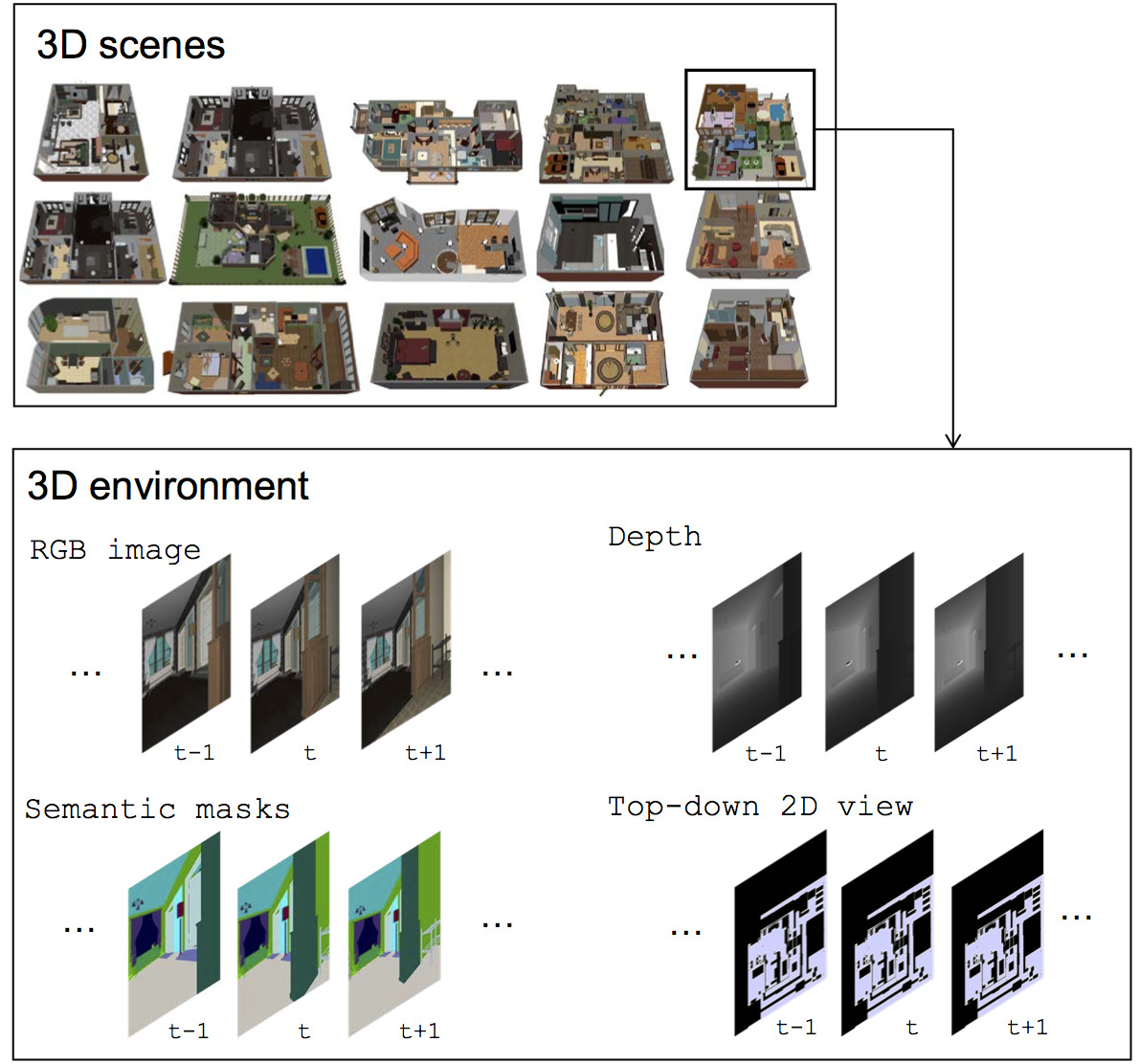

Facebook House3D: A Rich and Realistic 3D Environment (2017) [Link]

House3D is a virtual 3D environment which consists of 45K indoor scenes equipped with a diverse set of scene types, layouts and objects sourced from the SUNCG dataset. All 3D objects are fully annotated with category labels. Agents in the environment have access to observations of multiple modalities, including RGB images, depth, segmentation masks and top-down 2D map views.

HoME: a Household Multimodal Environment (2017) [Link]

HoME integrates over 45,000 diverse 3D house layouts based on the SUNCG dataset, a scale which may facilitate learning, generalization, and transfer. HoME is an open-source, OpenAI Gym-compatible platform extensible to tasks in reinforcement learning, language grounding, sound-based navigation, robotics, and multi-agent learning.

AI2-THOR: Photorealistic Interactive Environments for AI Agents [Link]

AI2-THOR is a photo-realistic interactable framework for AI agents. There are a total of 120 scenes in version 1.0 of the THOR environment covering four different room categories: kitchens, living rooms, bedrooms, and bathrooms. Each room has a number of actionable objects.

UnrealCV: Virtual Worlds for Computer Vision (2017) [Link][Paper]

An open source project to help computer vision researchers build virtual worlds using Unreal Engine 4.

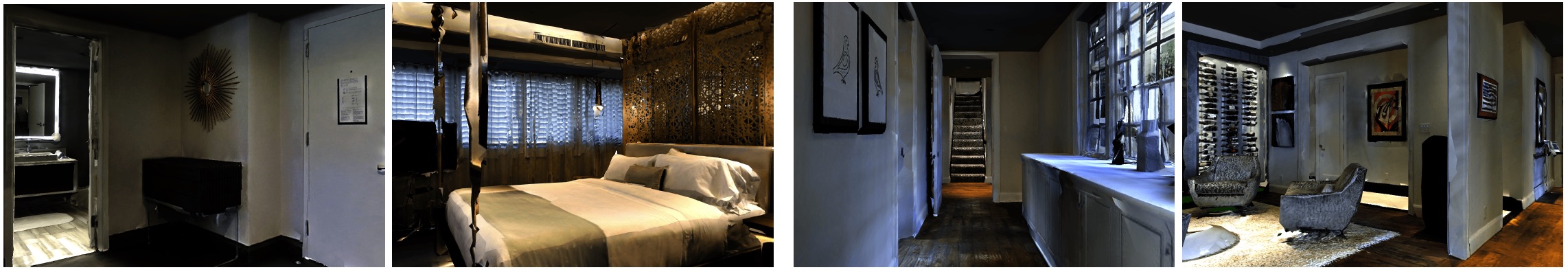

Gibson Environment: Real-World Perception for Embodied Agents (2018 CVPR) [Link]

This platform provides RGB from 1000 point clouds, as well as multimodal sensor data: surface normal, depth, and for a fraction of the spaces, semantic object annotations. The environment is also RL ready with physics integrated. Using such datasets can further narrow down the discrepancy between virtual environment and real world.


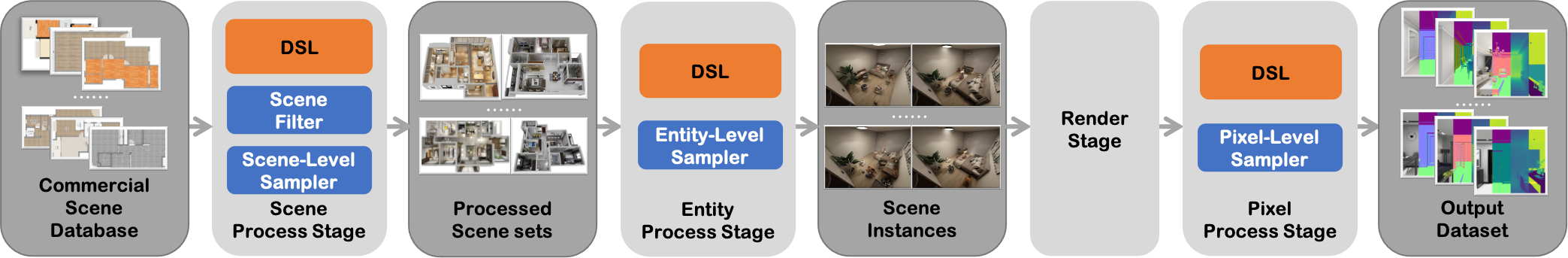

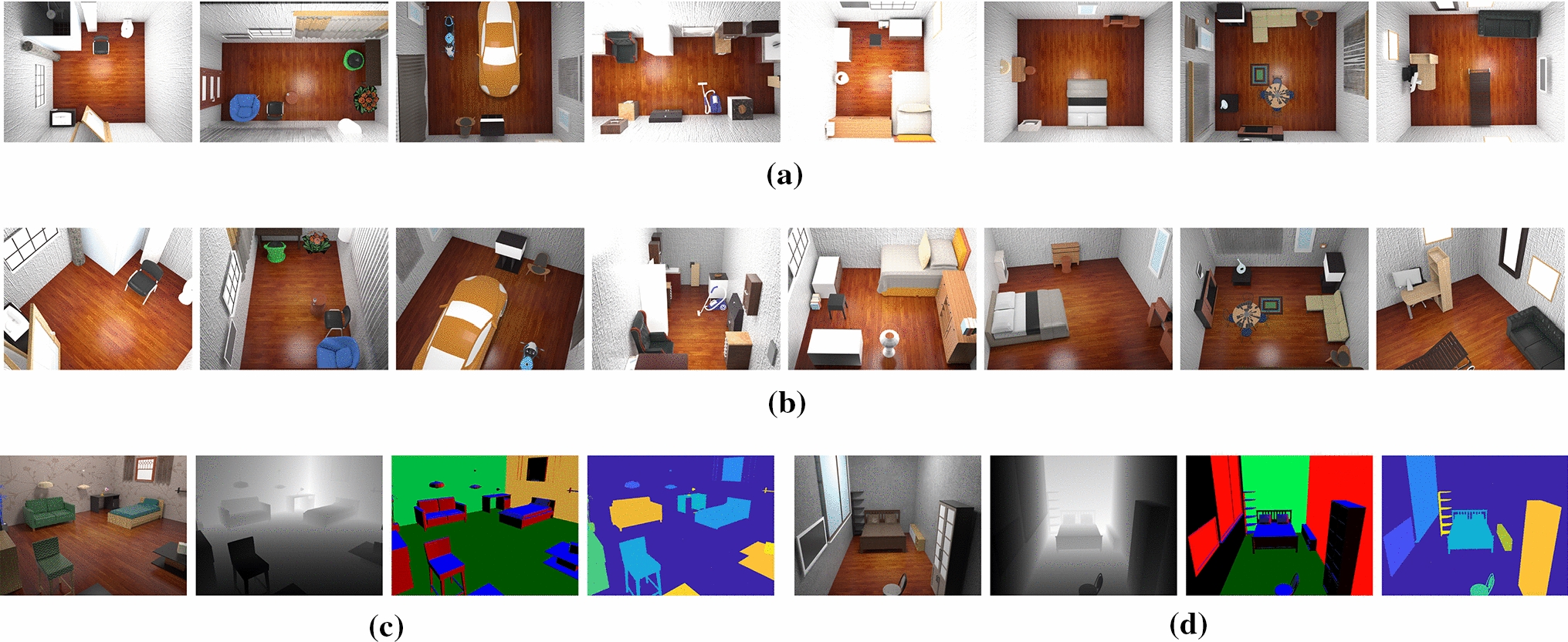

InteriorNet: Mega-scale Multi-sensor Photo-realistic Indoor Scenes Dataset [Link]

System Overview: an end-to-end pipeline to render an RGB-D-inertial benchmark for large scale interior scene understanding and mapping. Our dataset contains 20M images created by the pipeline: (A) We collect around 1 million CAD models provided by world-leading furniture manufacturers. These models have been used in real-world production. (B) Based on those models, around 1,100 professional designers create around 22 million interior layouts. Most of these layouts have been used in real-world decorations. (C) For each layout, we generate a number of configurations to represent different random lightings and simulation of scene change over time in daily life. (D) We provide an interactive simulator (ViSim) to help create ground truth IMU, events, as well as monocular or stereo camera trajectories including hand-drawn, random walking and neural network based realistic trajectories. (E) All supported image sequences and ground truth.

Semantic3D[Link]

Large-Scale Point Cloud Classification Benchmark, which provides a large labelled 3D point cloud data set of natural scenes with over 4 billion points in total, and also covers a range of diverse urban scenes.

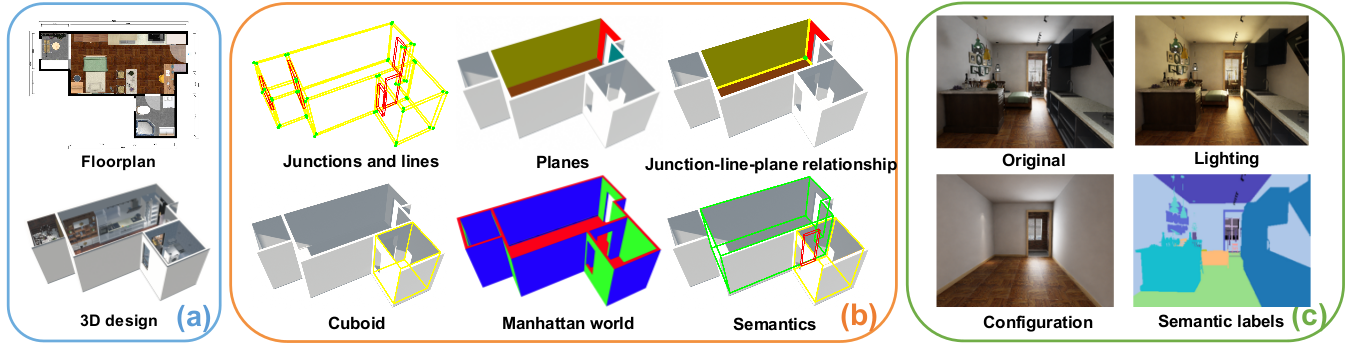

Structured3D: A Large Photo-realistic Dataset for Structured 3D Modeling [Link]

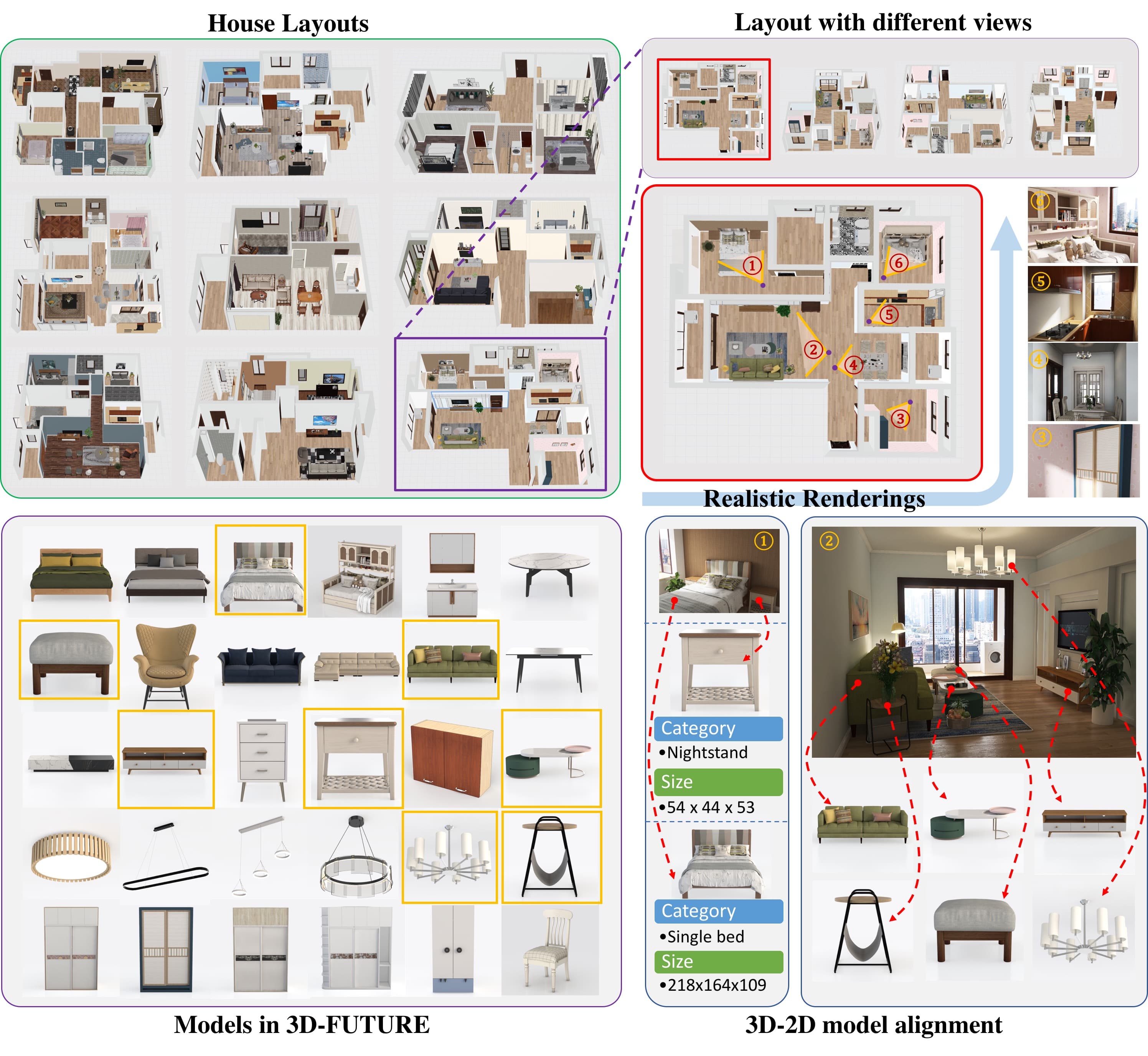

3D-FRONT: 3D Furnished Rooms with layOuts and semaNTics [Link]

Contains 10,000 houses (or apartments) and ~70,000 rooms with layout information.

ThreeDWorld (TDW): A High-Fidelity, Multi-Modal Platform for Interactive Physical Simulation [Link]

MINERVAS: Massive INterior EnviRonments VirtuAl Synthesis [Link]

3D Pose Estimation

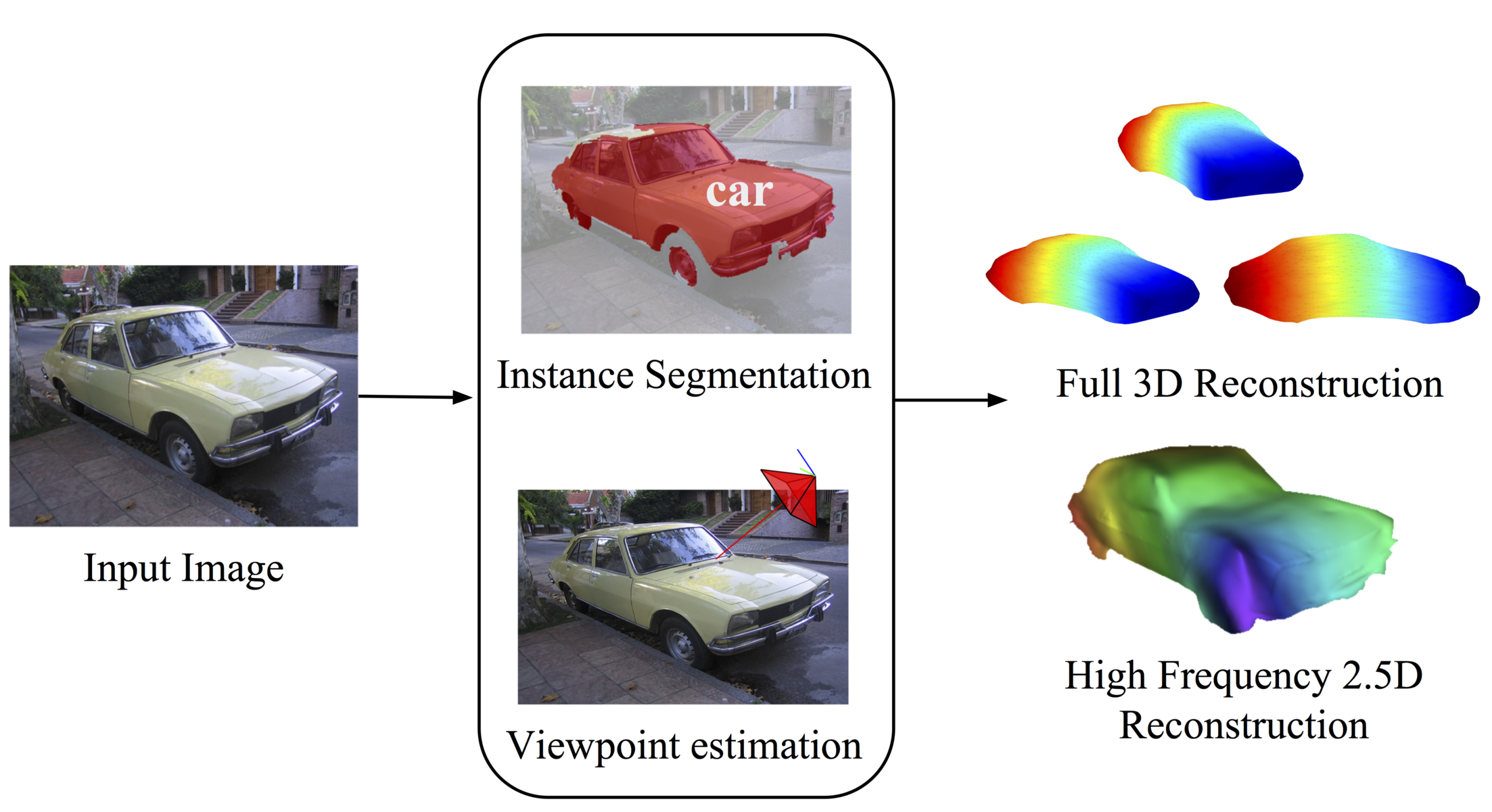

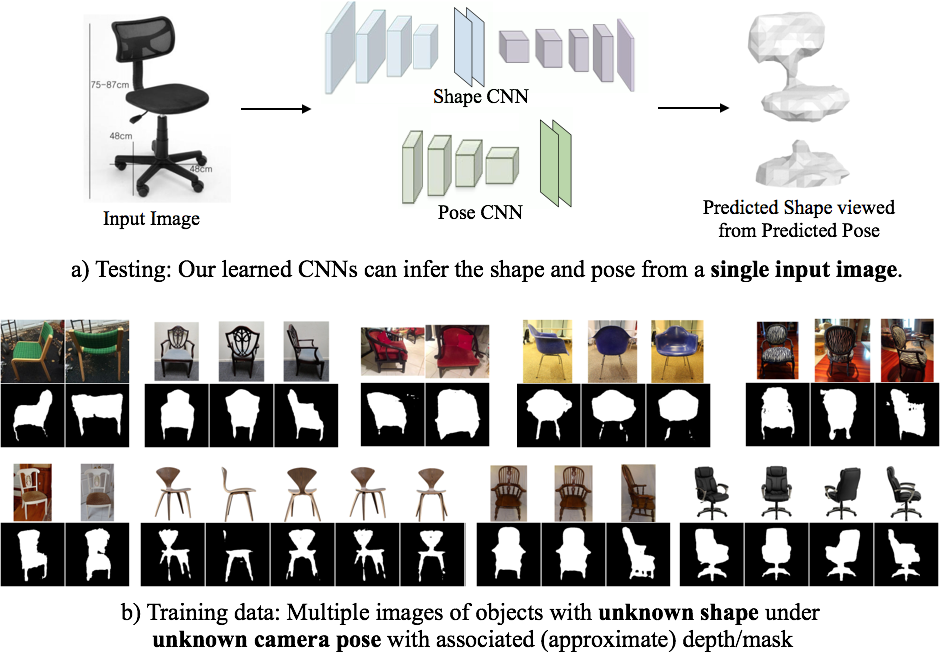

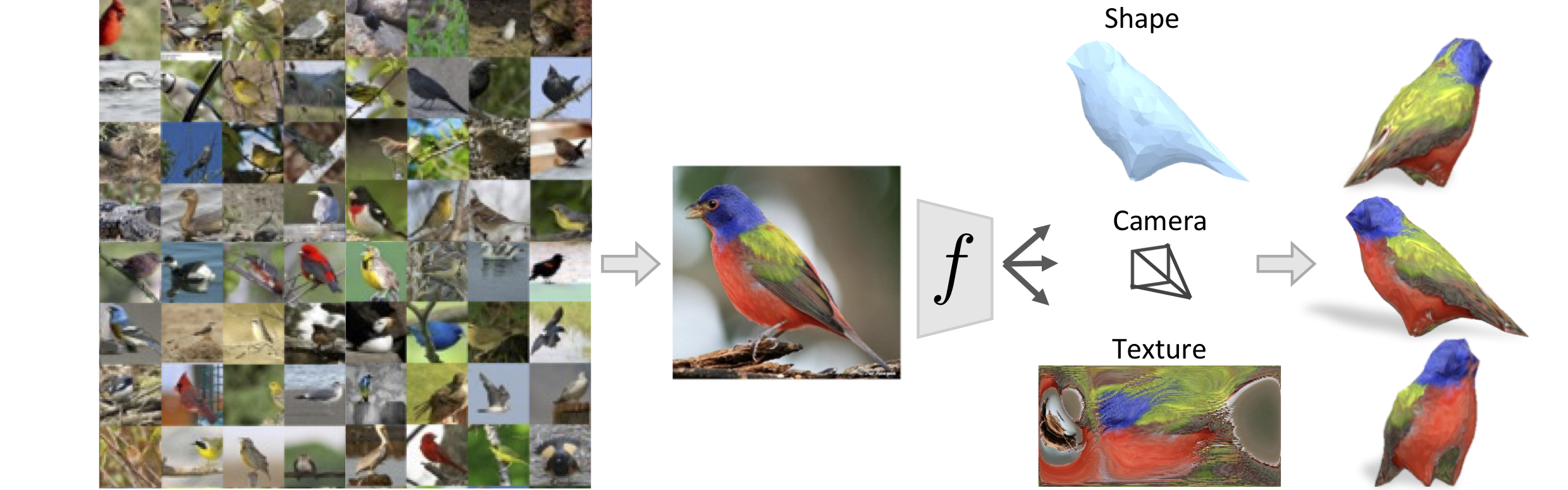

Category-Specific Object Reconstruction from a Single Image (2014) [Paper]

Viewpoints and Keypoints (2015) [Paper]

Render for CNN: Viewpoint Estimation in Images Using CNNs Trained with Rendered 3D Model Views (2015 ICCV) [Paper]

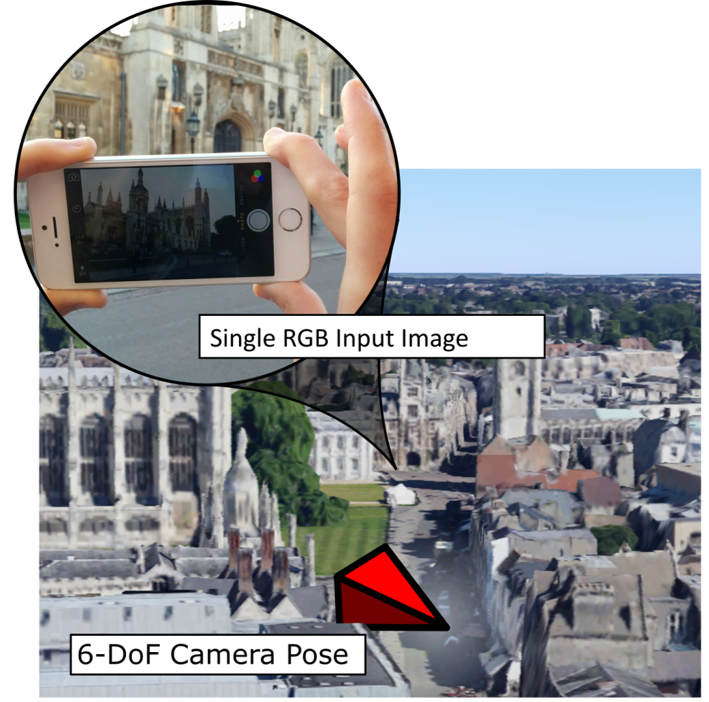

PoseNet: A Convolutional Network for Real-Time 6-DOF Camera Relocalization (2015) [Paper]

Modeling Uncertainty in Deep Learning for Camera Relocalization (2016) [Paper]

Robust camera pose estimation by viewpoint classification using deep learning (2016) [Paper]

Image-based localization using lstms for structured feature correlation (2017 ICCV) [Paper]

Image-Based Localization Using Hourglass Networks (2017 ICCV Workshops) [Paper]

Geometric loss functions for camera pose regression with deep learning (2017 CVPR) [Paper]

Generic 3D Representation via Pose Estimation and Matching (2017) [Paper]

3D Bounding Box Estimation Using Deep Learning and Geometry (2017) [Paper]

6-DoF Object Pose from Semantic Keypoints (2017) [Paper]

Relative Camera Pose Estimation Using Convolutional Neural Networks (2017) [Paper]

3DMatch: Learning Local Geometric Descriptors from RGB-D Reconstructions (2017) [Paper]

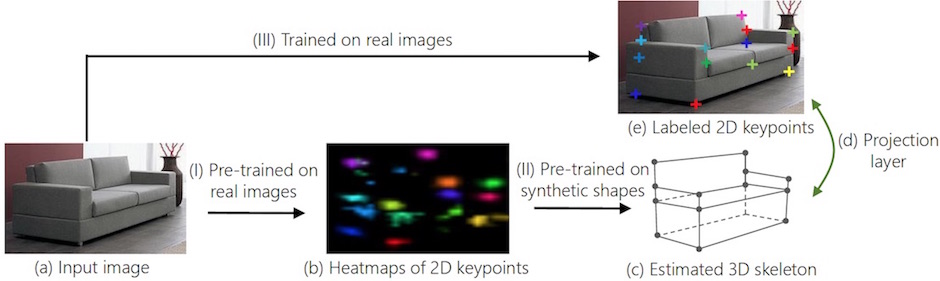

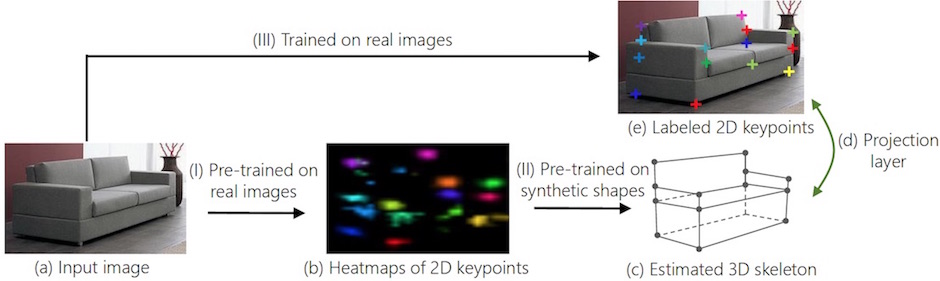

Single Image 3D Interpreter Network (2016) [Paper] [Code]

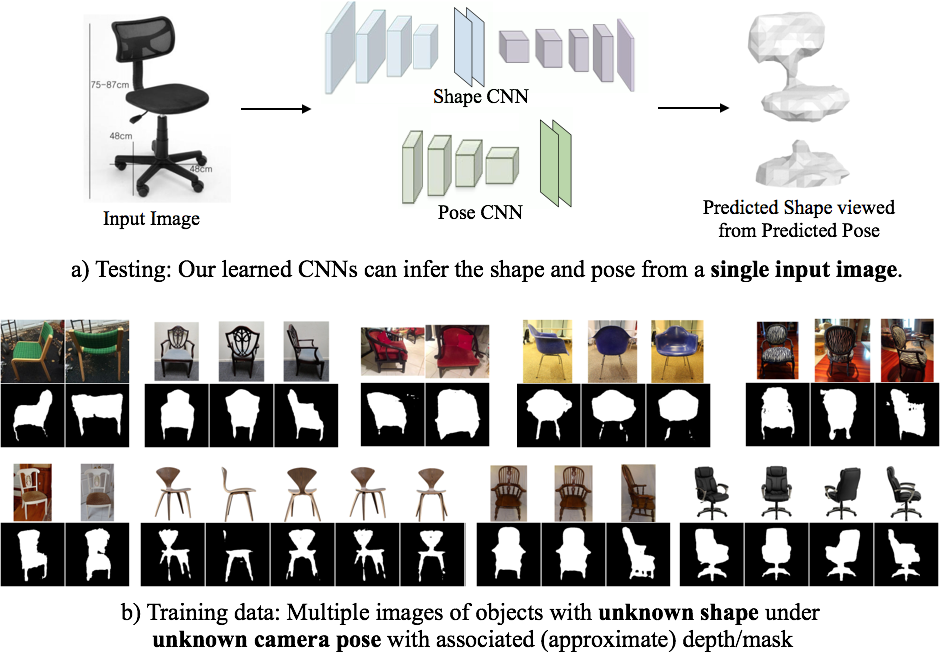

Multi-view Consistency as Supervisory Signal for Learning Shape and Pose Prediction (2018 CVPR) [Paper]

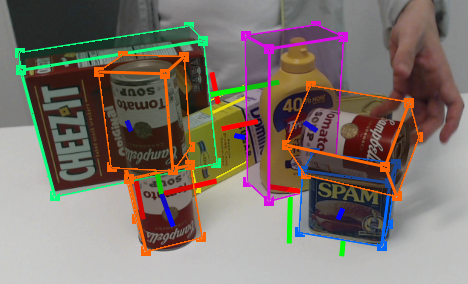

PoseCNN: A Convolutional Neural Network for 6D Object Pose Estimation in Cluttered Scenes (2018) [Paper]

Feature Mapping for Learning Fast and Accurate 3D Pose Inference from Synthetic Images (2018 CVPR) [Paper]

Pix3D: Dataset and Methods for Single-Image 3D Shape Modeling (2018 CVPR) [Paper]

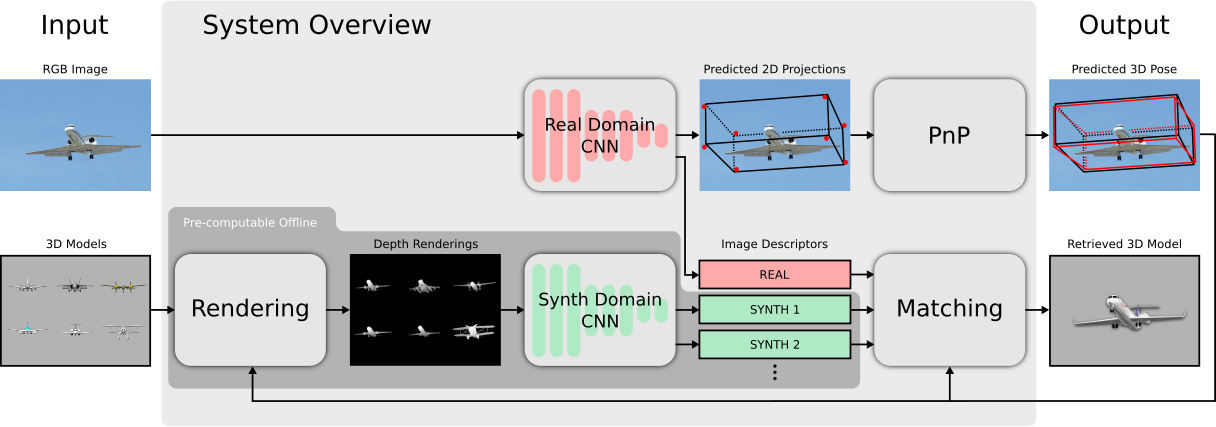

3D Pose Estimation and 3D Model Retrieval for Objects in the Wild (2018 CVPR) [Paper]

Deep Object Pose Estimation for Semantic Robotic Grasping of Household Objects (2018) [Paper]

MocapNET2: a real-time method that estimates the 3D human pose directly in the popular Bio Vision Hierarchy (BVH) format (2021) [Paper], [Code]


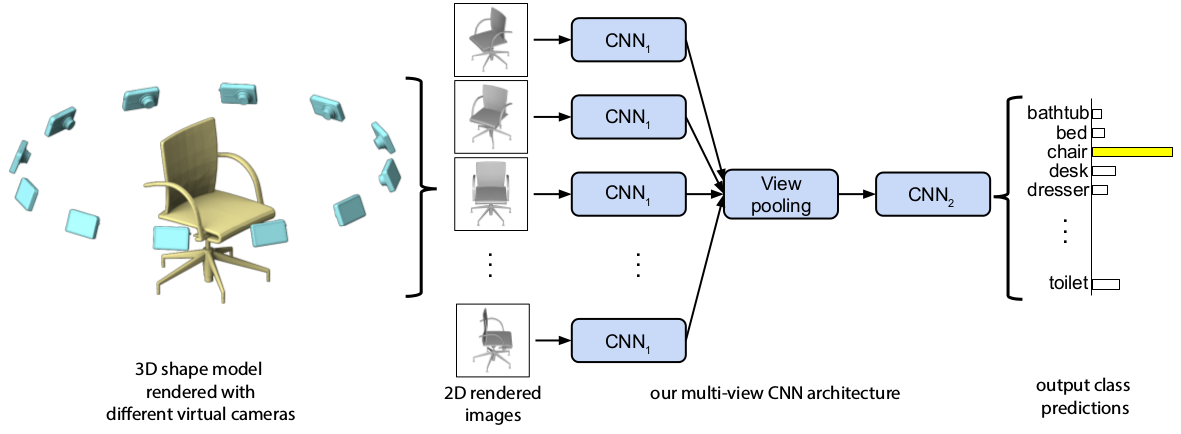

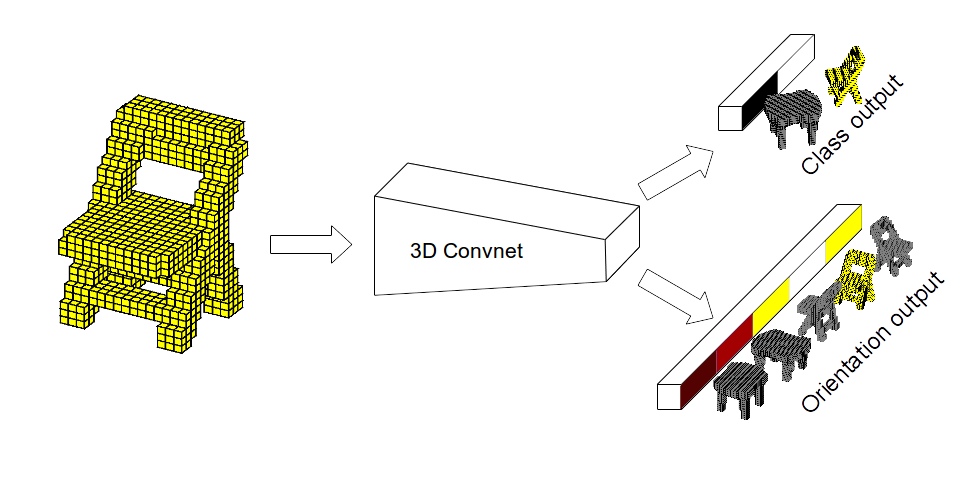

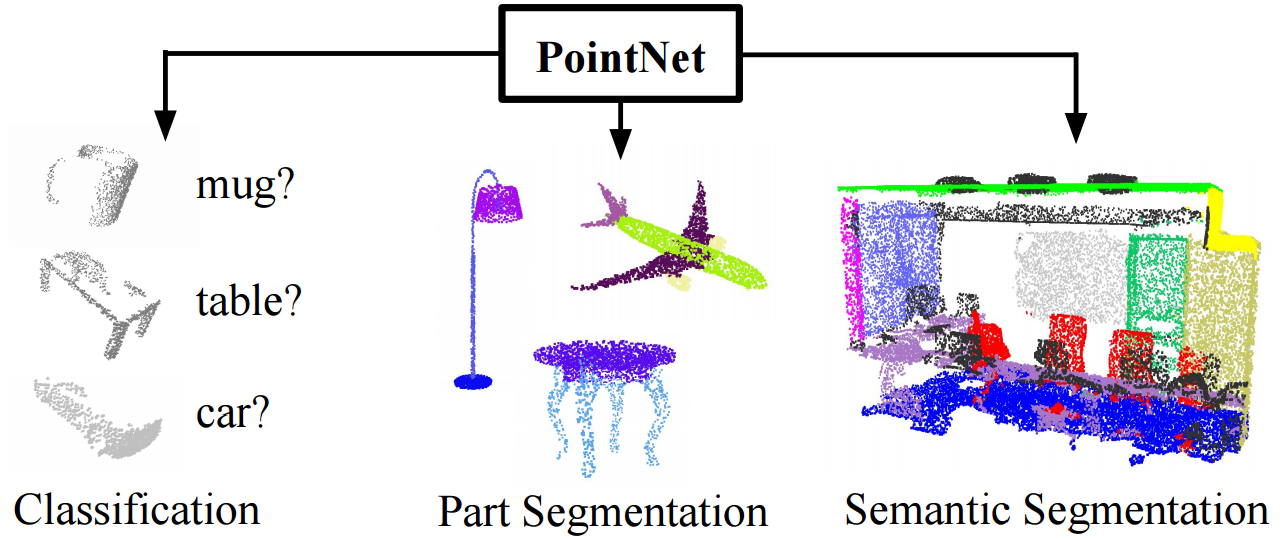

Single Object Classification


Multiple Objects Detection

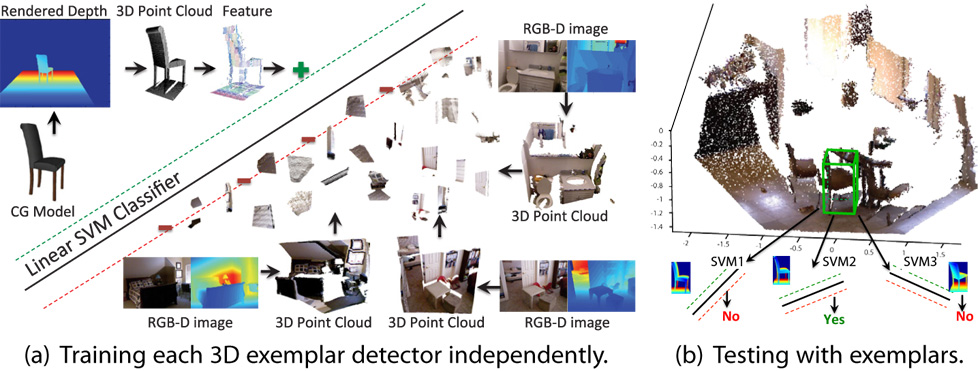

Sliding Shapes for 3D Object Detection in Depth Images (2014) [Paper]

Object Detection in 3D Scenes Using CNNs in Multi-view Images (2016) [Paper]

Deep Sliding Shapes for Amodal 3D Object Detection in RGB-D Images (2016) [Paper] [Code]

Three-Dimensional Object Detection and Layout Prediction using Clouds of Oriented Gradients (2016) [CVPR '16 Paper] [CVPR '18 Paper] [T-PAMI '19 Paper]

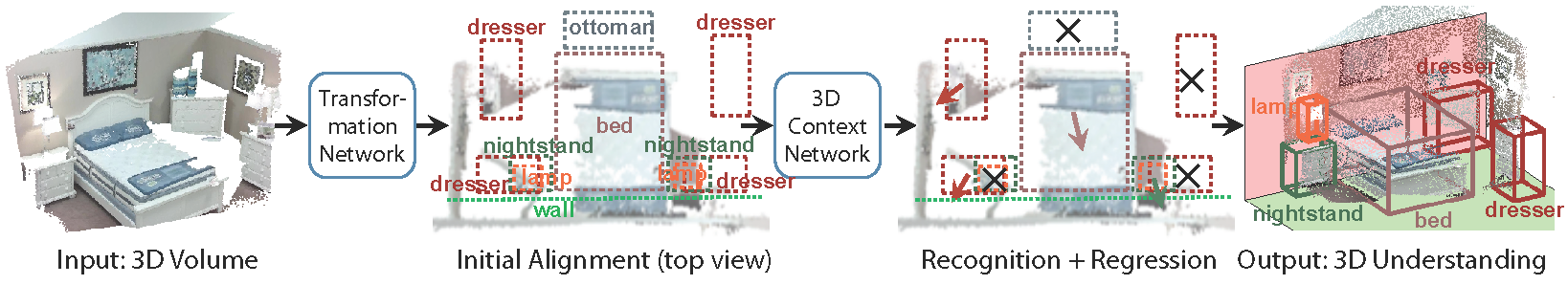

DeepContext: Context-Encoding Neural Pathways for 3D Holistic Scene Understanding (2016) [Paper]

SUN RGB-D: A RGB-D Scene Understanding Benchmark Suite (2017) [Paper]

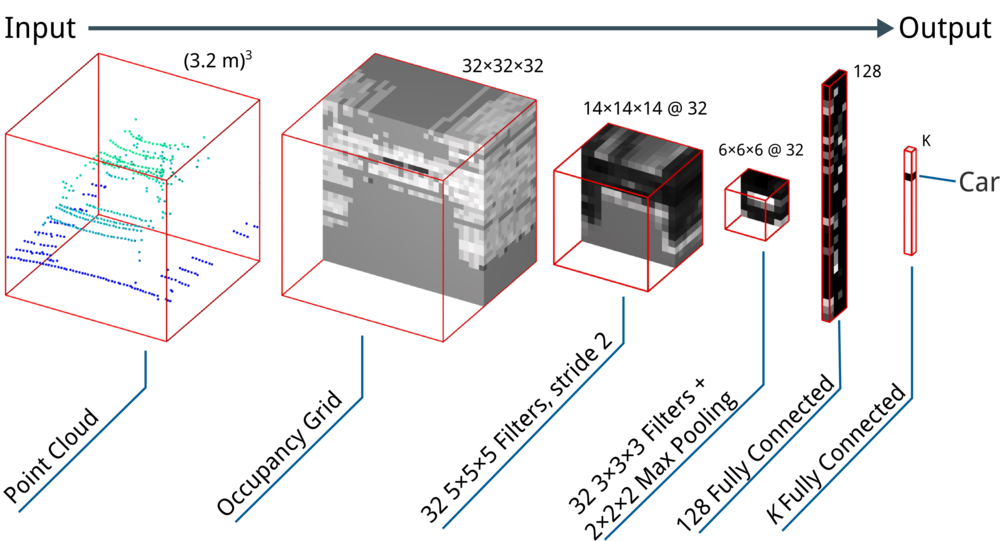

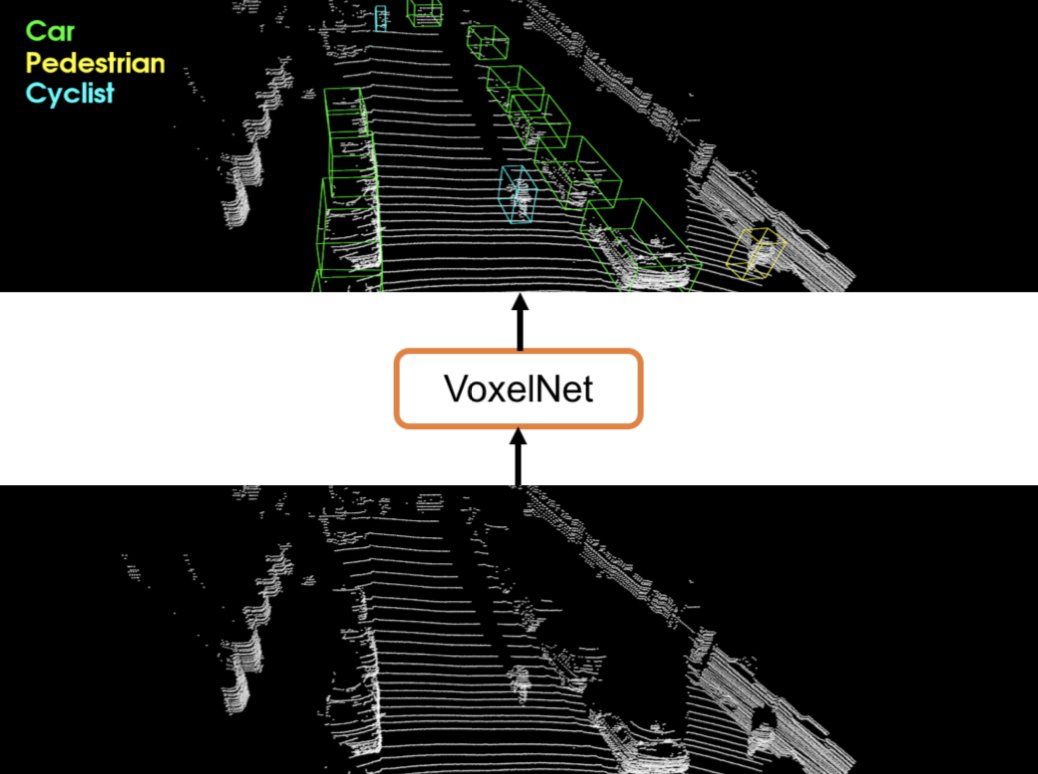

VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection (2017) [Paper]

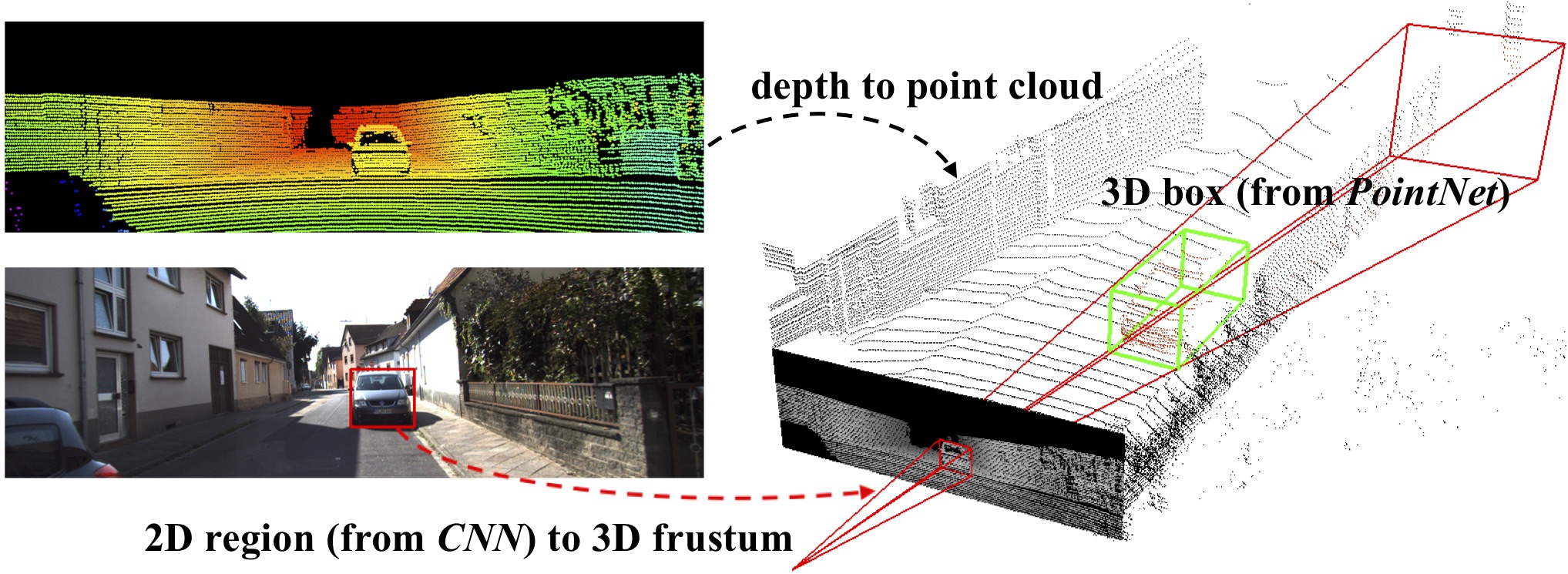

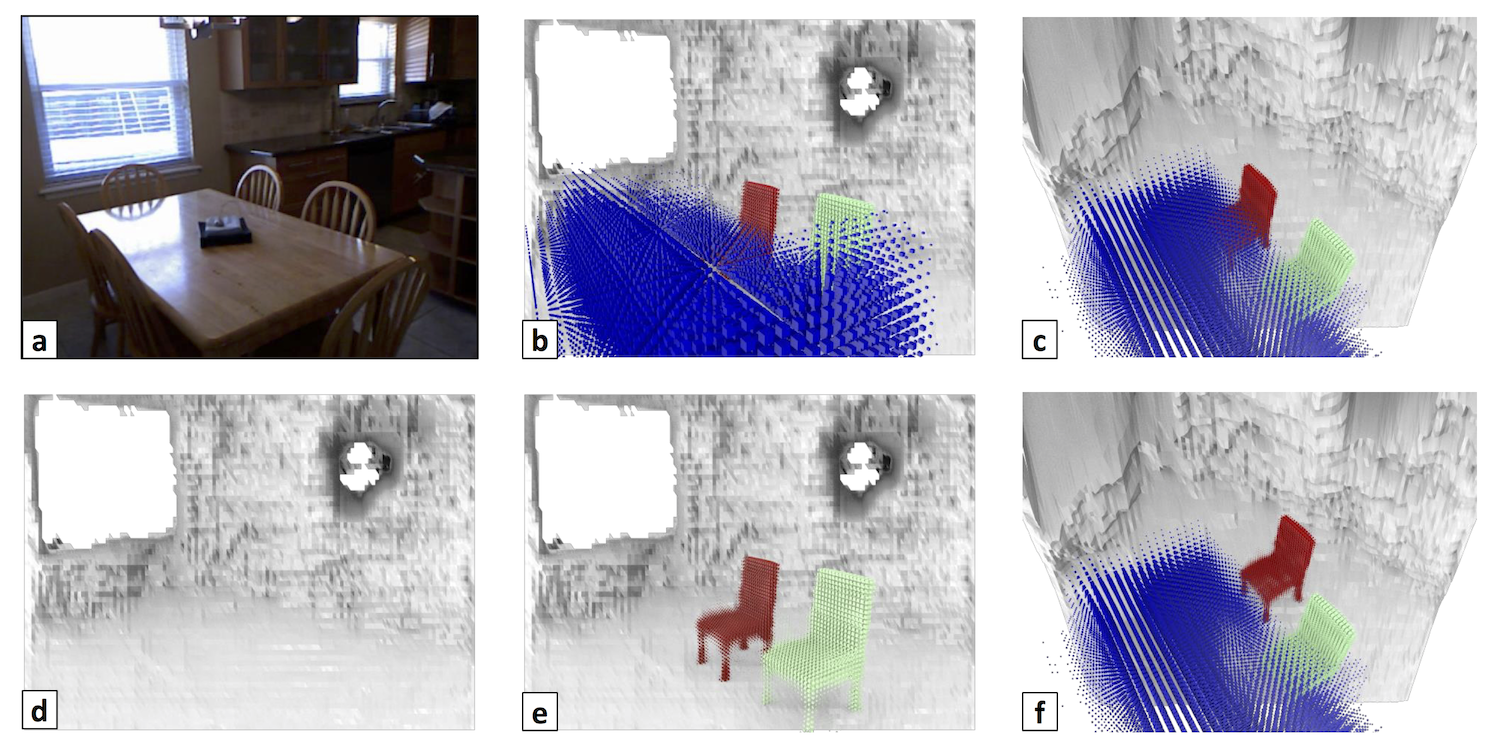

Frustum PointNets for 3D Object Detection from RGB-D Data (CVPR2018) [Paper]

A^2-Net: Molecular Structure Estimation from Cryo-EM Density Volumes (AAAI2019) [Paper]

Stereo R-CNN based 3D Object Detection for Autonomous Driving (CVPR2019) [Paper]

Deep Hough Voting for 3D Object Detection in Point Clouds (ICCV2019) [Paper] [code]

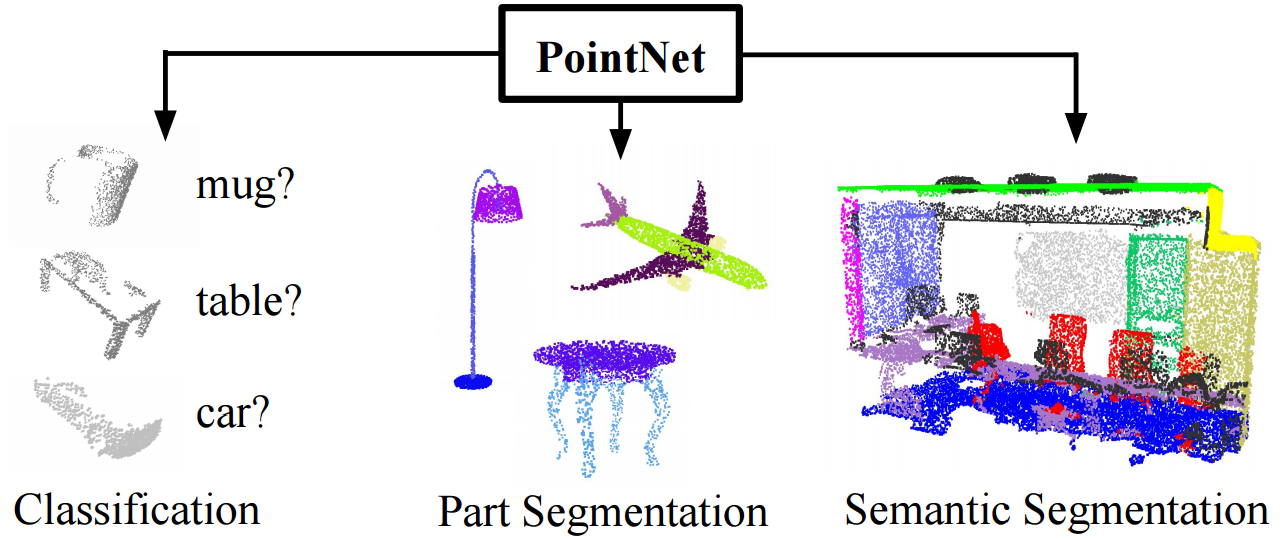

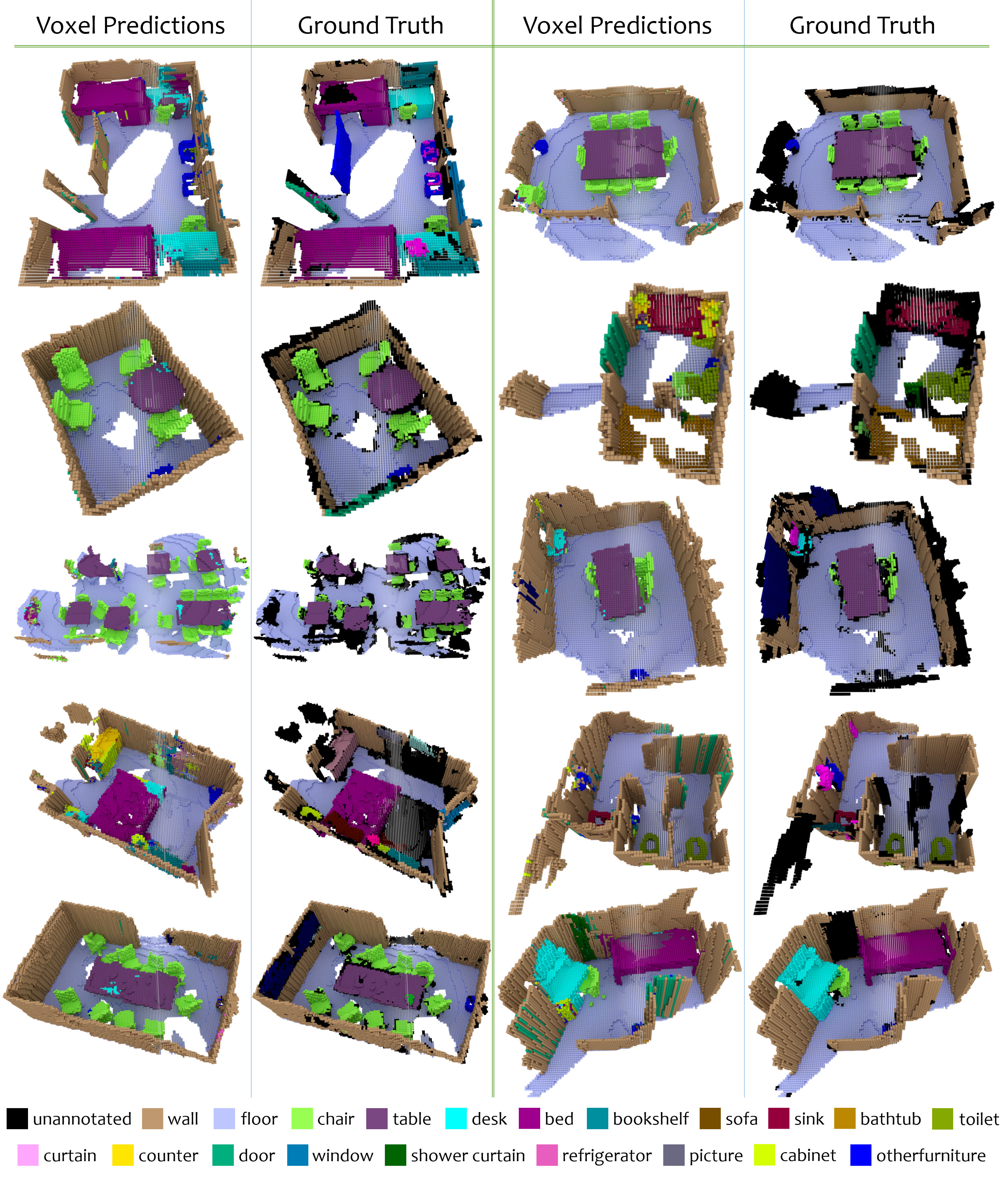

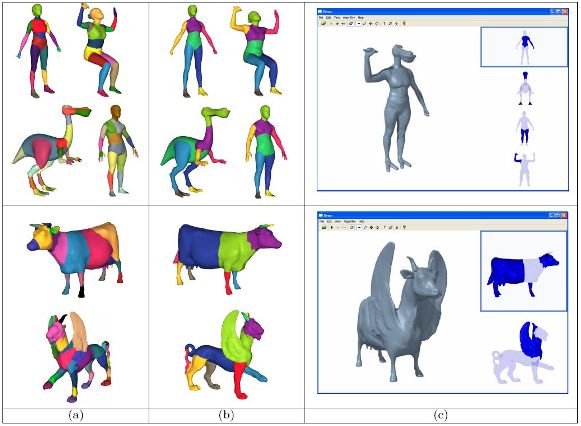

Scene/Object Semantic Segmentation

Learning 3D Mesh Segmentation and Labeling (2010) [Paper]

Unsupervised Co-Segmentation of a Set of Shapes via Descriptor-Infinite Spectral Clustering (2011) [Paper]

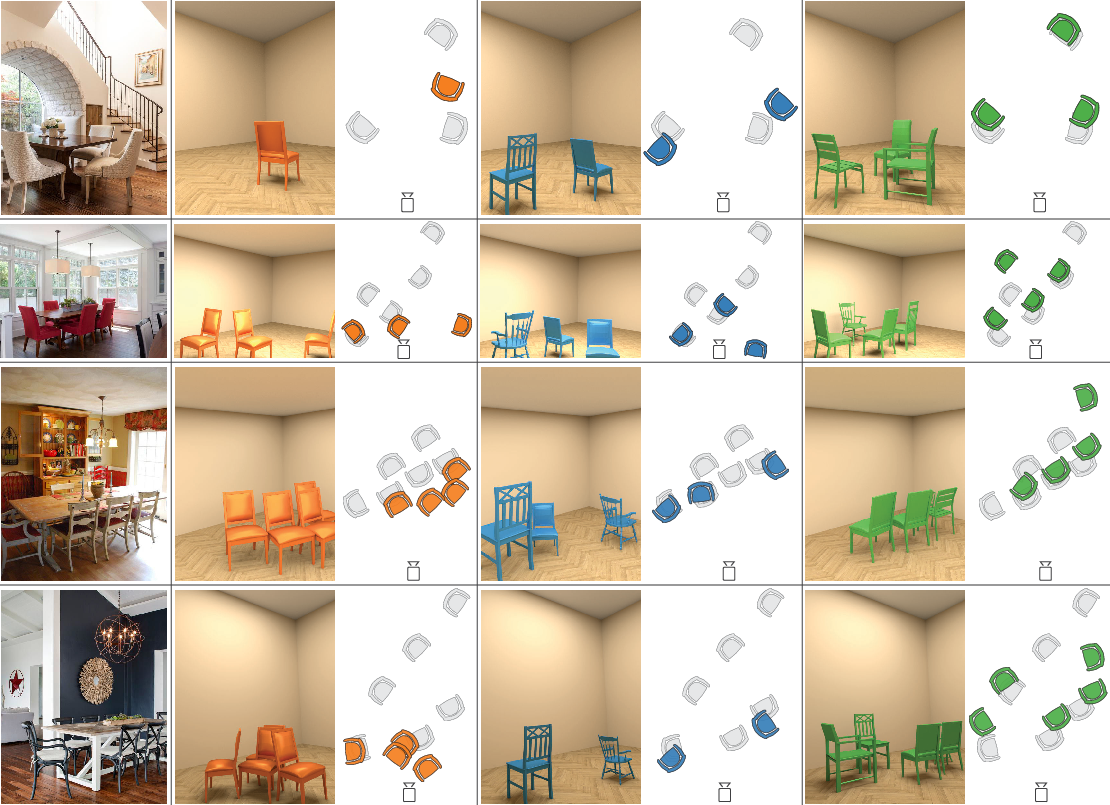

Single-View Reconstruction via Joint Analysis of Prototype and Shape Collections (2015) [Paper] [Code]

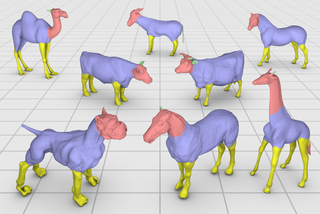

3D Shape Segmentation with Projective Convolutional Networks (2017) [Paper] [Code]

Learning Hierarchical Shape Segmentation and Labeling from Online Repositories (2017) [Paper]

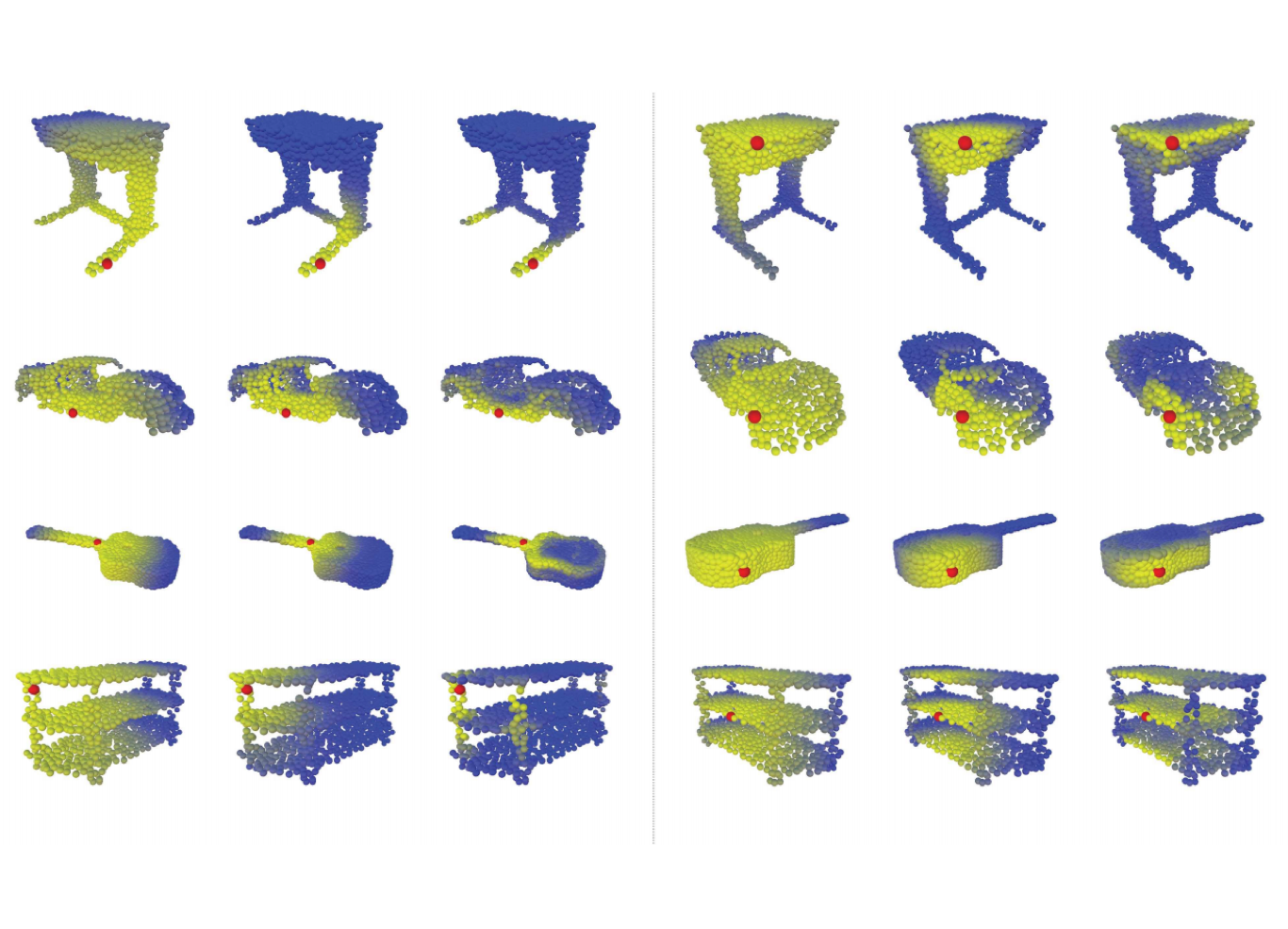

We propose pointwise convolution that performs on-the-fly voxelization for learning local features of a point cloud.


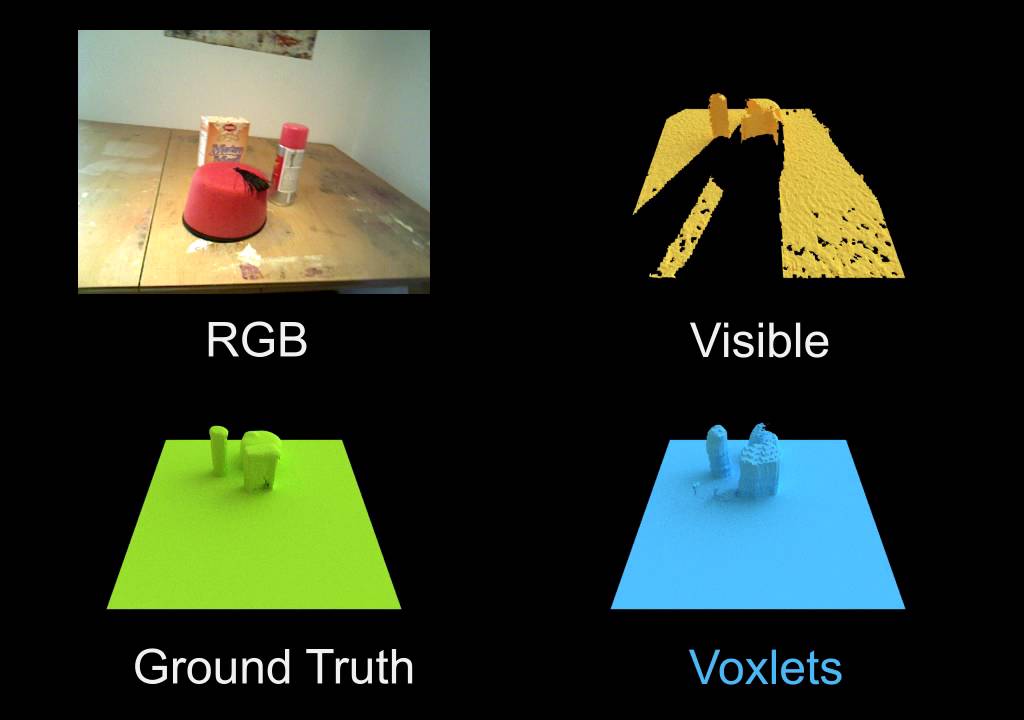

We propose an efficient yet robust technique for on-the-fly dense reconstruction and semantic segmentation of 3D indoor scenes. Our method is built atop an efficient super-voxel clustering method and a conditional random field with higher-order constraints from structural and object cues, enabling progressive dense semantic segmentation without any precomputation.

We jointly address the problems of semantic and instance segmentation of 3D point clouds with a multi-task pointwise network that simultaneously performs two tasks: predicting the semantic classes of 3D points and embedding the points into high-dimensional vectors so that points of the same object instance are represented by similar embeddings. We then propose a multi-value conditional random field model to incorporate the semantic and instance labels and formulate the problem of semantic and instance segmentation as jointly optimising labels in the field model.

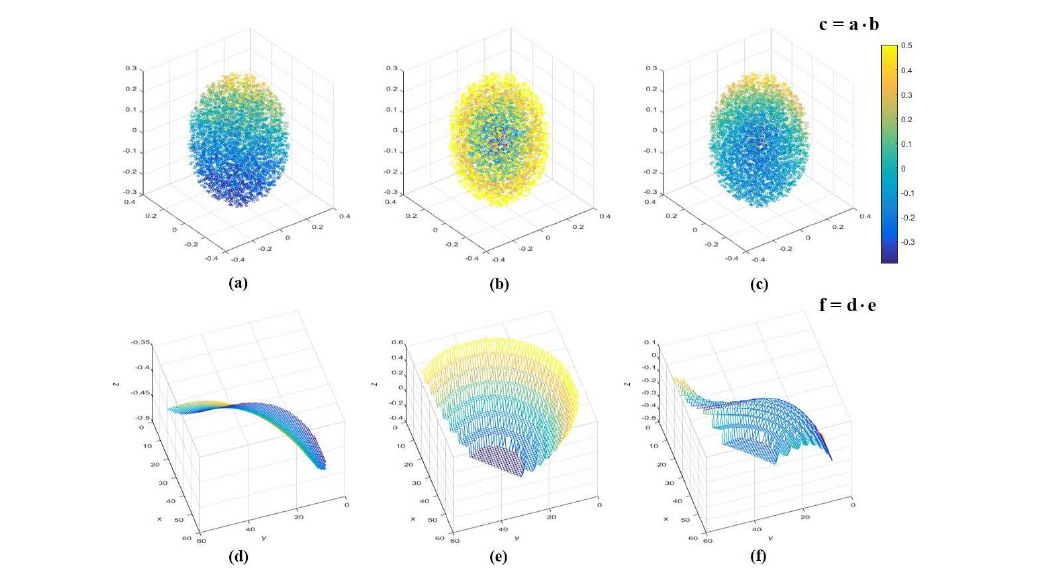

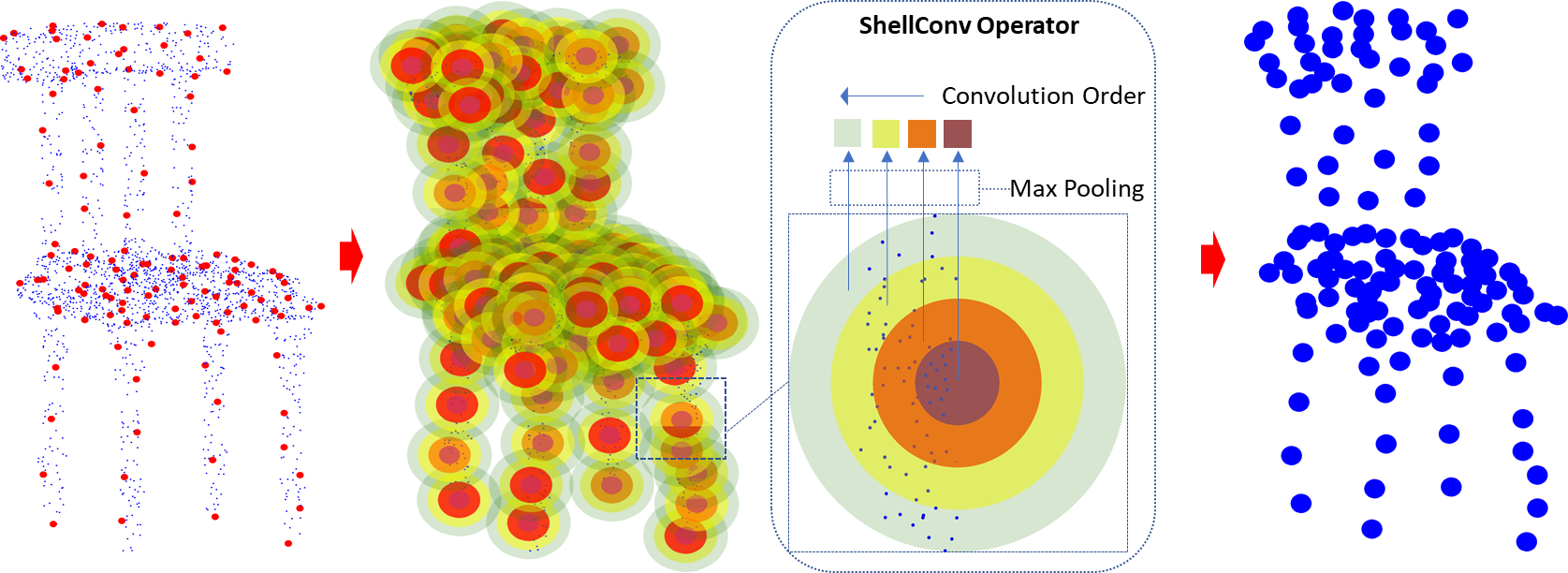

We propose an efficient end-to-end permutation invariant convolution for point cloud deep learning. We use statistics from concentric spherical shells to define representative features and resolve the point order ambiguity, allowing traditional convolution to perform efficiently on such features.
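To make the shell idea concrete, here is a deliberately simplified sketch of order-independent shell statistics. It is an illustration only, not the operator from the paper: `shell_features`, the radii, and the choice of statistics (a count and a maximum distance per shell) are all assumptions made for this example.

```python
import math
import random


def shell_features(center, neighbors, radii):
    """Summarize neighbors by concentric shells around `center`.

    `radii` must be ascending. Shell i holds neighbors whose distance d
    satisfies radii[i-1] <= d < radii[i], with an implicit inner radius of 0.
    Returns one (count, max_distance) pair per shell.
    """
    counts = [0] * len(radii)
    max_dist = [0.0] * len(radii)
    for p in neighbors:
        d = math.dist(center, p)
        for i, r in enumerate(radii):
            if d < r:
                counts[i] += 1
                max_dist[i] = max(max_dist[i], d)
                break  # a neighbor lands in at most one shell
    return list(zip(counts, max_dist))


# Hypothetical toy data; the last neighbor lies outside every shell.
CENTER = (0.0, 0.0, 0.0)
NEIGHBORS = [(0.1, 0.0, 0.0), (0.0, 0.4, 0.0), (0.0, 0.0, 0.45),
             (0.9, 0.0, 0.0), (2.0, 0.0, 0.0)]
RADII = [0.25, 0.5, 1.0]

features = shell_features(CENTER, NEIGHBORS, RADII)

# Feeding the same neighbors in a different order gives the same features.
shuffled = NEIGHBORS[:]
random.Random(0).shuffle(shuffled)
features_shuffled = shell_features(CENTER, shuffled, RADII)
```

Counts and maxima do not depend on the order in which points arrive, which is why the shuffled input produces identical features; the shells themselves have a natural inner-to-outer order that a regular convolution can then run over.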

We introduce a novel convolution operator for point clouds that achieves rotation invariance. Our core idea is to use low-level rotation invariant geometric features such as distances and angles to design a convolution operator for point cloud learning.
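The claim that distances and angles survive rotation can be checked numerically. The sketch below is an assumption-laden illustration rather than the paper's operator: `invariant_features`, `rotate_z`, and the particular three features (distance to the centroid, distance to a neighbor, and the angle between those two directions) are chosen here only to demonstrate the property.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _norm(v):
    return math.sqrt(sum(x * x for x in v))


def _angle(u, v):
    """Angle in radians between vectors u and v (0.0 if either is zero)."""
    nu, nv = _norm(u), _norm(v)
    if nu == 0.0 or nv == 0.0:
        return 0.0
    cos = sum(x * y for x, y in zip(u, v)) / (nu * nv)
    return math.acos(max(-1.0, min(1.0, cos)))


def invariant_features(points, ref_idx, nbr_idx):
    """Distances and an angle measured at points[ref_idx]."""
    n = len(points)
    centroid = tuple(sum(p[i] for p in points) / n for i in range(3))
    to_centroid = _sub(centroid, points[ref_idx])
    to_neighbor = _sub(points[nbr_idx], points[ref_idx])
    return (_norm(to_centroid), _norm(to_neighbor),
            _angle(to_centroid, to_neighbor))


def rotate_z(points, theta):
    """Rotate every point by `theta` radians around the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]


# Hypothetical toy cloud.
POINTS = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 3.0), (1.0, 1.0, 1.0)]

before = invariant_features(POINTS, 0, 1)
after = invariant_features(rotate_z(POINTS, 0.7), 0, 1)
```

Up to floating-point rounding, `before` and `after` agree, whereas the raw coordinates of the rotated cloud do not.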


3D Model Synthesis/Reconstruction

Parametric Morphable Model-based methods

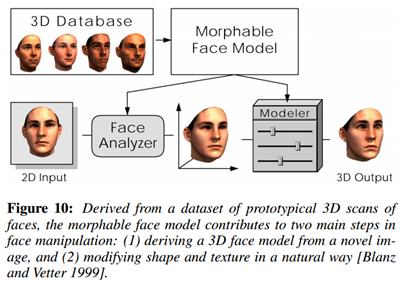

A Morphable Model For The Synthesis Of 3D Faces (1999) [Paper][Code]

FLAME: Faces Learned with an Articulated Model and Expressions (2017) [Paper][Code (Chumpy)][Code (TF)] [Code (PyTorch)]

FLAME is a lightweight and expressive generic head model learned from over 33,000 accurately aligned 3D scans. The model combines a linear identity shape space (trained from 3800 scans of human heads) with an articulated neck, jaw, and eyeballs, pose-dependent corrective blendshapes, and additional global expression blendshapes. The code demonstrates how to 1) reconstruct textured 3D faces from images, 2) fit the model to 3D landmarks or registered 3D meshes, or 3) generate 3D face templates for speech-driven facial animation.

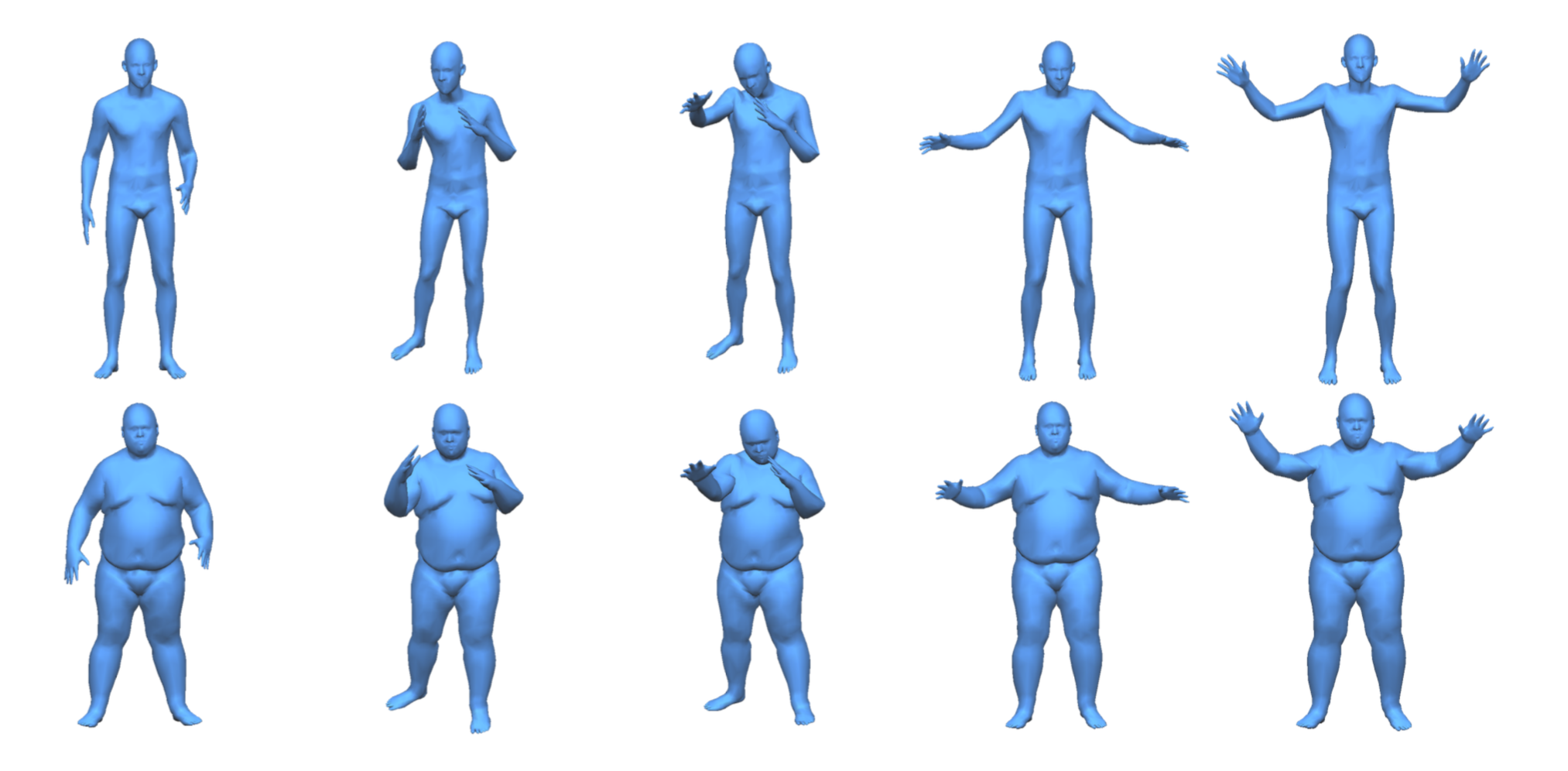

The Space of Human Body Shapes: Reconstruction and Parameterization from Range Scans (2003) [Paper]

SMPL-X: Expressive Body Capture: 3D Hands, Face, and Body from a Single Image (2019) [Paper][Video][Code]

PIFuHD: Multi-Level Pixel-Aligned Implicit Function for High-Resolution 3D Human Digitization (CVPR 2020) [Paper][Video][Code]

ExPose: Monocular Expressive Body Regression through Body-Driven Attention (2020) [Paper][Video][Code]

Category-Specific Object Reconstruction from a Single Image (2014) [Paper]

Part-based Template Learning methods

Modeling by Example (2004) [Paper]

Model Composition from Interchangeable Components (2007) [Paper]

Data-Driven Suggestions for Creativity Support in 3D Modeling (2010) [Paper]

Photo-Inspired Model-Driven 3D Object Modeling (2011) [Paper]

Probabilistic Reasoning for Assembly-Based 3D Modeling (2011) [Paper]

A Probabilistic Model for Component-Based Shape Synthesis (2012) [Paper]

Structure Recovery by Office Assembly (2012) [Paper]

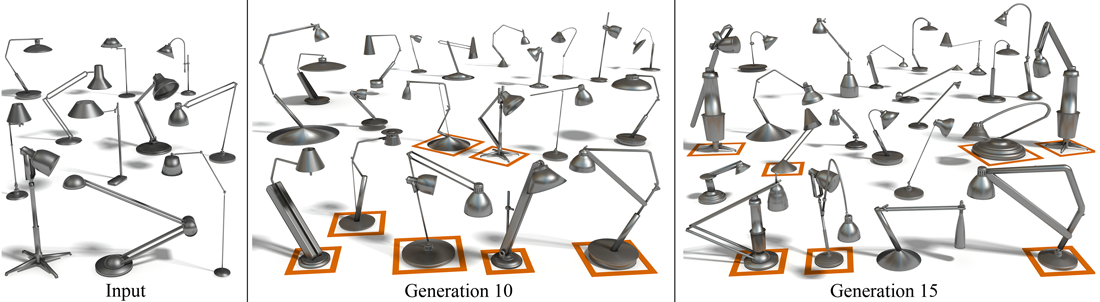

Fit and Diverse: Set Evolution for Inspiring 3D Shape Galleries (2012) [Newspaper]

AttribIt: Content Creation with Semantic Attributes (2013) [Paper]

Learning Function-based Templates from Large Collections of 3D Shapes (2013) [Paper]

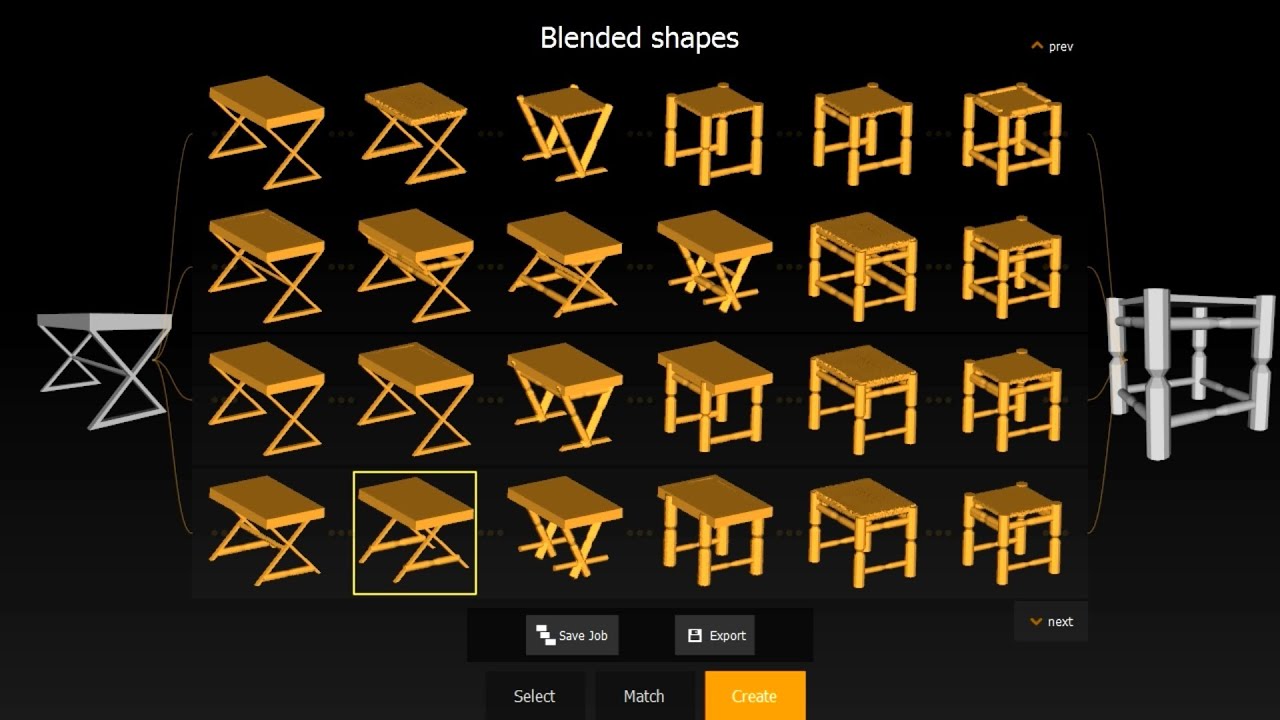

Topology-Varying 3D Shape Creation via Structural Blending (2014) [Paper]

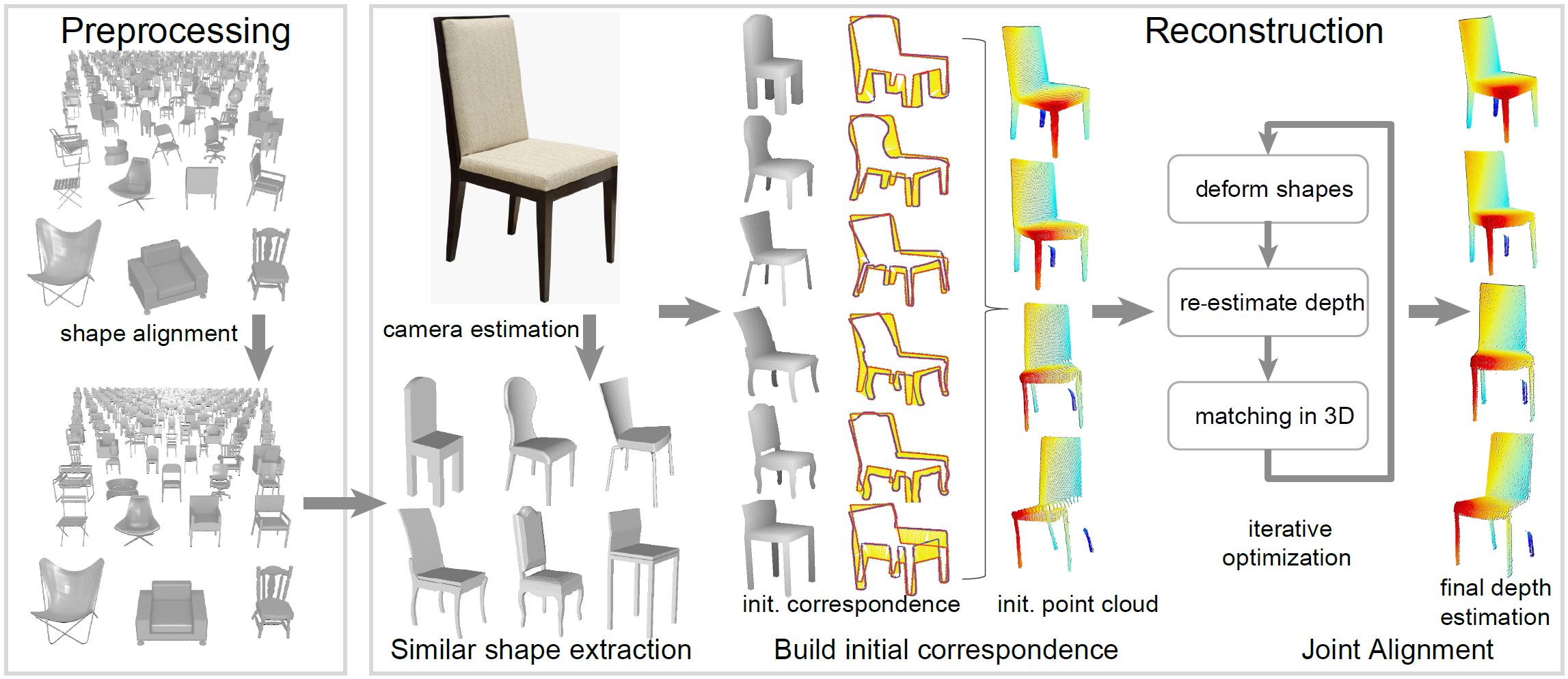

Estimating Image Depth using Shape Collections (2014) [Paper]

Single-View Reconstruction via Joint Analysis of Image and Shape Collections (2015) [Paper]

Interchangeable Components for Hands-On Assembly Based Modeling (2016) [Paper]

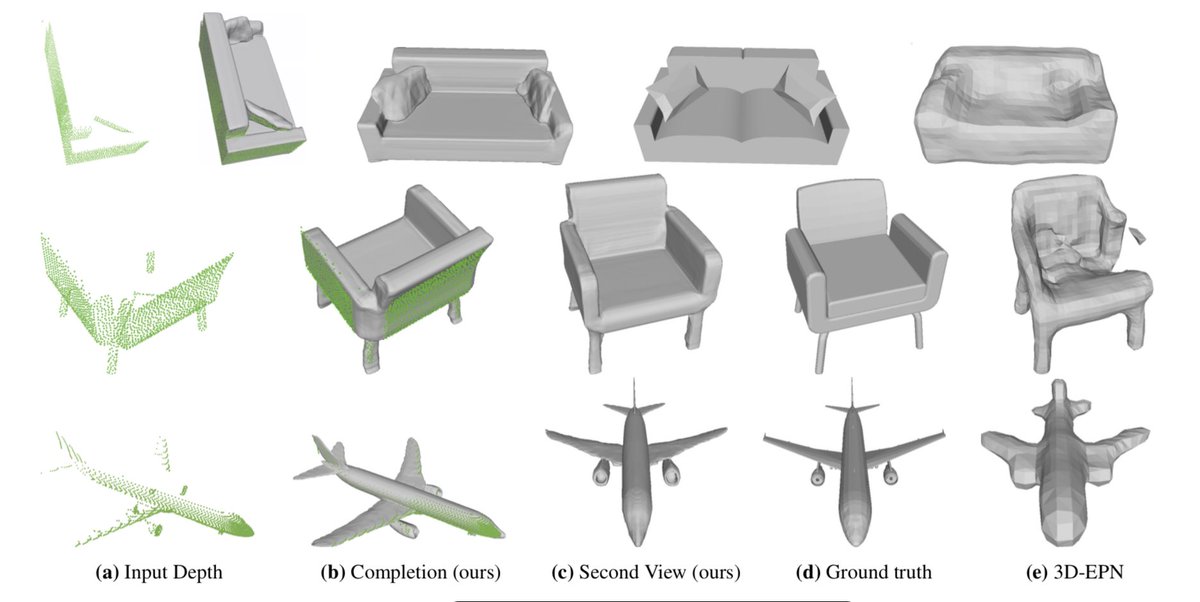

Shape Completion from a Single RGBD Image (2016) [Paper]

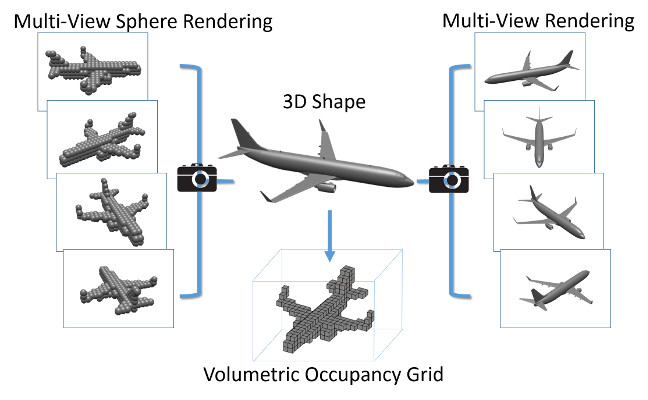

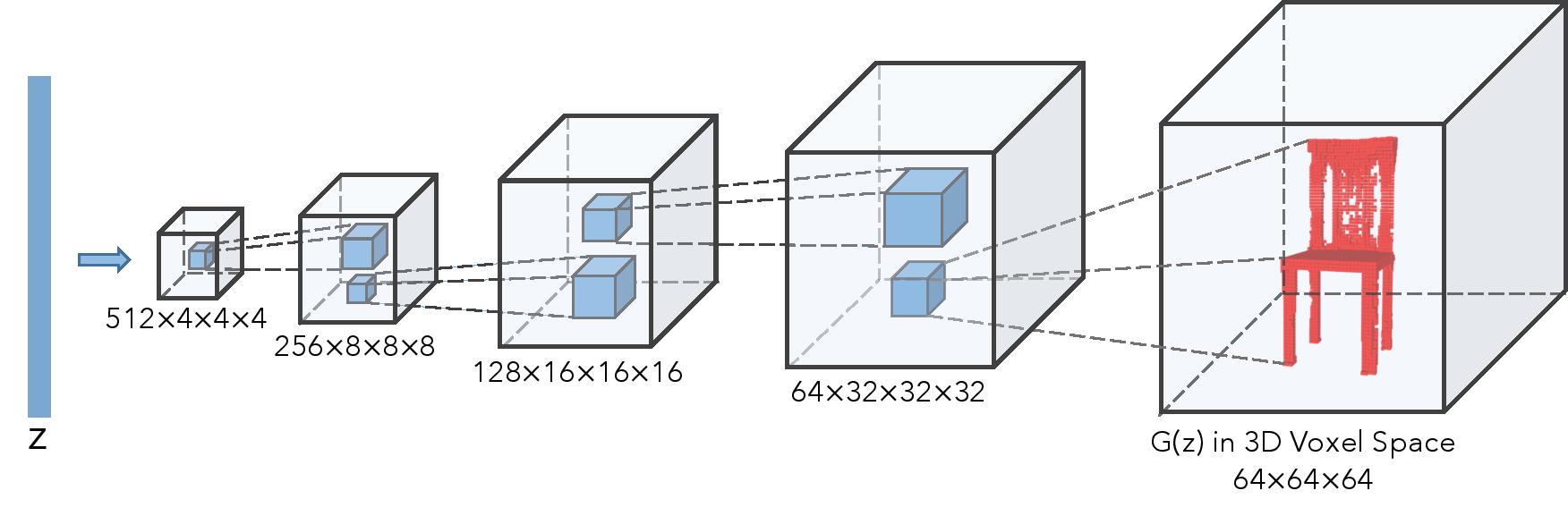

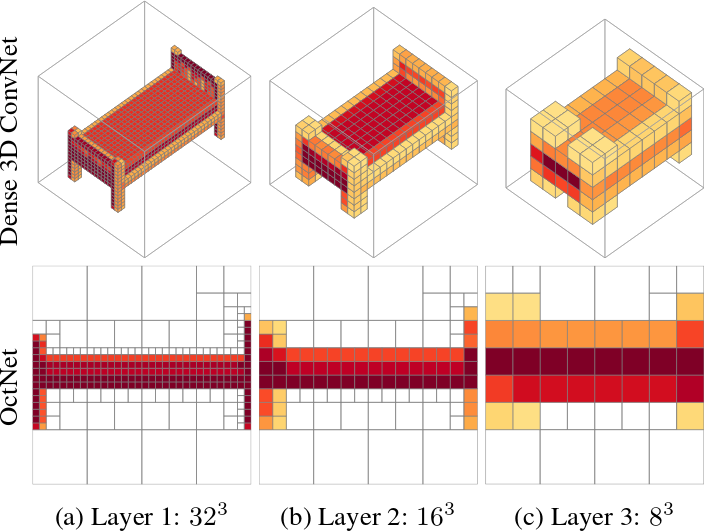

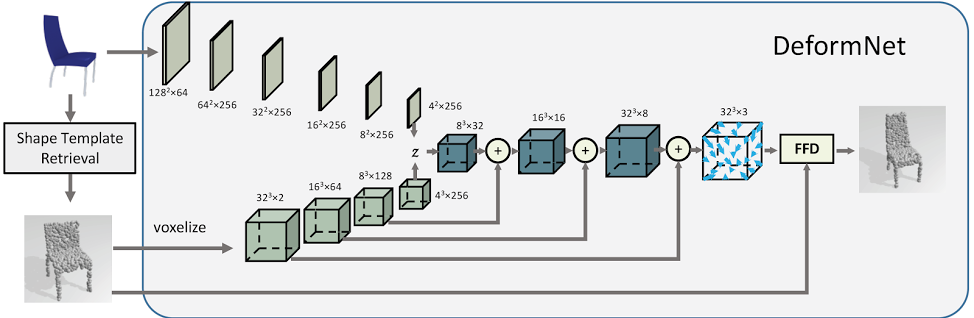

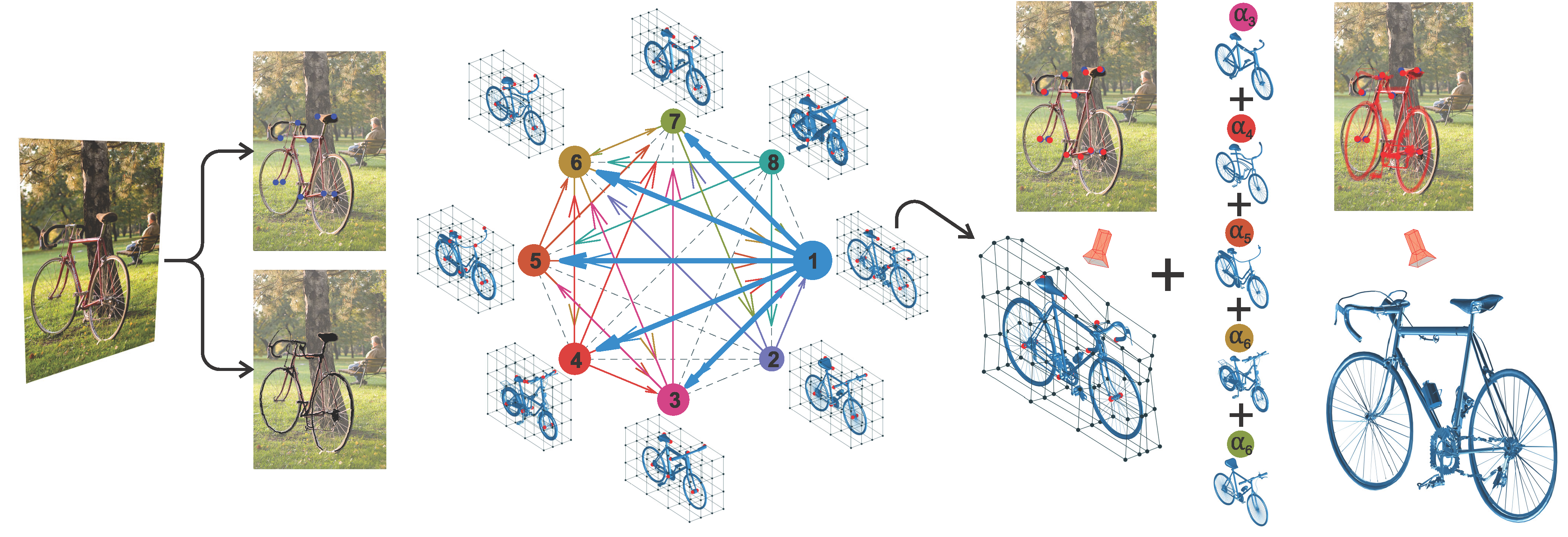

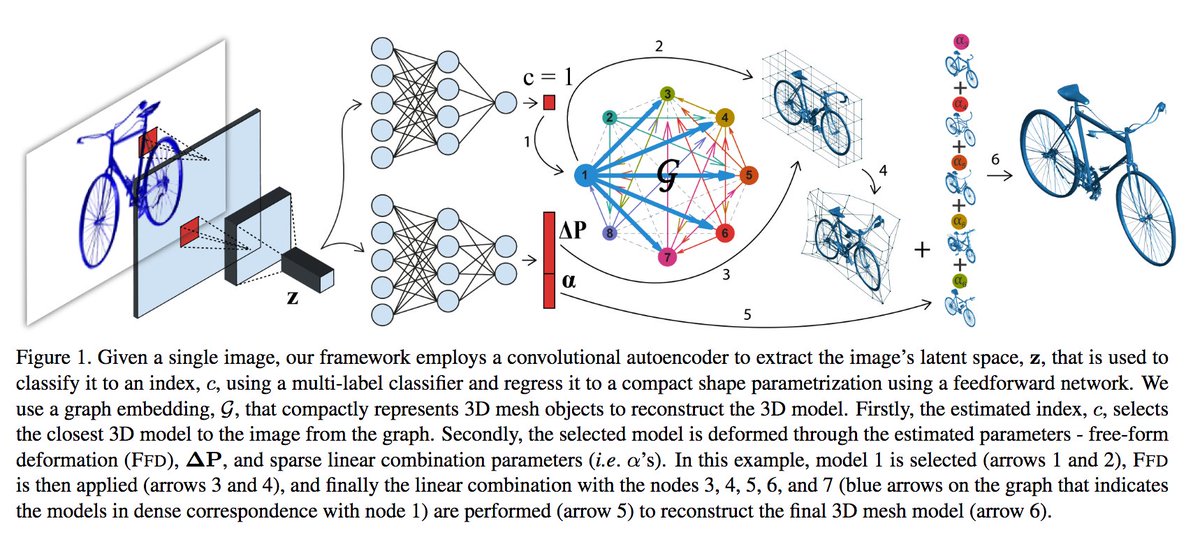

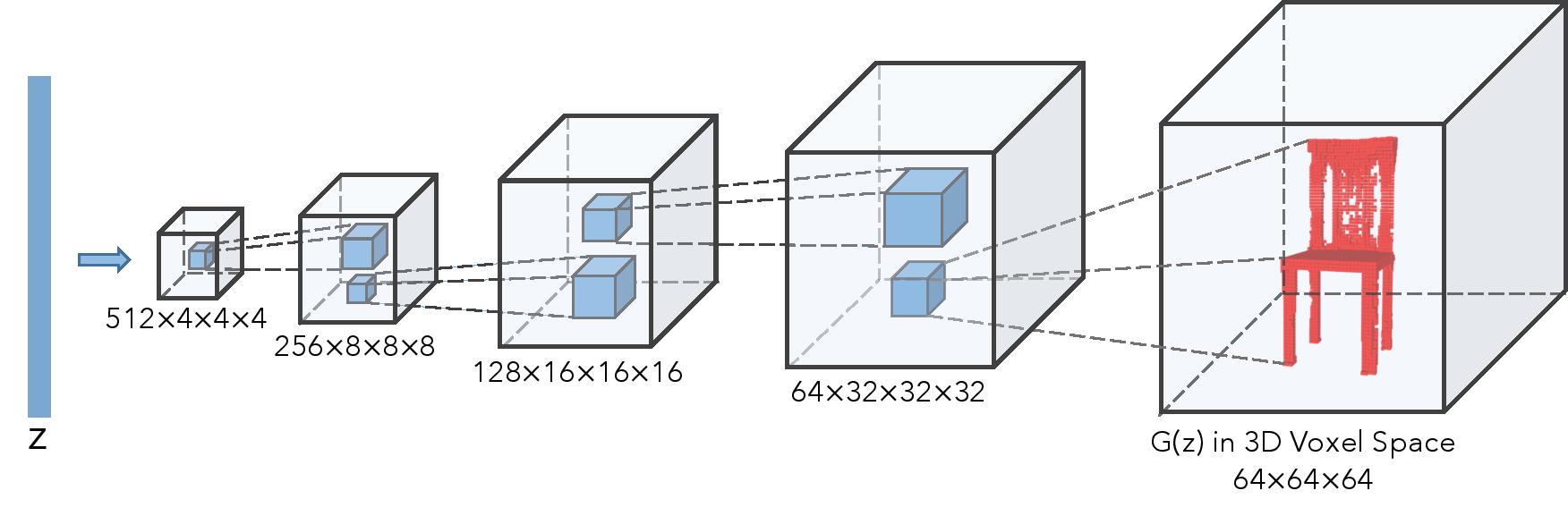

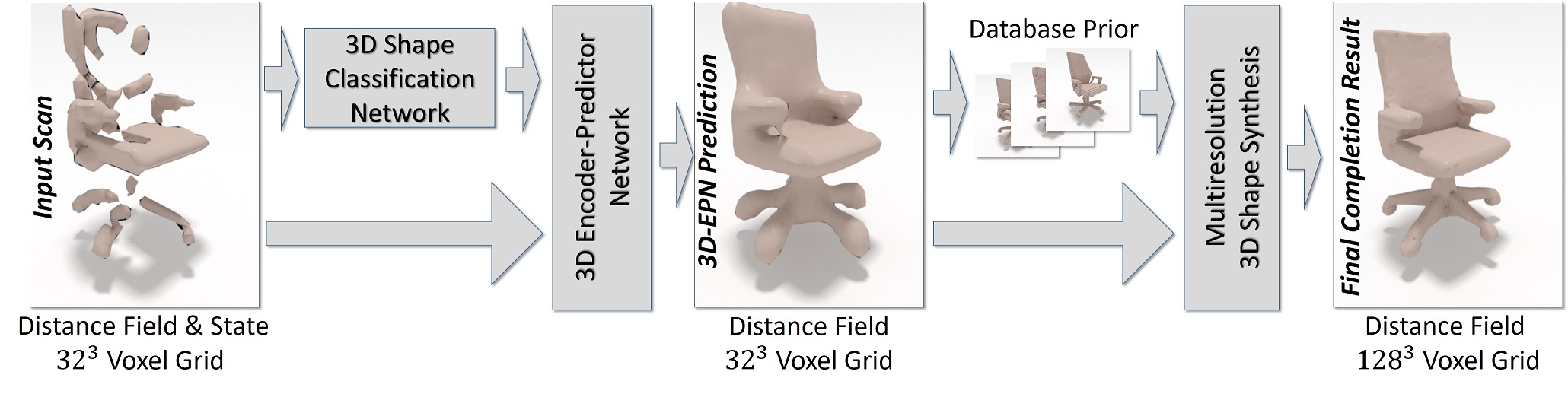

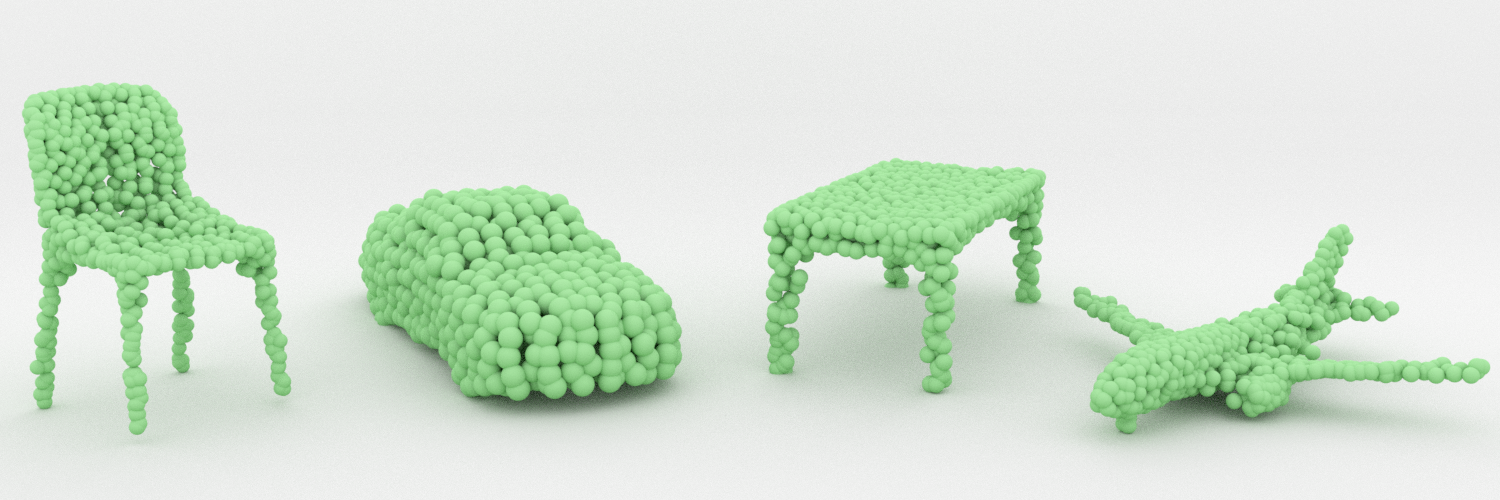

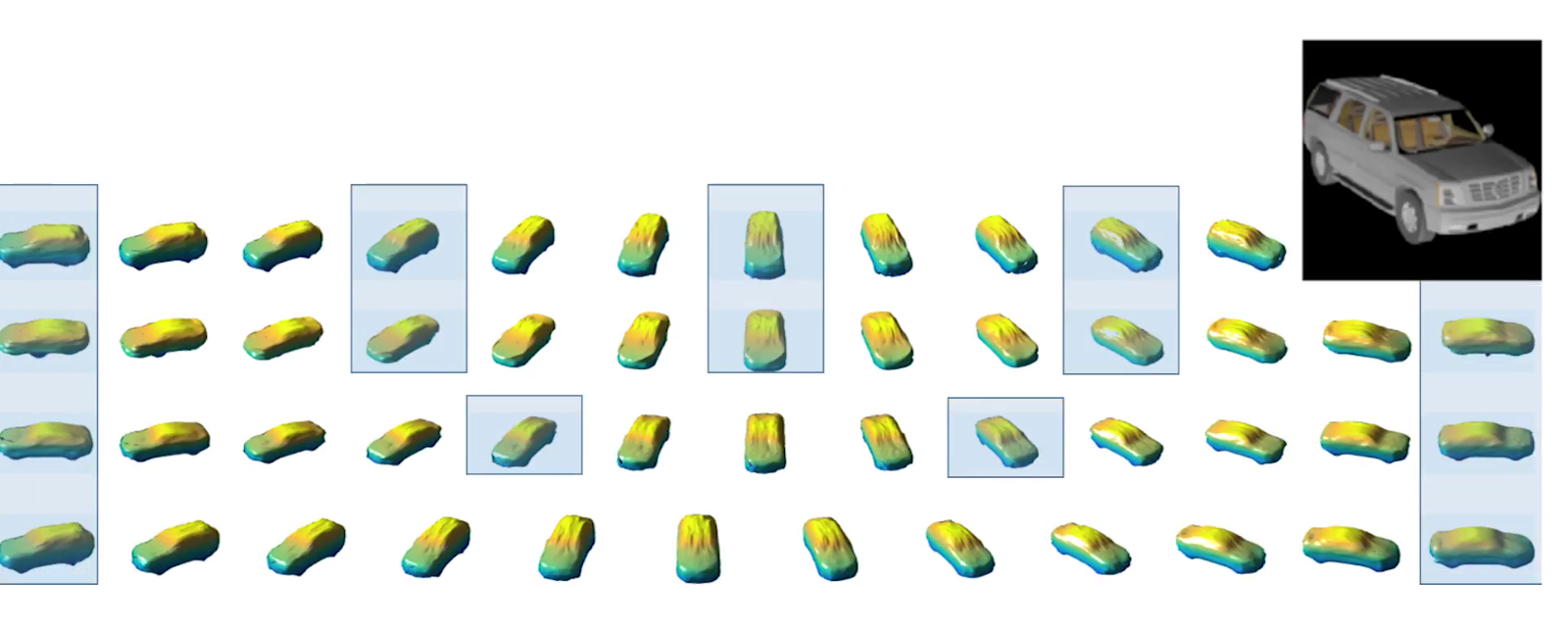

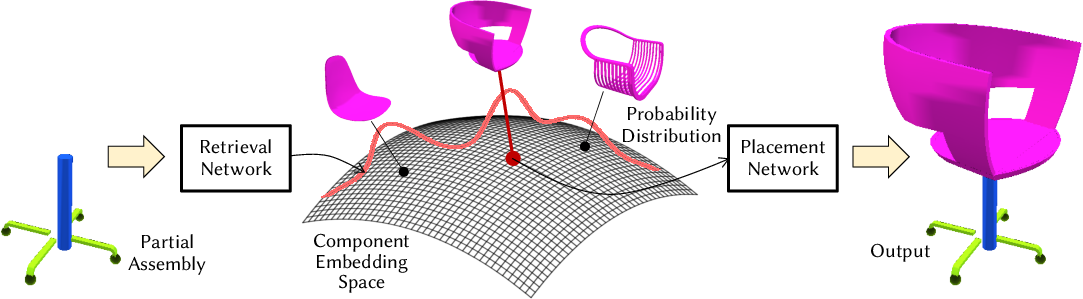

Deep Learning Methods


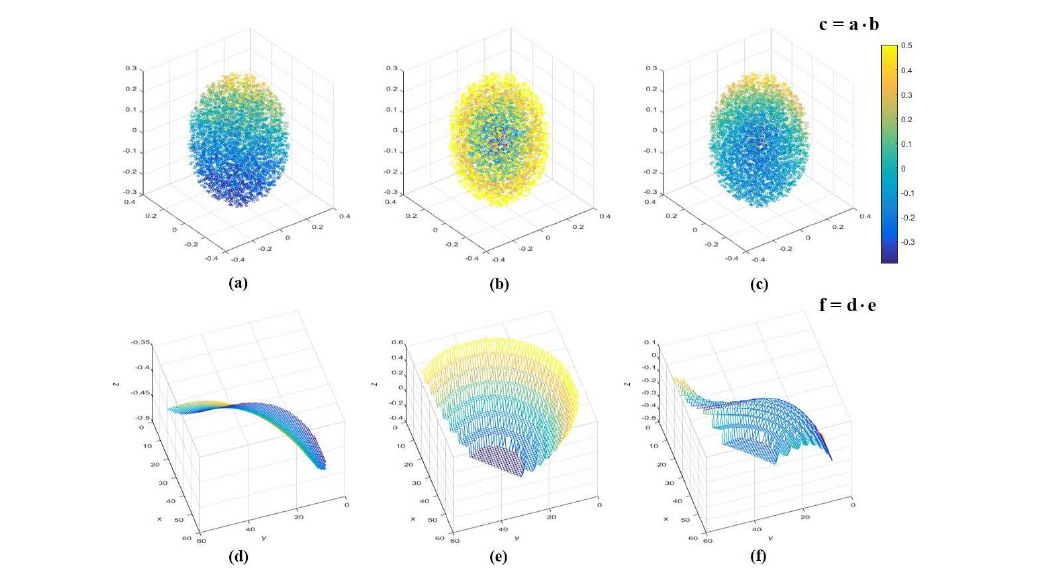

An energy-based 3D shape descriptor network is a deep energy-based model for volumetric shape patterns. The maximum likelihood training of the model follows an "analysis by synthesis" scheme and can be interpreted as a mode seeking and mode shifting process. The model can synthesize 3D shape patterns by sampling from the probability distribution via MCMC such as Langevin dynamics. Experiments demonstrate that the proposed model can generate realistic 3D shape patterns and can be useful for 3D shape analysis.
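For readers unfamiliar with Langevin dynamics, the following is a minimal one-dimensional sketch of the sampling step. It is not the paper's model: the quadratic energy E(x) = x²/2 stands in for a learned network, and `grad_energy`, `langevin_sample`, the step size, and the chain counts are assumptions chosen so the example runs with the standard library alone.

```python
import random


def grad_energy(x):
    """Gradient of the toy energy E(x) = x**2 / 2 (a standard normal target)."""
    return x


def langevin_sample(n_steps, step_size, rng, x0=0.0):
    """Run one unadjusted Langevin chain and return its final state.

    Each step moves downhill on the energy and adds Gaussian noise:
        x <- x - (step_size**2 / 2) * dE/dx + step_size * noise
    """
    x = x0
    for _ in range(n_steps):
        noise = rng.gauss(0.0, 1.0)
        x = x - 0.5 * step_size ** 2 * grad_energy(x) + step_size * noise
    return x


rng = random.Random(0)
samples = [langevin_sample(100, 0.5, rng) for _ in range(2000)]

mean = sum(samples) / len(samples)
variance = sum((s - mean) ** 2 for s in samples) / len(samples)
```

With this toy energy the samples should have a mean near 0 and a variance near 1, with a small bias from the finite step size. In an energy-based shape model the same update would be applied to every voxel value, using the gradient of the network's energy with respect to its input.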


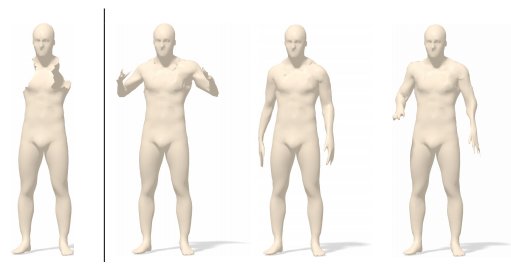

CoMA: Convolutional Mesh Autoencoders (2018) [Paper][Code (TF)][Code (PyTorch)][Code (PyTorch)]

CoMA is a versatile model that learns a non-linear representation of a face using spectral convolutions on a mesh surface. CoMA introduces mesh sampling operations that enable a hierarchical mesh representation that captures non-linear variations in shape and expression at multiple scales within the model.

RingNet: 3D Face Reconstruction from Single Images (2019) [Paper][Code]

VOCA: Voice Operated Character Animation (2019) [Paper][Video][Code]

VOCA is a simple and generic speech-driven facial animation framework that works across a range of identities. The codebase demonstrates how to synthesize realistic character animations given an arbitrary speech signal and a static character mesh.

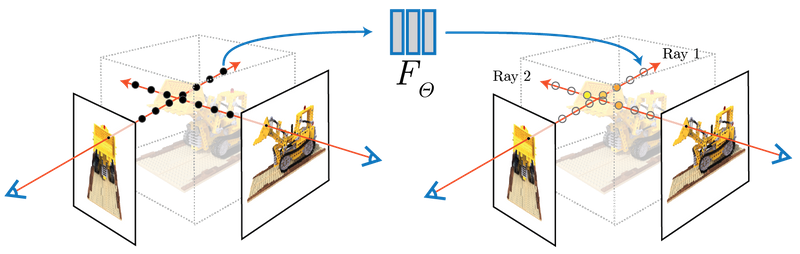

NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis [Project][Paper][Code]

This paper proposes a deep 3D energy-based model to represent volumetric shapes. The maximum likelihood training of the model follows an "analysis by synthesis" scheme. Experiments demonstrate that the proposed model can generate high-quality 3D shape patterns and can be useful for a wide variety of 3D shape analysis tasks.

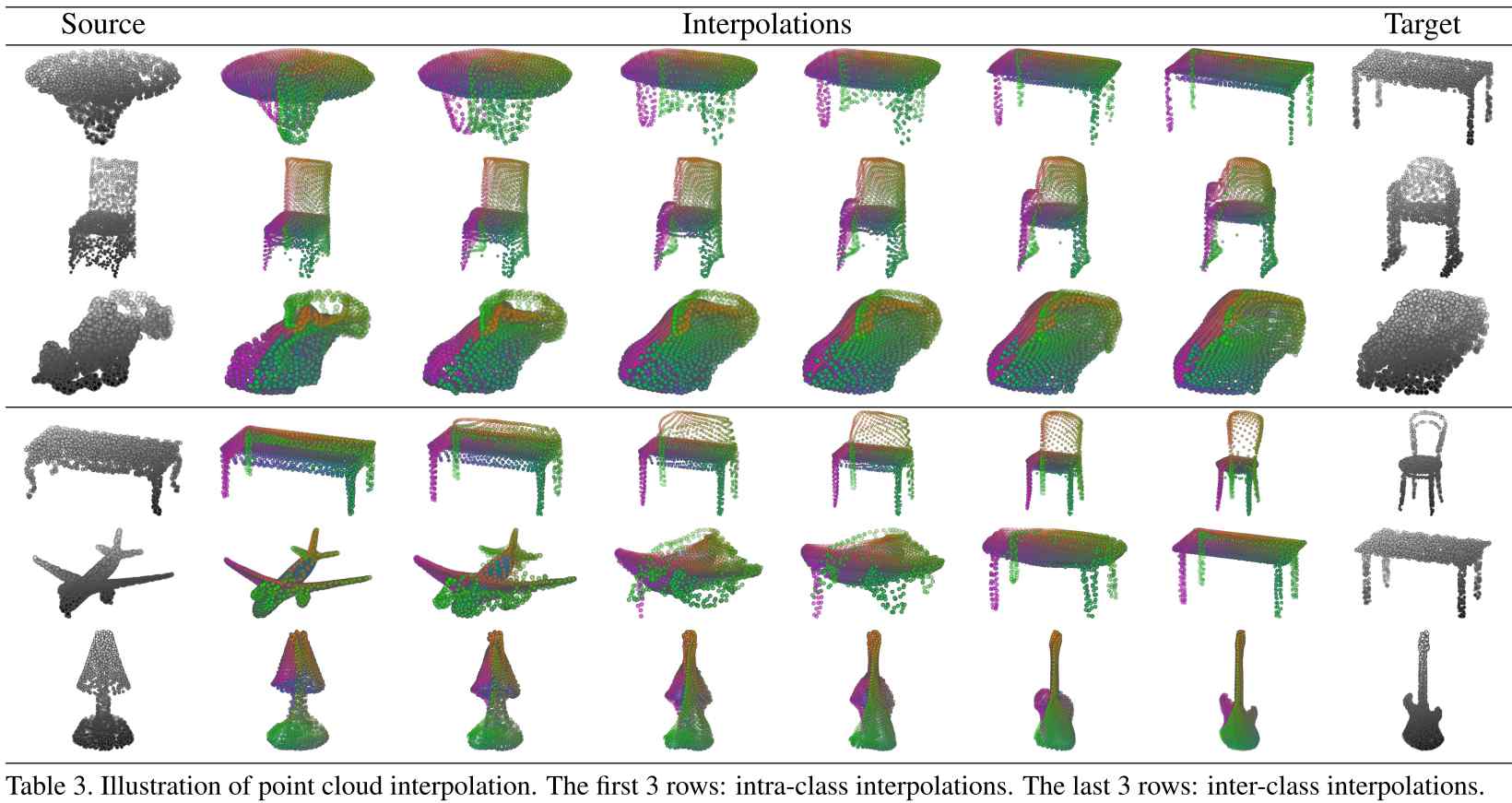

Generative PointNet is an energy-based model of unordered point clouds, where the energy function is parameterized by an input-permutation-invariant bottom-up neural network. The model can be trained by MCMC-based maximum likelihood learning, or a short-run MCMC toward the energy-based model as a flow-like generator for point cloud reconstruction and interpolation. The learned point cloud representation can be useful for point cloud classification.

Shape My Face (SMF) is a point cloud to mesh auto-encoder for the registration of raw human face scans, and the generation of synthetic human faces. SMF leverages a modified PointNet encoder with a visual attention module and differentiable surface sampling to be independent of the original surface representation and reduce the need for pre-processing. Mesh convolution decoders are combined with a specialized PCA model of the mouth, and smoothly blended based on geodesic distances, to create a compact model that is highly robust to noise. SMF is applied to register and perform expression transfer on scans captured in-the-wild with an iPhone depth camera represented either as meshes or point clouds.

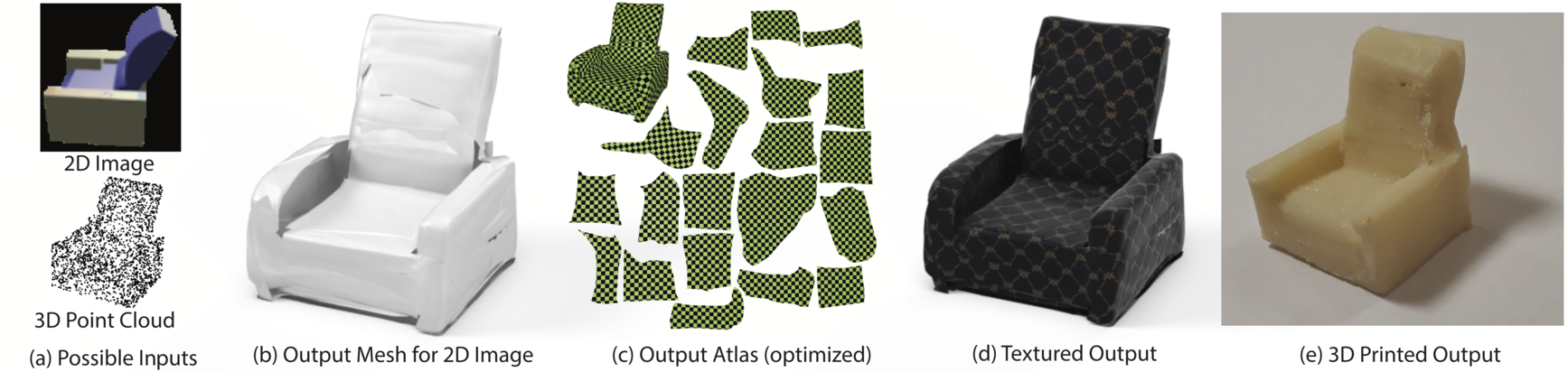

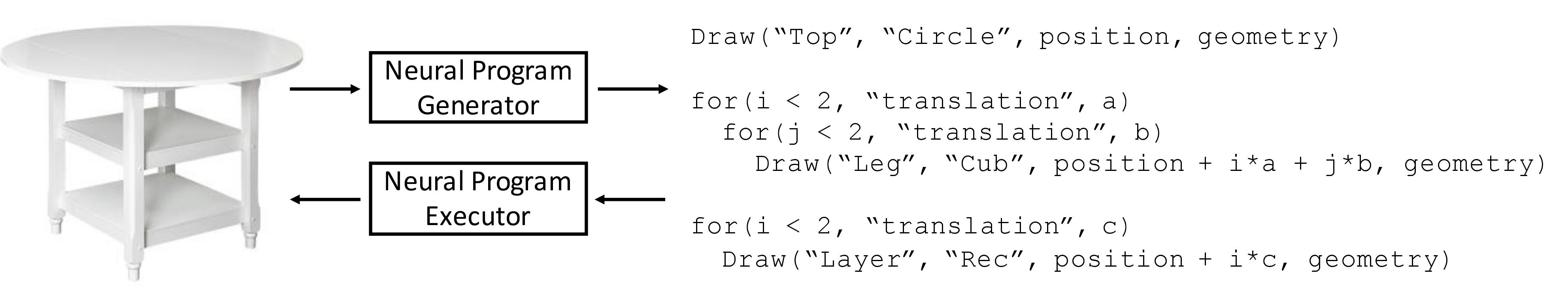

We advocate the use of implicit fields for learning generative models of shapes and introduce an implicit field decoder, called IM-NET, for shape generation, aimed at improving the visual quality of the generated shapes. An implicit field assigns a value to each point in 3D space, so that a shape can be extracted as an iso-surface. IM-NET is trained to perform this assignment by means of a binary classifier. Specifically, it takes a point coordinate, along with a feature vector encoding a shape, and outputs a value which indicates whether the point is outside the shape or not. By replacing conventional decoders by our implicit decoder for representation learning (via IM-AE) and shape generation (via IM-GAN), we demonstrate superior results for tasks such as generative shape modeling, interpolation, and single-view 3D reconstruction, particularly in terms of visual quality.
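The idea of an implicit field and its iso-surface can be sketched without any learning. In the example below an analytic sphere stands in for the trained decoder, so `occupancy`, the 0.5 threshold, the convention that 1.0 means inside, and the grid resolution are all assumptions for illustration; none of it is IM-NET's actual code.

```python
import math

RADIUS = 0.5       # radius of the stand-in shape
RES = 32           # grid resolution per axis over the cube [-1, 1]^3
CELL = 2.0 / RES   # edge length of one grid cell


def occupancy(point):
    """Toy implicit field: 1.0 inside a sphere of radius RADIUS, else 0.0."""
    return 1.0 if math.sqrt(sum(c * c for c in point)) <= RADIUS else 0.0


def cell_center(i, j, k):
    """World-space center of grid cell (i, j, k)."""
    return tuple(-1.0 + (idx + 0.5) * CELL for idx in (i, j, k))


# Query the field at every cell center and keep the cells classified as inside.
inside = {
    (i, j, k)
    for i in range(RES) for j in range(RES) for k in range(RES)
    if occupancy(cell_center(i, j, k)) > 0.5
}

# Cells on the 0.5 iso-surface: inside cells with at least one outside neighbor.
OFFSETS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
surface = {
    cell for cell in inside
    if any(tuple(a + b for a, b in zip(cell, off)) not in inside
           for off in OFFSETS)
}

volume_estimate = len(inside) * CELL ** 3
```

Because the field can be queried at any coordinate, the same function could be sampled on a finer grid to get a sharper surface. A mesh extractor such as marching cubes would normally replace the crude boundary-cell test used here.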

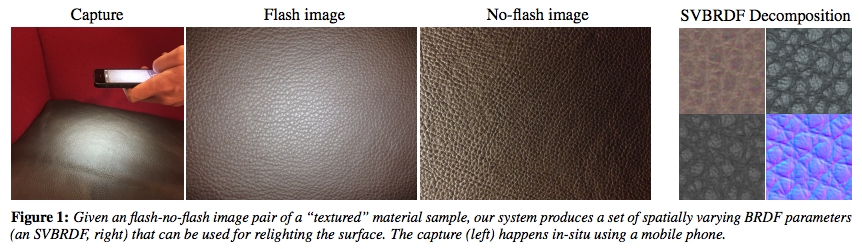

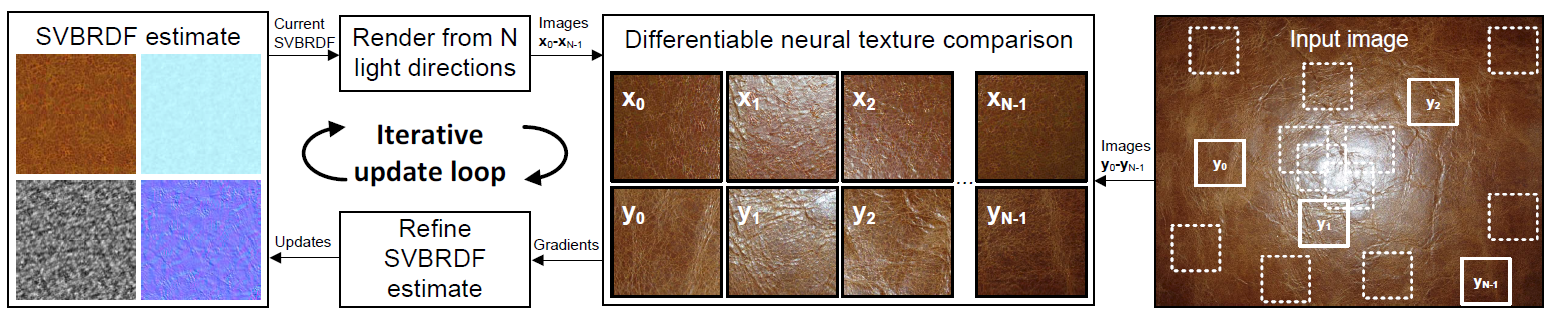

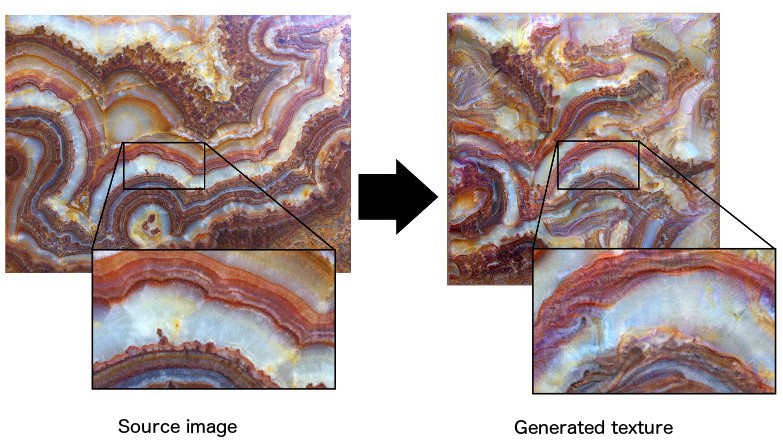

Texture/Material Analysis and Synthesis

Texture Synthesis Using Convolutional Neural Networks (2015) [Paper]

Two-Shot SVBRDF Capture for Stationary Materials (SIGGRAPH 2015) [Paper]

Reflectance Modeling by Neural Texture Synthesis (2016) [Paper]

Modeling Surface Appearance from a Single Photo using Self-augmented Convolutional Neural Networks (2017) [Paper]

High-Resolution Multi-Scale Neural Texture Synthesis (2017) [Paper]

Reflectance and Natural Illumination from Single Material Specular Objects Using Deep Learning (2017) [Paper]

Joint Material and Illumination Estimation from Photo Sets in the Wild (2017) [Paper]

What Is Around The Camera? (2017) [Paper]

TextureGAN: Controlling Deep Image Synthesis with Texture Patches (2018 CVPR) [Paper]

Gaussian Material Synthesis (2018 SIGGRAPH) [Paper]

Non-stationary Texture Synthesis by Adversarial Expansion (2018 SIGGRAPH) [Paper]

Synthesized Texture Quality Assessment via Multi-scale Spatial and Statistical Texture Attributes of Image and Gradient Magnitude Coefficients (2018 CVPR) [Paper]

LIME: Live Intrinsic Material Estimation (2018 CVPR) [Paper]

Single-Image SVBRDF Capture with a Rendering-Aware Deep Network (2018) [Paper]

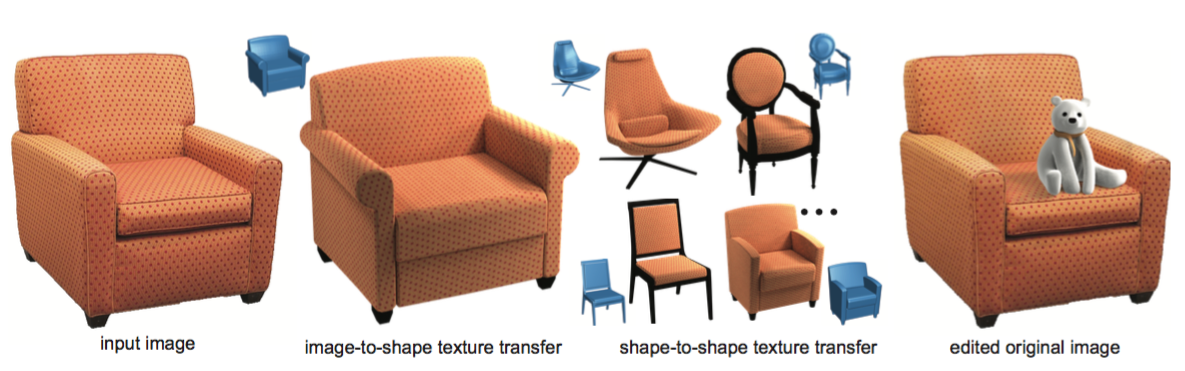

PhotoShape: Photorealistic Materials for Large-Scale Shape Collections (2018) [Paper]

Learning Material-Aware Local Descriptors for 3D Shapes (2018) [Paper]


FrankenGAN: Guided Detail Synthesis for Building Mass Models using Style-Synchronized GANs (2018 SIGGRAPH Asia) [Paper]

Style Learning and Transfer

Style-Content Separation by Anisotropic Part Scales (2010) [Paper]

Pattern Preserving Garment Transfer (2012) [Paper]

Analogy-Driven 3D Style Transfer (2014) [Paper]

Elements of Style: Learning Perceptual Shape Style Similarity (2015) [Paper] [Code]

Functionality Preserving Shape Style Transfer (2016) [Paper] [Code]

Unsupervised Texture Transfer from Images to Model Collections (2016) [Paper]

Learning Detail Transfer based on Geometric Features (2017) [Paper]

Co-Locating Style-Defining Elements on 3D Shapes (2017) [Paper]

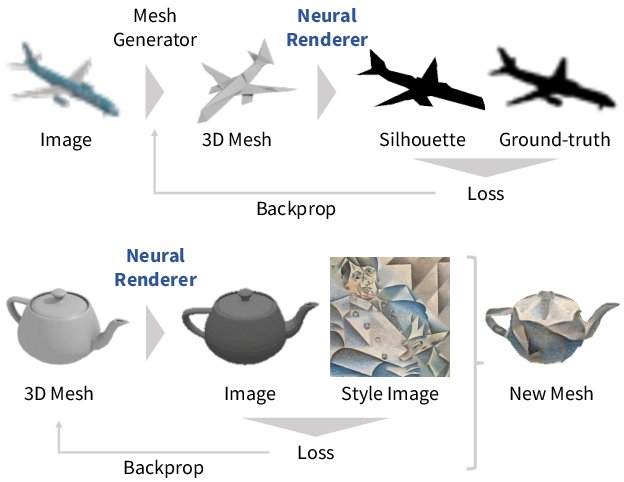

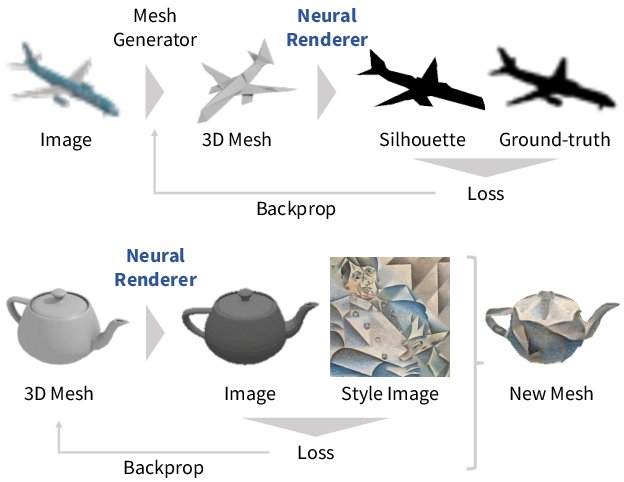

Neural 3D Mesh Renderer (2017) [Paper] [Code]

Appearance Modeling via Proxy-to-Image Alignment (2018) [Paper]

Automatic Unpaired Shape Deformation Transfer (SIGGRAPH Asia 2018) [Paper]

3DSNet: Unsupervised Shape-to-Shape 3D Style Transfer (2020) [Paper] [Code]

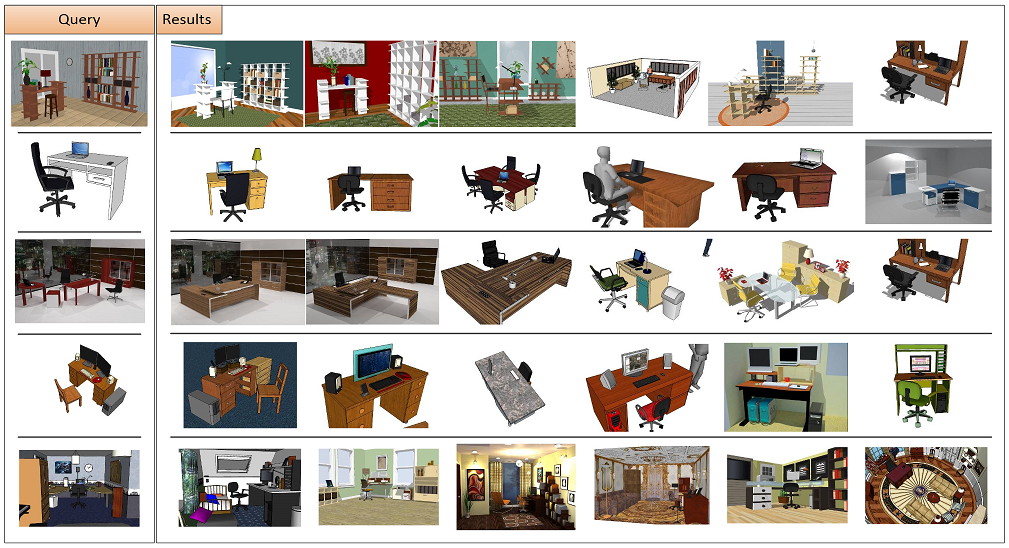

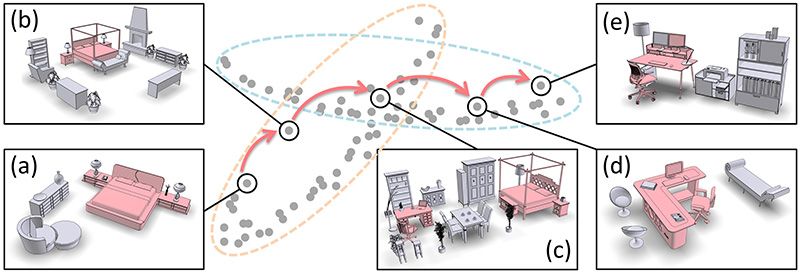

Scene Synthesis/Reconstruction

Make It Habitation: Automatic Optimization of Piece of furniture Arrangement (2011, SIGGRAPH) [Paper]

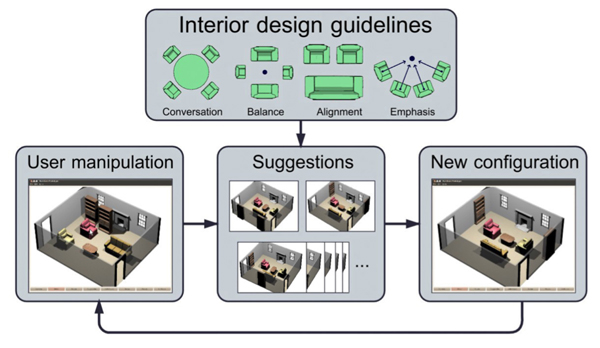

Interactive Furniture Layout Using Interior Blueprint Guidelines (2011) [Paper]

Synthesizing Open Worlds with Constraints using Locally Annealed Reversible Jump MCMC (2012) [Paper]

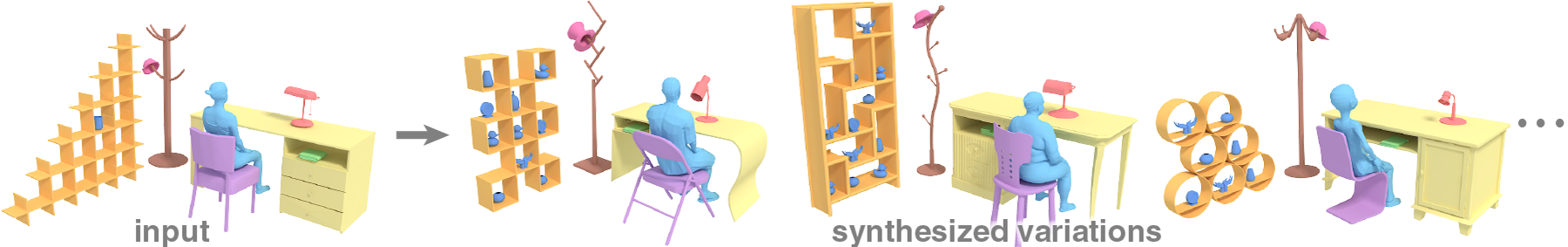

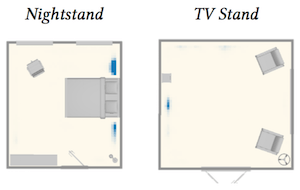


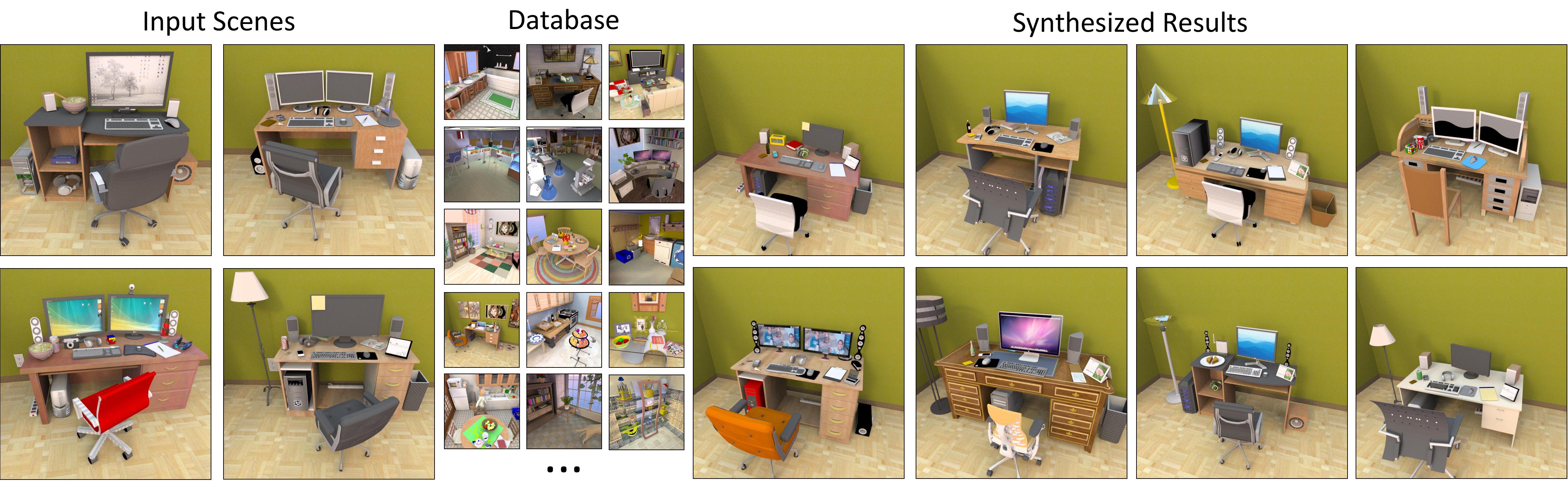

Example-based Synthesis of 3D Object Arrangements (2012 SIGGRAPH Asia) [Paper]

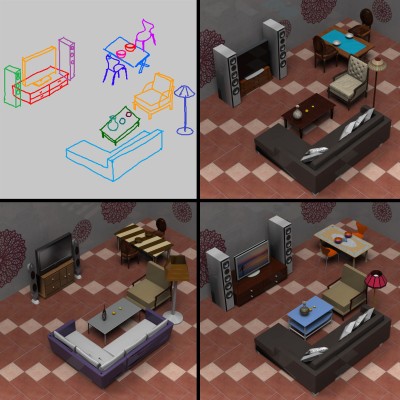

Sketch2Scene: Sketch-based Co-retrieval and Co-placement of 3D Models (2013) [Paper]

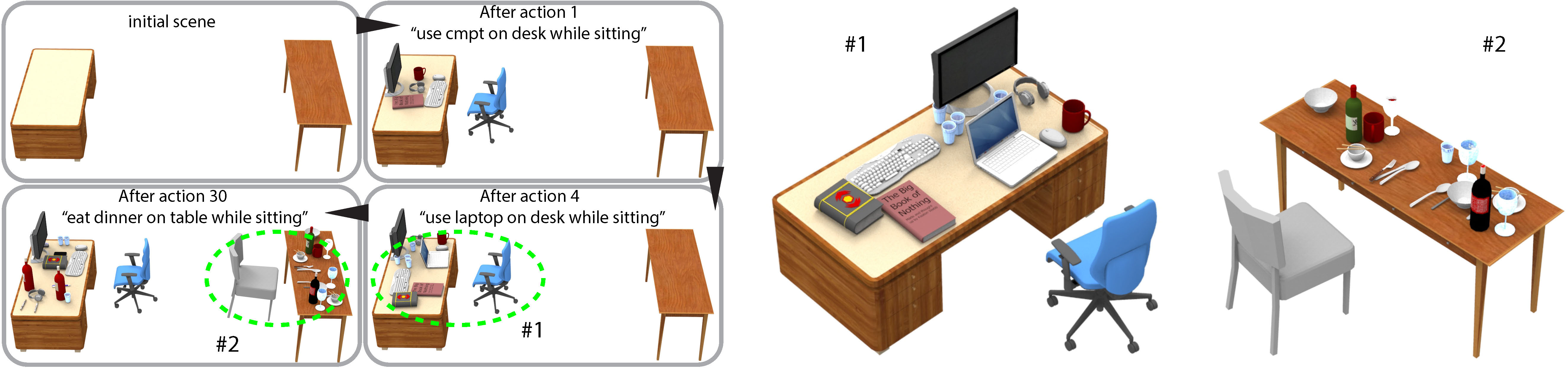

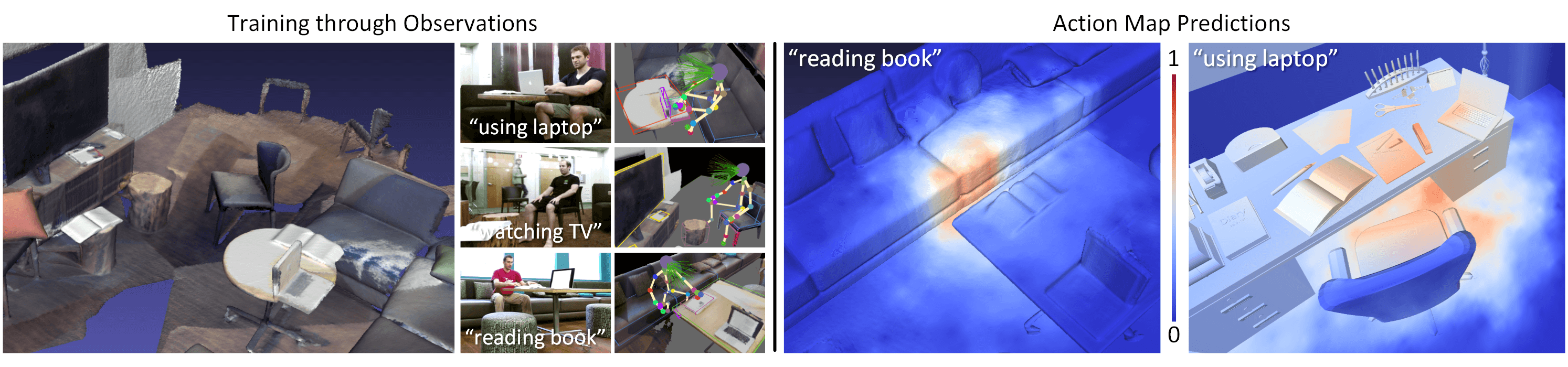

Action-Driven 3D Indoor Scene Evolution (2016) [Paper]

The Clutterpalette: An Interactive Tool for Detailing Indoor Scenes (2015) [Paper]

Image2Scene: Transforming Style of 3D Room (2015) [Paper]

Relationship Templates for Creating Scene Variations (2016) [Paper]

IM2CAD (2017) [Paper]

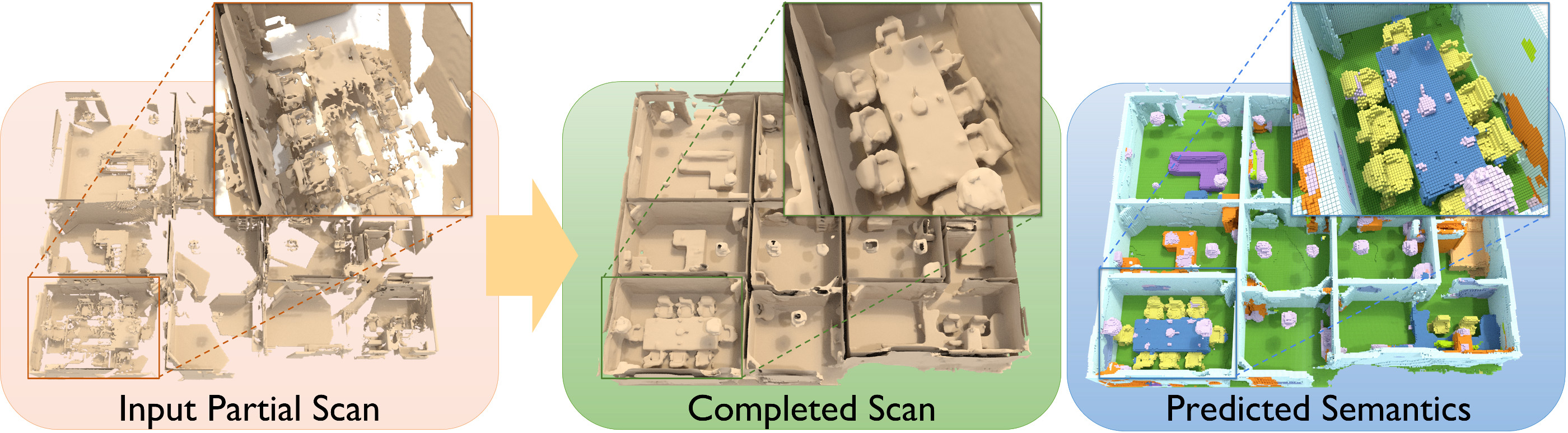

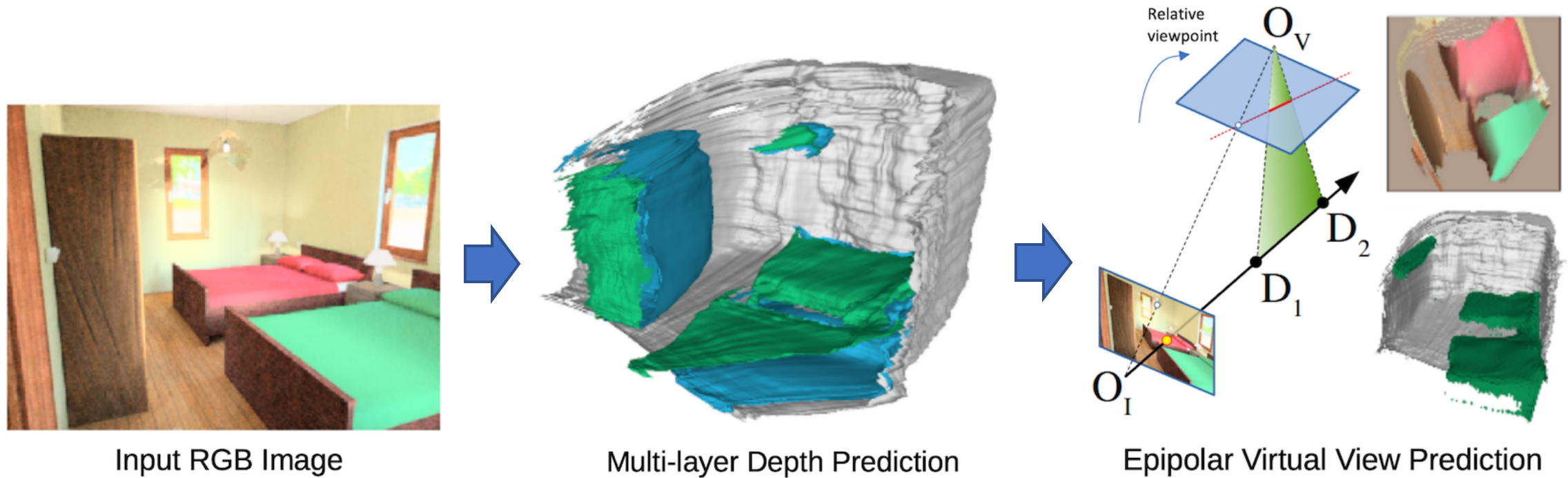

Predicting Complete 3D Models of Indoor Scenes (2017) [Paper]

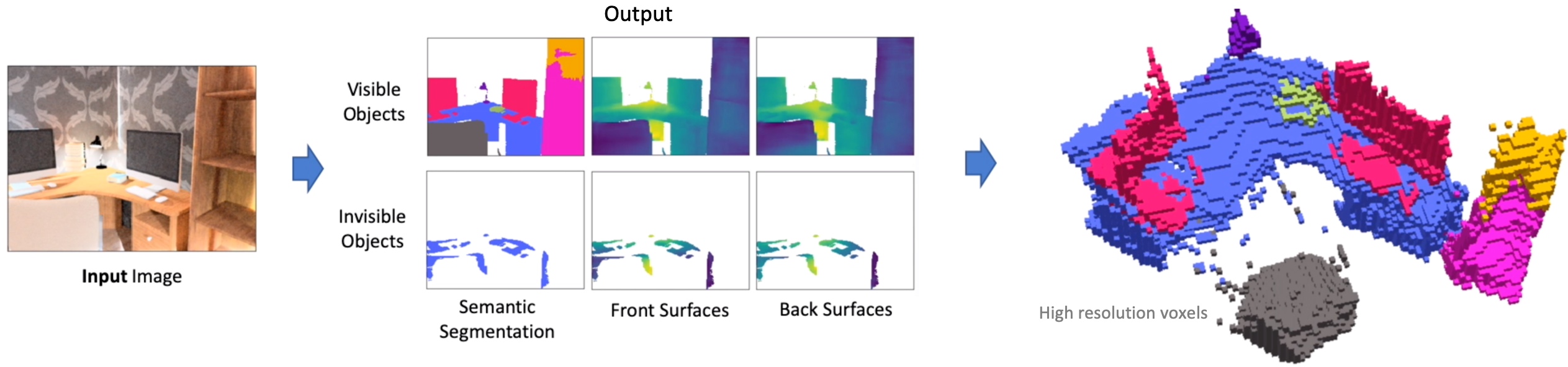

Complete 3D Scene Parsing from Single RGBD Image (2017) [Paper]

Raster-to-Vector: Revisiting Floorplan Transformation (2017, ICCV) [Paper] [Code]

Fully Convolutional Refined Auto-Encoding Generative Adversarial Networks for 3D Multi Object Scenes (2017) [Blog]

Adaptive Synthesis of Indoor Scenes via Activity-Associated Object Relation Graphs (2017 SIGGRAPH Asia) [Paper]

Automated Interior Design Using a Genetic Algorithm (2017) [Paper]

SceneSuggest: Context-driven 3D Scene Design (2017) [Paper]

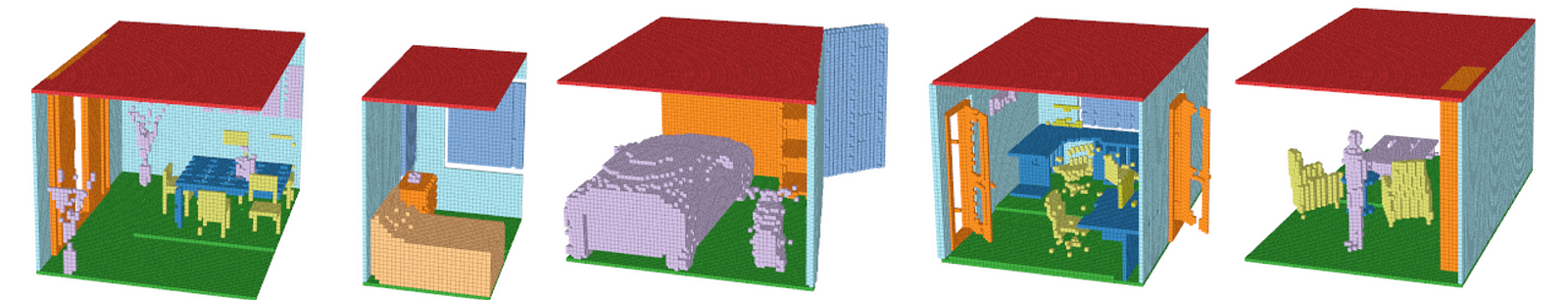


A fully end-to-end deep learning approach for real-time simultaneous 3D reconstruction and material recognition (2017) [Paper]


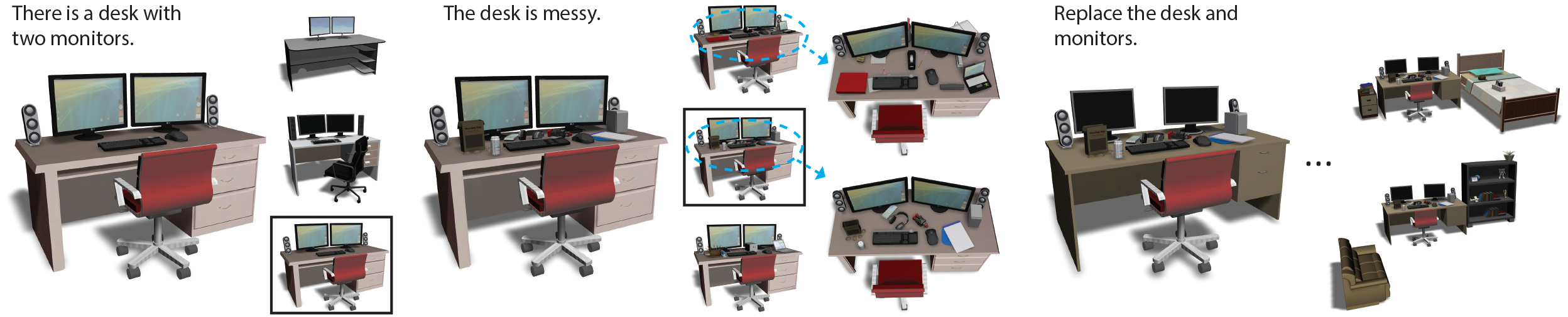

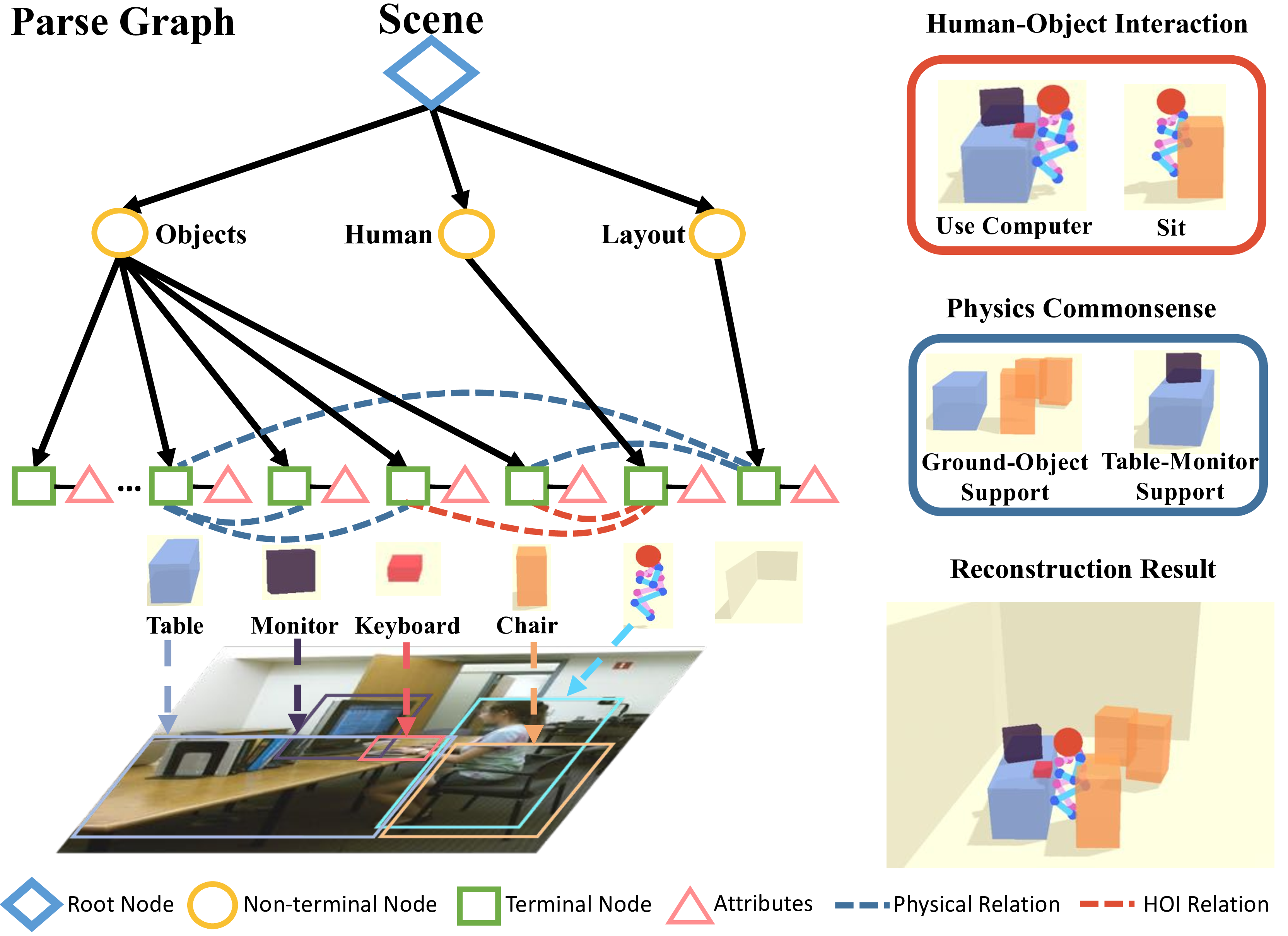

Human-centric Indoor Scene Synthesis Using Stochastic Grammar (2018, CVPR) [Paper] [Supplementary] [Code]

Deep Convolutional Priors for Indoor Scene Synthesis (2018) [Paper]

Configurable 3D Scene Synthesis and 2D Image Rendering with Per-Pixel Ground Truth using Stochastic Grammars (2018) [Paper]

Holistic 3D Scene Parsing and Reconstruction from a Single RGB Image (ECCV 2018) [Paper]

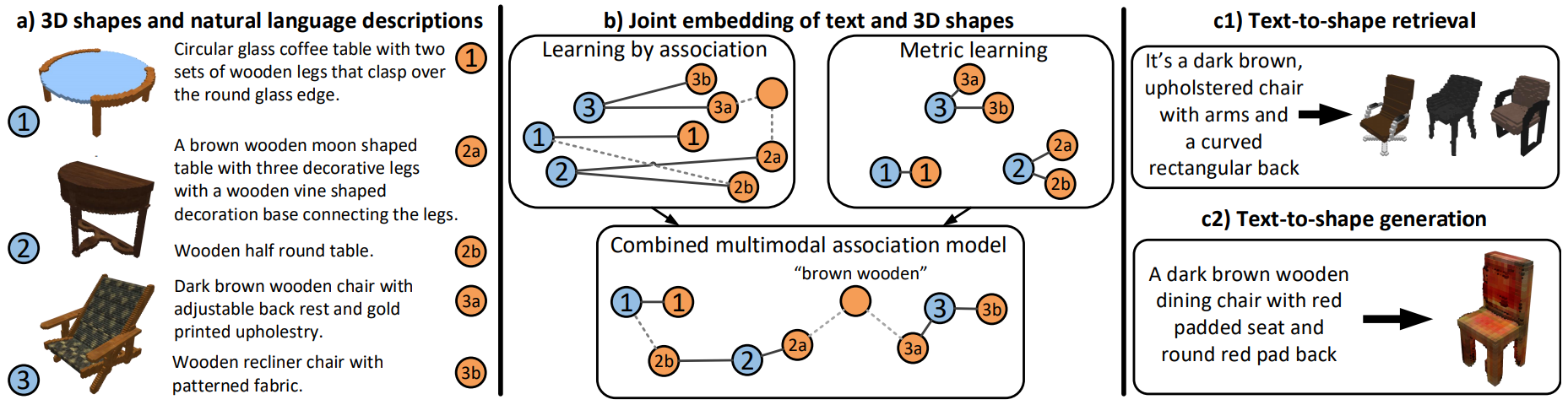

Language-Driven Synthesis of 3D Scenes from Scene Databases (SIGGRAPH Asia 2018) [Paper]

Deep Generative Modeling for Scene Synthesis via Hybrid Representations (2018) [Paper]


GRAINS: Generative Recursive Autoencoders for INdoor Scenes (2018) [Paper]

SEETHROUGH: Finding Objects in Heavily Occluded Indoor Scene Images (2018) [Paper]

A Survey of 3D Indoor Scene Synthesis (2020) [Paper]

SceneCAD: Predicting Object Alignments and Layouts in RGB-D Scans (2020) [Paper]

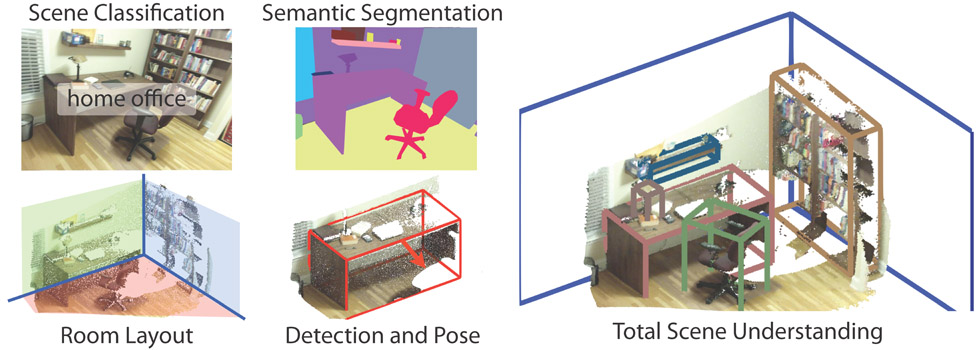

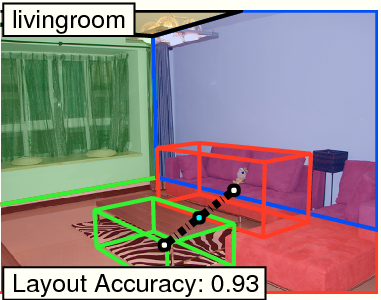

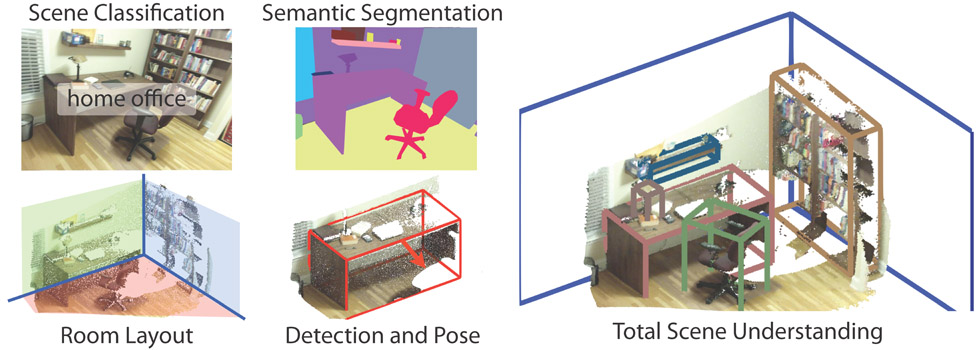

Scene Understanding (Another more detailed repository)

Recovering the Spatial Layout of Cluttered Rooms (2009) [Paper]

Characterizing Structural Relationships in Scenes Using Graph Kernels (2011 SIGGRAPH) [Paper]

Understanding Indoor Scenes Using 3D Geometric Phrases (2013) [Paper]

Organizing Heterogeneous Scene Collections through Contextual Focal Points (2014 SIGGRAPH) [Paper]

SceneGrok: Inferring Action Maps in 3D Environments (2014, SIGGRAPH) [Paper]

PanoContext: A Whole-room 3D Context Model for Panoramic Scene Understanding (2014) [Paper]

Learning Informative Edge Maps for Indoor Scene Layout Prediction (2015) [Paper]

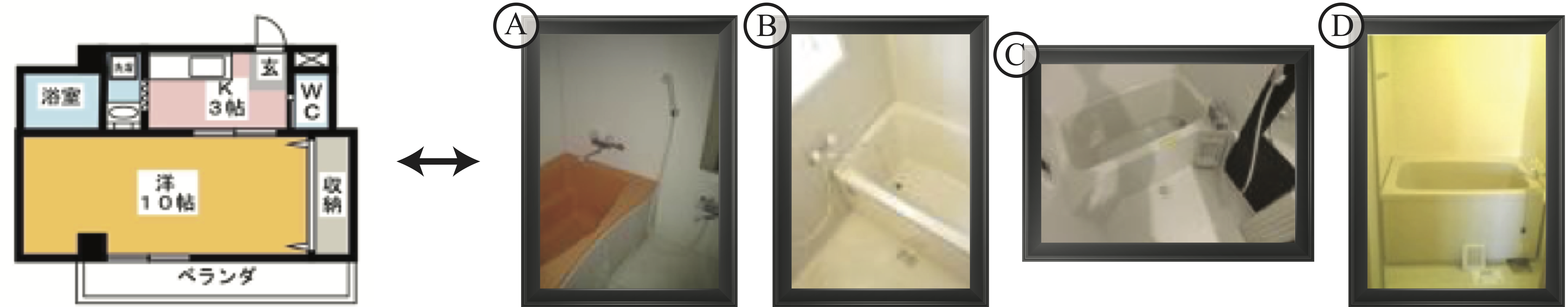

Rent3D: Floor-Plan Priors for Monocular Layout Estimation (2015) [Paper]

A Coarse-to-Fine Indoor Layout Estimation (CFILE) Method (2016) [Paper]


DeLay: Robust Spatial Layout Estimation for Cluttered Indoor Scenes (2016) [Paper]

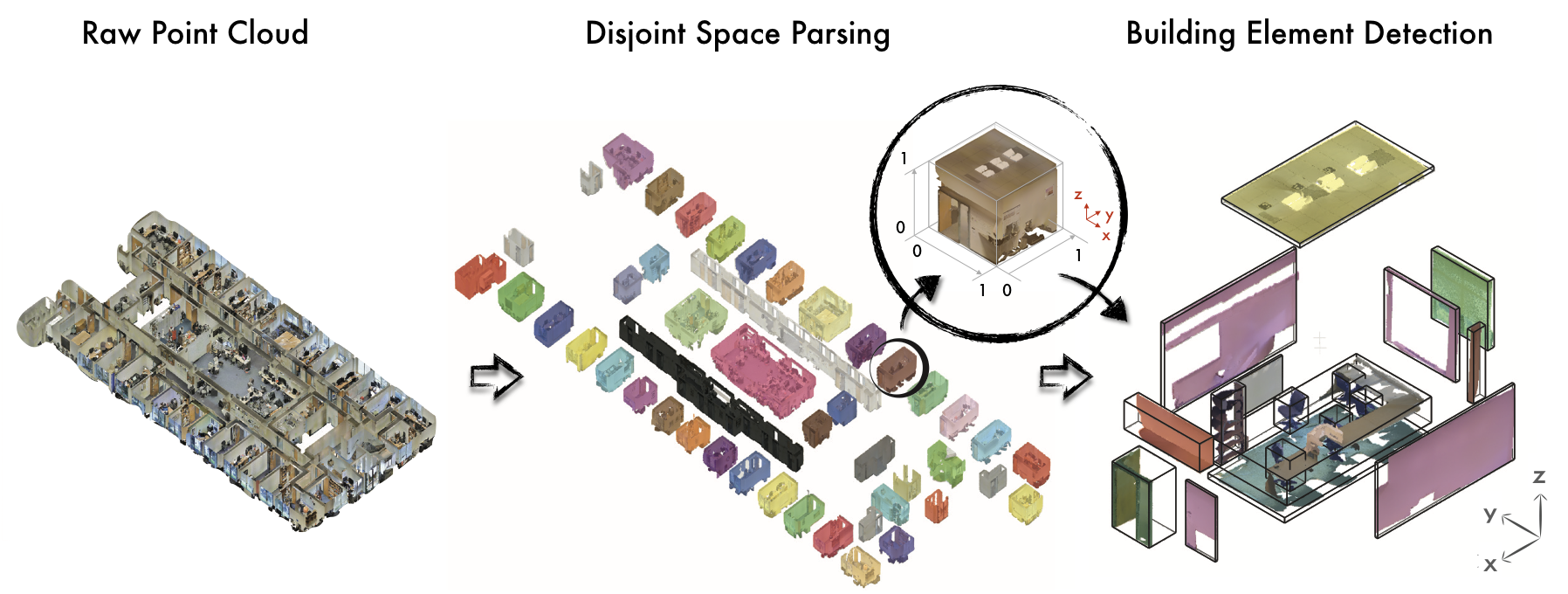

3D Semantic Parsing of Large-Scale Indoor Spaces (2016) [Paper] [Code]

Single Image 3D Interpreter Network (2016) [Paper] [Code]

Deep Multi-Modal Image Correspondence Learning (2016) [Paper]

Physically-Based Rendering for Indoor Scene Understanding Using Convolutional Neural Networks (2017) [Paper] [Code] [Code] [Code] [Code]

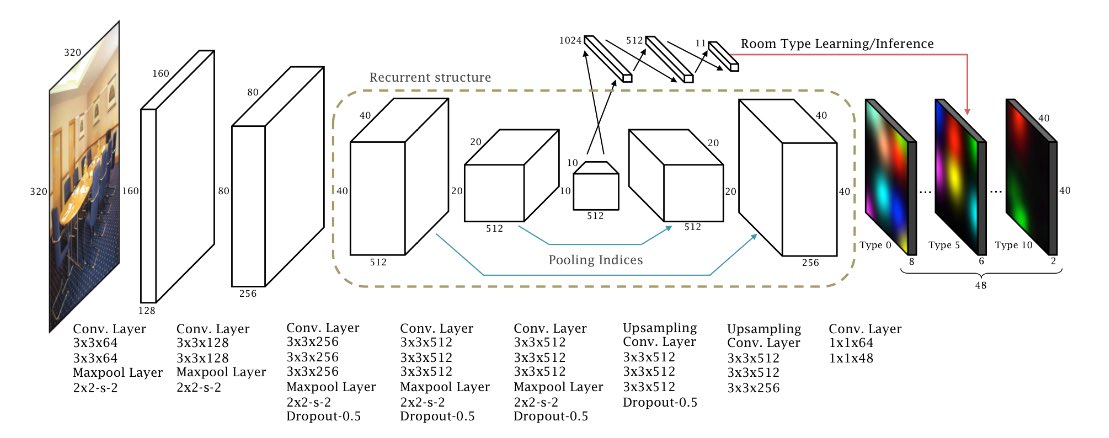

RoomNet: End-to-End Room Layout Estimation (2017) [Paper]

SUN RGB-D: A RGB-D Scene Understanding Benchmark Suite (2017) [Paper]

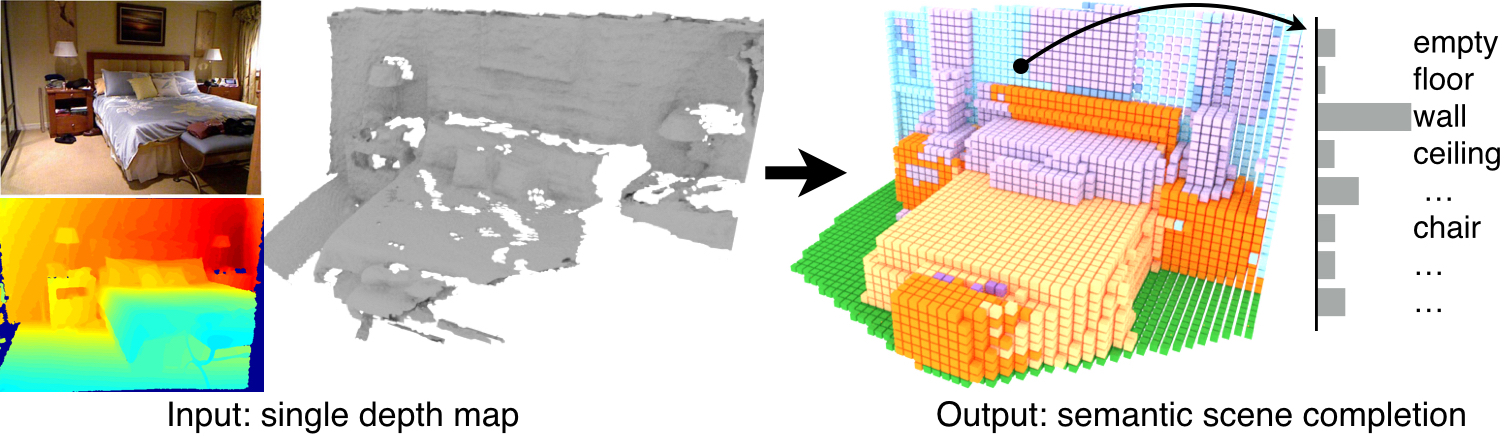

Semantic Scene Completion from a Single Depth Image (2017) [Paper] [Code]

Factoring Shape, Pose, and Layout from the 2D Image of a 3D Scene (2018 CVPR) [Paper] [Code]

LayoutNet: Reconstructing the 3D Room Layout from a Single RGB Image (2018 CVPR) [Paper] [Code]

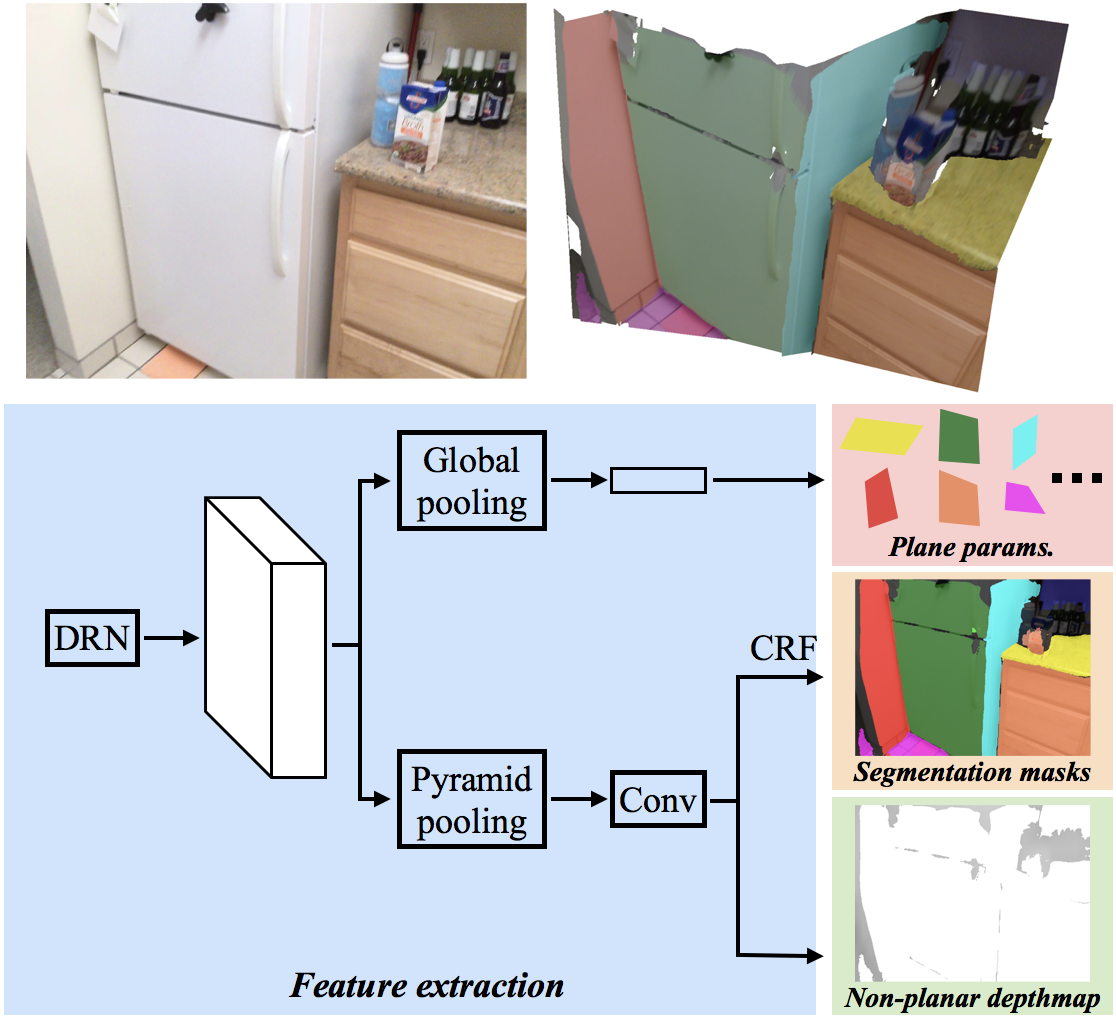

PlaneNet: Piece-wise Planar Reconstruction from a Single RGB Image (2018 CVPR) [Paper] [Code]

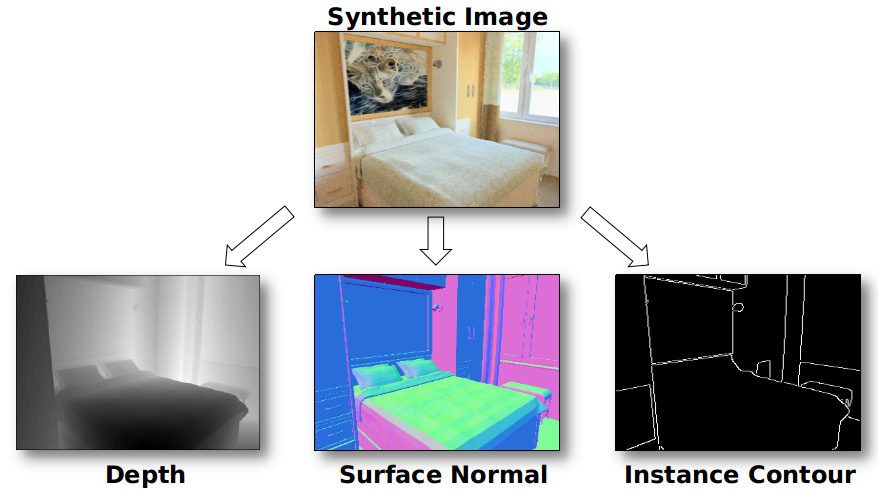

Cross-Domain Self-supervised Multi-task Feature Learning using Synthetic Imagery (2018 CVPR) [Paper]

Pano2CAD: Room Layout From A Single Panorama Image (2018 CVPR) [Paper]

Automatic 3D Indoor Scene Modeling from Single Panorama (2018 CVPR) [Paper]

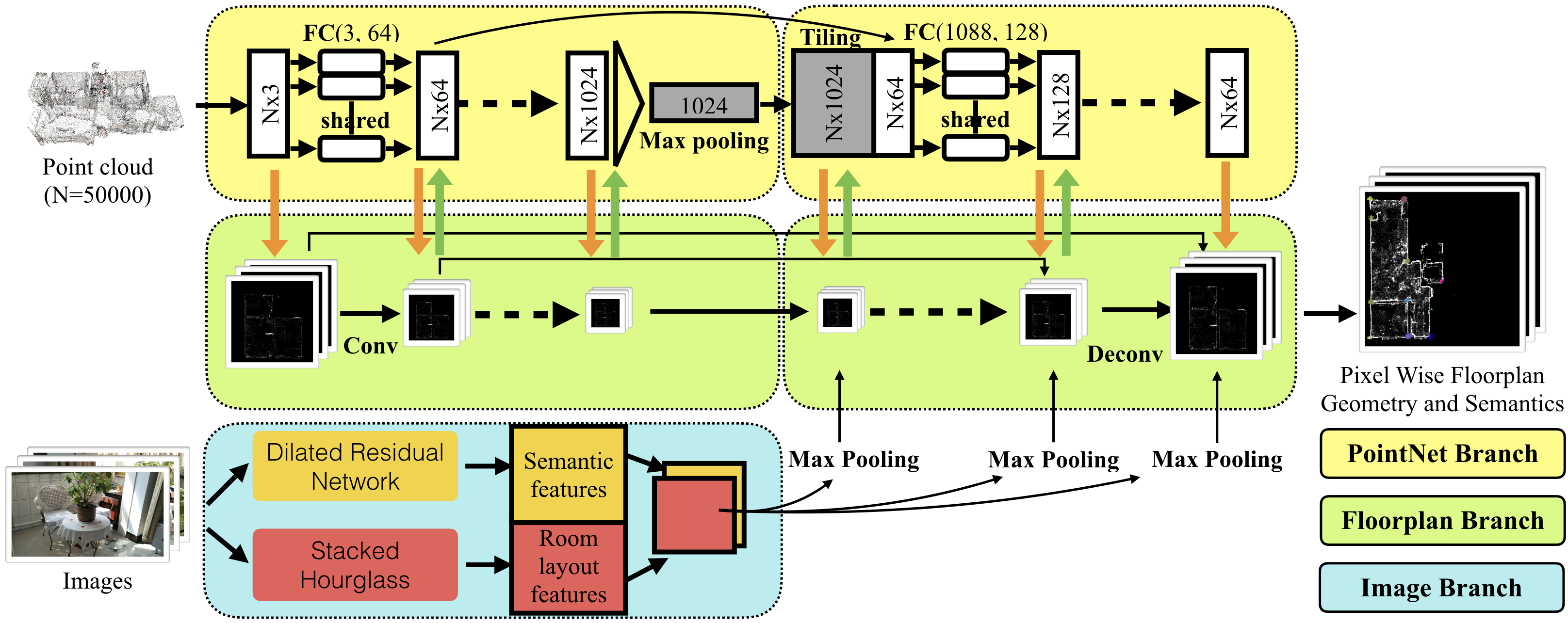


Single-Image Piece-wise Planar 3D Reconstruction via Associative Embedding (2019 CVPR) [Paper] [Code]

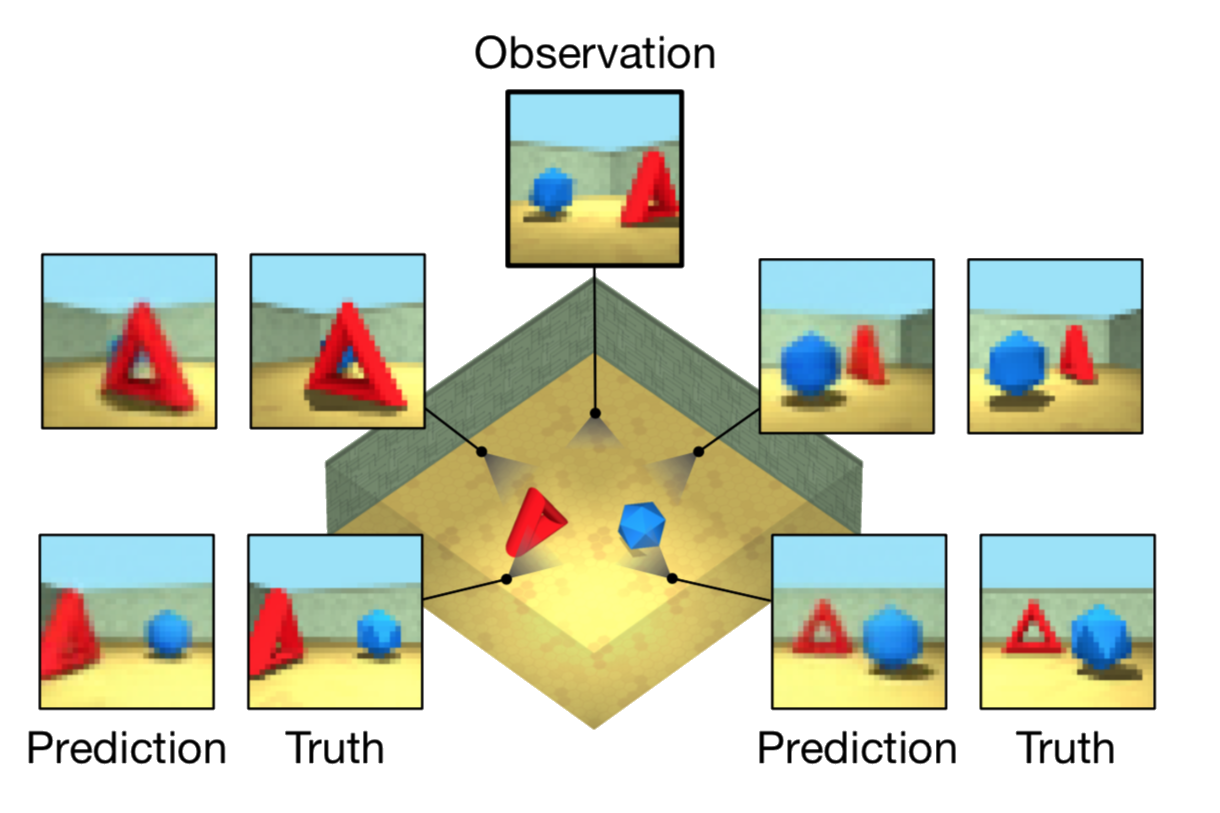

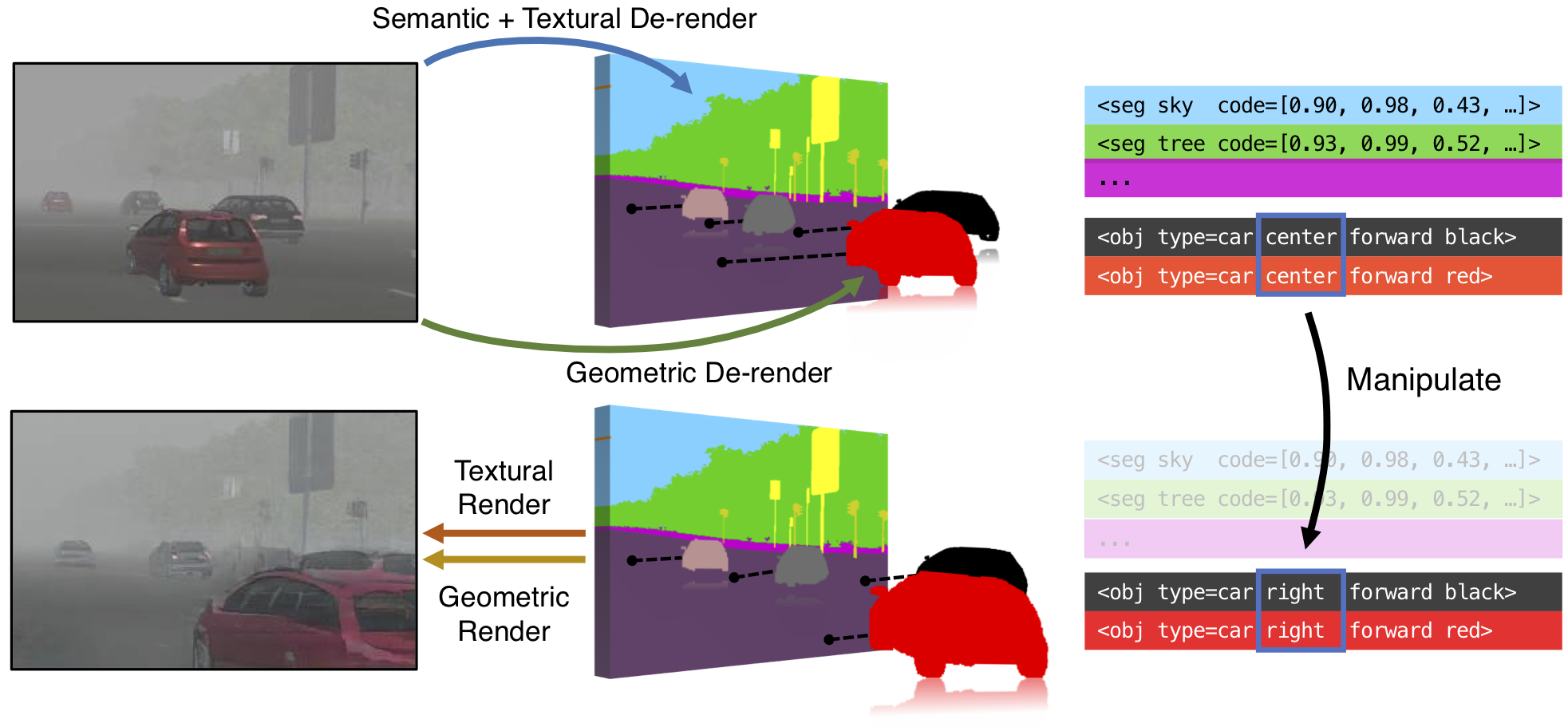

3D-Aware Scene Manipulation via Inverse Graphics (NeurIPS 2018) [Paper] [Code]

PerspectiveNet: 3D Object Detection from a Single RGB Image via Perspective Points (NIPS 2019) [Paper]

Holistic++ Scene Understanding: Single-view 3D Holistic Scene Parsing and Human Pose Estimation with Human-Object Interaction and Physical Commonsense (ICCV 2019) [Paper & Code]

Source: https://github.com/timzhang642/3D-Machine-Learning
